Visual Odometry

Visual Odometry (VO) estimates a robot's position and orientation from a stream of camera images. For AGVs working where GPS is unavailable, it delivers accurate localization from comparatively inexpensive cameras, with no tape or floor markers to install.

Core Concepts

Feature Detection

The algorithm picks out distinctive features in each image, such as corners or edges, to serve as landmarks.
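
A minimal sketch of one classic way to score "cornerness", the Harris response, in plain Python. It is an illustrative choice, not necessarily the detector a given VO system uses; the function name, the 3x3 window, and `k = 0.04` are assumptions made for this example.

```python
def harris_response(img, k=0.04):
    """Return a Harris corner score for every pixel of a grayscale image.

    img is a list of rows of numbers; k is the usual empirical Harris constant.
    Border pixels keep a score of zero.
    """
    h, w = len(img), len(img[0])
    # Central-difference image gradients (borders left at zero).
    ix = [[0.0] * w for _ in range(h)]
    iy = [[0.0] * w for _ in range(h)]
    for y in range(1, h - 1):
        for x in range(1, w - 1):
            ix[y][x] = (img[y][x + 1] - img[y][x - 1]) / 2.0
            iy[y][x] = (img[y + 1][x] - img[y - 1][x]) / 2.0

    score = [[0.0] * w for _ in range(h)]
    for y in range(1, h - 1):
        for x in range(1, w - 1):
            # Structure tensor summed over a 3x3 window around the pixel.
            sxx = sxy = syy = 0.0
            for dy in (-1, 0, 1):
                for dx in (-1, 0, 1):
                    gx, gy = ix[y + dy][x + dx], iy[y + dy][x + dx]
                    sxx += gx * gx
                    sxy += gx * gy
                    syy += gy * gy
            det = sxx * syy - sxy * sxy
            trace = sxx + syy
            score[y][x] = det - k * trace * trace
    return score


# Synthetic 16x16 image: dark background, bright square in rows/cols 4..11.
image = [[255.0 if 4 <= y <= 11 and 4 <= x <= 11 else 0.0 for x in range(16)]
         for y in range(16)]
scores = harris_response(image)
corner = scores[4][4]   # top-left corner of the square
edge = scores[4][8]     # middle of the top edge
flat = scores[1][1]     # empty background
```

On this toy image the score is positive at the corner, negative along the straight edge, and zero on the flat background, which is why corners make more reliable landmarks than plain edges.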

Feature Matching

It tracks those features from frame to frame. Matching them reveals how each point has shifted in the image as the camera moves.
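
As a rough sketch of matching, assume each feature carries a binary descriptor (as ORB-style features do) stored as an integer. The helper names, the toy 8-bit descriptors, and the 0.7 ratio threshold below are all assumptions for illustration.

```python
def hamming(a, b):
    """Number of differing bits between two binary descriptors stored as ints."""
    return bin(a ^ b).count("1")


def match_descriptors(desc_prev, desc_curr, ratio=0.7):
    """Match each descriptor in desc_prev to its nearest neighbour in desc_curr.

    A match is kept only if the best distance is clearly smaller than the
    second-best one (a ratio test), which discards ambiguous matches.
    Returns a list of (index_prev, index_curr) pairs.
    """
    matches = []
    for i, d in enumerate(desc_prev):
        ranked = sorted((hamming(d, c), j) for j, c in enumerate(desc_curr))
        if not ranked:
            continue
        best_dist, best_j = ranked[0]
        if len(ranked) == 1 or best_dist < ratio * ranked[1][0]:
            matches.append((i, best_j))
    return matches


# Toy 8-bit descriptors; real binary descriptors are much longer.
prev_desc = [0b11110000, 0b00001111, 0b10101010]
curr_desc = [0b00001110, 0b11110001, 0b01100110]
matches = match_descriptors(prev_desc, curr_desc)
```

Here the first two features each find one clearly closest partner, while the third is about equally close to several candidates and is dropped rather than risk a wrong match.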

Motion Estimation

From the geometry of those matched features, it computes the robot's rotation and translation, that is, its full 6-DoF motion.
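
To make the idea concrete, here is a deliberately simplified planar (3-DoF) sketch: it assumes the matched landmarks are already available as metric 2D points in the robot's frame at both instants, and fits the least-squares rotation and translation between them. A real pipeline works in 6-DoF from image measurements; the function name and test values are assumptions.

```python
import math


def estimate_rigid_2d(src, dst):
    """Least-squares rotation angle and translation mapping src points onto dst.

    src and dst are equal-length lists of (x, y) tuples for matched landmarks.
    Returns (theta, tx, ty) such that dst ~= R(theta) * src + (tx, ty).
    """
    n = len(src)
    if n < 2 or n != len(dst):
        raise ValueError("need at least two matched point pairs")
    # Centroids of both point sets.
    sx = sum(p[0] for p in src) / n
    sy = sum(p[1] for p in src) / n
    dx = sum(q[0] for q in dst) / n
    dy = sum(q[1] for q in dst) / n
    # Accumulate dot and cross products of the centred points.
    dot = cross = 0.0
    for (px, py), (qx, qy) in zip(src, dst):
        ax, ay = px - sx, py - sy
        bx, by = qx - dx, qy - dy
        dot += ax * bx + ay * by
        cross += ax * by - ay * bx
    theta = math.atan2(cross, dot)
    c, s = math.cos(theta), math.sin(theta)
    # Translation that aligns the rotated source centroid with the target one.
    tx = dx - (c * sx - s * sy)
    ty = dy - (s * sx + c * sy)
    return theta, tx, ty


# Synthetic check: rotate by 0.3 rad and shift by (0.5, -0.2), then recover it.
true_theta, true_t = 0.3, (0.5, -0.2)
prev_pts = [(1.0, 0.0), (0.0, 2.0), (-1.0, -1.0), (3.0, 1.0)]
curr_pts = [
    (math.cos(true_theta) * x - math.sin(true_theta) * y + true_t[0],
     math.sin(true_theta) * x + math.cos(true_theta) * y + true_t[1])
    for x, y in prev_pts
]
theta, tx, ty = estimate_rigid_2d(prev_pts, curr_pts)
```

Note that this recovers how the static landmarks appear to move in the robot's frame; the robot's own motion is the inverse of that transform.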

Local Optimization

'Bundle Adjustment' techniques refine the trajectory by minimizing reprojection error over a window of recent frames.
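
One common way to write the cost that bundle adjustment minimizes is sketched below. The notation is an assumption introduced here for illustration: $R_i, t_i$ are the rotation and translation of frame $i$ in the window, $X_j$ is the 3D position of landmark $j$, $u_{ij}$ is the pixel where landmark $j$ was observed in frame $i$, $\mathcal{V}(i)$ is the set of landmarks visible in frame $i$, $K$ is the camera intrinsic matrix, and $\pi$ is the perspective division.

$$
\min_{\{R_i,\,t_i\},\,\{X_j\}} \; \sum_{i} \sum_{j \in \mathcal{V}(i)} \left\| \, u_{ij} - \pi\!\left( K \left( R_i X_j + t_i \right) \right) \right\|^2,
\qquad
\pi\!\left( [x,\, y,\, z]^\top \right) = \left[ \tfrac{x}{z},\; \tfrac{y}{z} \right]^\top
$$

Each term is the squared pixel distance between where a landmark was actually seen and where the current pose and landmark estimates predict it should appear.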

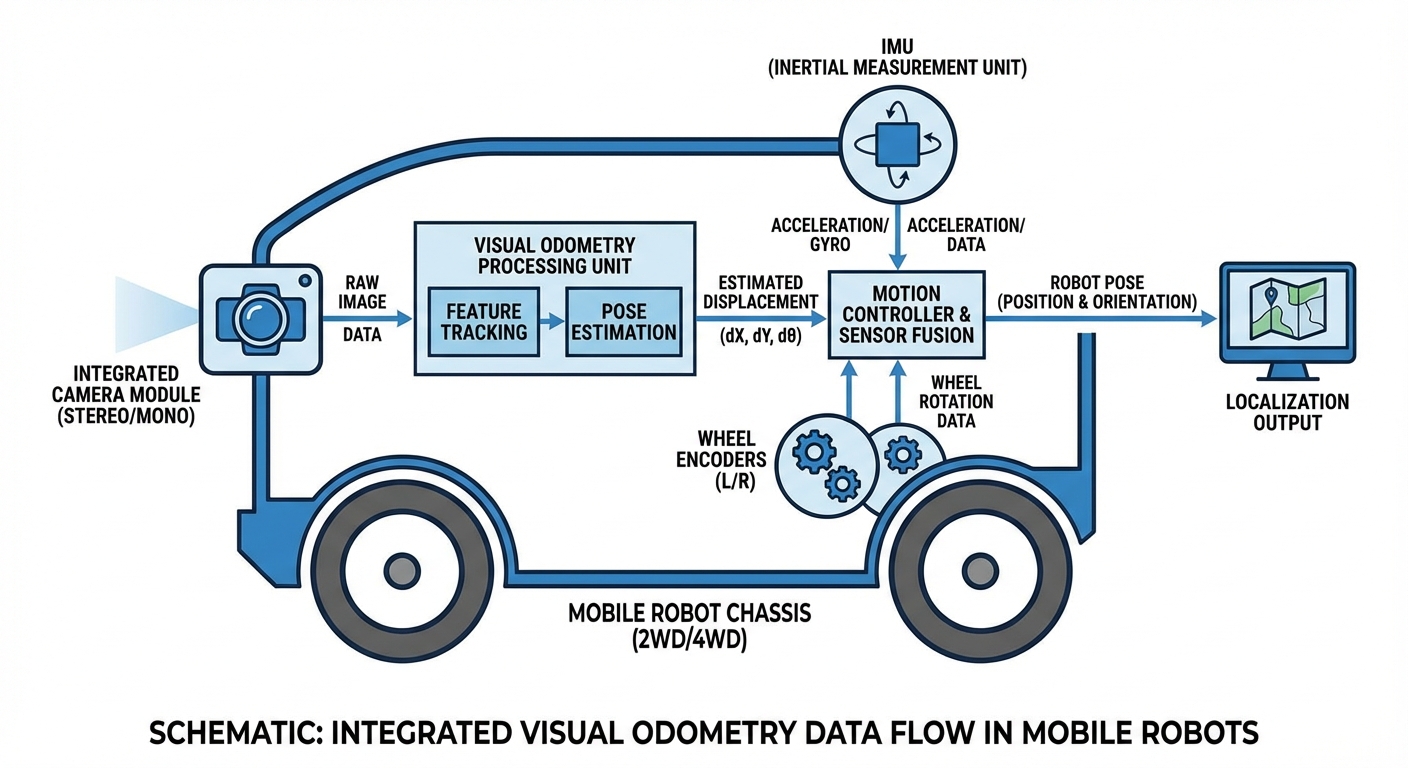

Sensor Fusion (VIO)

For extra robustness, pair VO with IMU data (Visual-Inertial Odometry) to cope with fast motion or low-texture scenes.

Drift Correction

VO accumulates error over time, so pair it with loop closure or map-based methods to pull the estimate back to the true position.

How It Works: The Pipeline

Visual Odometry works by 'dead reckoning': it measures relative changes in pose rather than absolute position the way GPS does. It starts with the AGV's camera capturing a steady stream of images of the environment.

The onboard computer extracts sparse features, such as high-contrast corners or edges. As the robot moves, these shift in the camera view. The geometry of their movement from one frame to the next yields the robot's velocity and path.

All of this runs in milliseconds per frame, giving real-time position updates. That matters for warehouse robots in tight aisles, where wheels slip and racks and ceilings block GPS.
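
The dead-reckoning step described above can be sketched as chaining relative motions into a world-frame trajectory. This is a planar simplification; the `compose` and `integrate` helpers and the square test path are assumptions for illustration.

```python
import math


def compose(pose, delta):
    """Apply a relative motion, expressed in the robot's own frame, to a world pose.

    pose  = (x, y, heading) in the world frame
    delta = (forward, left, turn) measured between two consecutive frames
    """
    x, y, th = pose
    dx, dy, dth = delta
    x += dx * math.cos(th) - dy * math.sin(th)
    y += dx * math.sin(th) + dy * math.cos(th)
    # Wrap the heading back into (-pi, pi].
    th = math.atan2(math.sin(th + dth), math.cos(th + dth))
    return (x, y, th)


def integrate(deltas, start=(0.0, 0.0, 0.0)):
    """Chain relative motions into a trajectory (list of world poses)."""
    trajectory = [start]
    for d in deltas:
        trajectory.append(compose(trajectory[-1], d))
    return trajectory


# Drive a 1 m square: move 1 m forward, then turn 90 degrees left, four times.
square = [(1.0, 0.0, math.pi / 2)] * 4
path = integrate(square)
end_x, end_y, end_heading = path[-1]
```

With perfect relative measurements the square closes and the robot ends where it started. Because every new pose is built on the previous one, any error in a single step is carried into all later poses.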

Real-World Applications

Dynamic Warehousing

AGVs with VO cope with ever-changing layouts without relying on guide tape or floor markers.

Service Robotics

Cleaning and delivery robots in hospitals and hotels use VO in long corridors, where LiDAR sees repetitive geometry or too little structure to localize.

Last-Mile Delivery

Sidewalk delivery robots rely on VO on tricky outdoor paths and to ride out GPS errors caused by tall buildings.

Inspection Drones

Drones inspecting tunnels, mines, or the undersides of bridges rely on VO to hold position without GPS.

Frequently Asked Questions

What sets Visual Odometry (VO) apart from V-SLAM?

VO only tracks the trajectory incrementally, frame to frame. V-SLAM builds on that by maintaining a full map of the environment and using loop closures to correct long-term drift.

How does Monocular VO differ from Stereo VO?

Monocular VO uses one camera and can't recover absolute scale without extra information such as a speed measurement, so its scale drifts. Stereo VO uses two cameras and triangulates depth, giving true scale and better accuracy, at the cost of more compute.
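
For a rectified stereo pair, the standard triangulation relation is Z = f * B / d: depth equals focal length times baseline divided by disparity. A small sketch follows; the function name and the example numbers (700 px focal length, 12 cm baseline) are assumptions.

```python
def stereo_depth(disparity_px, focal_px, baseline_m):
    """Depth in metres of a point seen by a rectified stereo pair: Z = f * B / d."""
    if disparity_px <= 0:
        raise ValueError("disparity must be positive for a point in front of the cameras")
    return focal_px * baseline_m / disparity_px


# A feature that shifts 21 px between the left and right images.
depth = stereo_depth(disparity_px=21.0, focal_px=700.0, baseline_m=0.12)
# The same feature with a one-pixel matching error in the disparity.
depth_off_by_one = stereo_depth(disparity_px=20.0, focal_px=700.0, baseline_m=0.12)
```

Because the baseline is a known physical distance, the result comes out in metres, which is exactly the scale information a single camera lacks. Depth is inversely proportional to disparity, so a one-pixel matching error matters more the farther away the point is.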

What is "Drift" and how do you manage it?

Drift is the buildup of small errors that makes the position estimate wander away from reality. We fight it with 'Loop Closure' (recognizing previously visited places) or by fusing VO with LiDAR or occasional GPS fixes.
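
To get a feel for how quickly small errors add up, here is a toy simulation. It assumes the robot truly drives straight while the estimator commits a small constant heading error on every update; the function name and bias values are assumptions, and real drift is noisier than this.

```python
import math


def endpoint_error(steps, step_len, heading_bias_rad):
    """Distance between the dead-reckoned endpoint and the true one.

    The true path is a straight line along x; the estimate adds a small,
    constant heading error on every frame-to-frame update.
    """
    x = y = heading = 0.0
    for _ in range(steps):
        heading += heading_bias_rad
        x += step_len * math.cos(heading)
        y += step_len * math.sin(heading)
    return math.hypot(x - steps * step_len, y)


# 100 updates of 0.1 m each (10 m travelled) at three per-update bias levels.
errors = {deg: endpoint_error(100, 0.1, math.radians(deg)) for deg in (0.0, 0.05, 0.1)}
```

In this setup a bias of just 0.1 degrees per update leaves the estimate close to 0.9 m off after only 10 m of travel, which is why an external correction such as loop closure is needed on longer runs.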

Does Visual Odometry work in low-light conditions?

Regular cameras struggle in dim light or darkness because there are no features to track. IR illumination or HDR sensors help, but in pitch black LiDAR is the better choice.

Why use Visual Odometry instead of Wheel Odometry?

Wheel odometry suffers from wheel slip, wheel wear, and uneven floors, so it drifts quickly. VO observes the environment directly, so slip doesn't affect it, but it needs more processing power.

What hardware is required to implement VO?

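You need a camera (global shutter preferred, since it avoids rolling-shutter distortion while moving), an embedded computer such as an NVIDIA Jetson or, for lighter workloads, a Raspberry Pi 4 or newer, and an IMU if you want fusion (VIO).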

How does VO handle dynamic environments with moving people?

Moving objects corrupt the estimate if they're treated as static. Robust VO uses RANSAC outlier rejection or semantic segmentation to discard features on people or forklifts.
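
A stripped-down sketch of the RANSAC idea follows. To keep it short, it assumes the camera motion shows up as a single 2D shift shared by all static features (a real system fits a full geometric model instead); the function name, threshold, and data are assumptions.

```python
import random


def ransac_translation(matches, threshold=1.0, iterations=100, seed=0):
    """Estimate the dominant 2D feature shift and flag matches that disagree.

    matches is a list of ((x0, y0), (x1, y1)) pixel pairs from two frames.
    Returns ((dx, dy), inlier_flags).
    """
    if not matches:
        raise ValueError("need at least one match")
    rng = random.Random(seed)
    best_flags = [False] * len(matches)
    for _ in range(iterations):
        # A pure translation needs only one match as its minimal sample.
        (x0, y0), (x1, y1) = rng.choice(matches)
        dx, dy = x1 - x0, y1 - y0
        flags = [
            (bx - ax - dx) ** 2 + (by - ay - dy) ** 2 <= threshold ** 2
            for (ax, ay), (bx, by) in matches
        ]
        if sum(flags) > sum(best_flags):
            best_flags = flags
    # Refine the model by averaging over every inlier of the best hypothesis.
    inliers = [m for m, ok in zip(matches, best_flags) if ok]
    dx = sum(b[0] - a[0] for a, b in inliers) / len(inliers)
    dy = sum(b[1] - a[1] for a, b in inliers) / len(inliers)
    return (dx, dy), best_flags


# Six background features shifted by roughly (5, -2) px, plus three features
# on a moving forklift that shift by (-20, 8) px.
static = [((10, 10), (15.2, 8.1)), ((40, 25), (44.9, 22.8)), ((70, 60), (75.1, 58.0)),
          ((20, 80), (25.0, 77.9)), ((90, 15), (94.8, 13.2)), ((55, 45), (60.1, 43.0))]
moving = [((30, 30), (10, 38)), ((32, 34), (12, 42)), ((35, 31), (15, 39))]
shift, flags = ransac_translation(static + moving)
```

The background features agree with each other and win the vote, so the forklift features end up flagged as outliers and never pull on the motion estimate.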

Is Visual Odometry computationally expensive?

Yes. Processing video in real time takes substantial CPU/GPU resources. 'Sparse' VO tracks a limited set of feature points to save compute; 'Dense' VO uses every pixel and demands more powerful hardware.

What is Visual-Inertial Odometry (VIO)?

VIO tightly fuses camera data with an IMU (accelerometer + gyroscope). The IMU covers fast motions where images blur; the camera corrects the IMU's drift, so the combination is more robust than either sensor alone.
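
The complementary roles of the two sensors can be illustrated with a basic complementary filter on heading. This is a much simpler, loosely coupled stand-in and not how tightly coupled VIO is implemented; the function name, the `alpha` weight, and the simulated gyro bias are assumptions, and angle wrap-around is ignored.

```python
def fuse_heading(gyro_rates, vo_headings, dt, alpha=0.98, start=0.0):
    """Blend integrated gyro rate with (optional) visual heading measurements.

    gyro_rates  : angular rate in rad/s for every time step
    vo_headings : heading from VO in rad for every step, or None when the
                  camera gave no usable estimate (blur, blank wall, ...)
    """
    heading = start
    history = []
    for rate, vo in zip(gyro_rates, vo_headings):
        predicted = heading + rate * dt  # IMU prediction, always available
        if vo is None:
            heading = predicted          # coast on the gyro alone
        else:
            # The camera measurement pulls accumulated gyro drift back.
            heading = alpha * predicted + (1.0 - alpha) * vo
        history.append(heading)
    return history


# Stationary robot, gyro with a constant 0.01 rad/s bias, 20 s at 100 Hz.
steps, dt, bias = 2000, 0.01, 0.01
gyro = [bias] * steps
gyro_only = fuse_heading(gyro, [None] * steps, dt)
fused = fuse_heading(gyro, [0.0] * steps, dt)
```

In this simulation the gyro-only heading drifts to about 0.2 rad, while the fused heading stays below 0.01 rad. When the camera drops out, the filter simply coasts on the gyro until vision returns.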

Can VO work in texture-less environments?

VO needs texture: edges, patterns. Blank walls or glass cause trouble. VIO can bridge short gaps, or you can use structured-light cameras that project their own pattern.

Is calibration necessary?

Absolutely. Accurate camera intrinsics (focal length, distortion) and extrinsics (the camera's pose on the robot) are essential for accurate paths.
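
To see why intrinsics matter, consider the basic pinhole projection, sketched here without lens distortion. The function name and every number (a 600 px focal length, a 640x480 principal point, a 5% calibration error) are assumptions for illustration.

```python
def project(point_cam, fx, fy, cx, cy):
    """Project a 3D point in the camera frame (metres) to pixel coordinates."""
    x, y, z = point_cam
    if z <= 0:
        raise ValueError("point must be in front of the camera")
    return (fx * x / z + cx, fy * y / z + cy)


# A landmark 2 m ahead of the camera and 0.5 m to the right.
landmark = (0.5, 0.0, 2.0)
u_true, v_true = project(landmark, fx=600.0, fy=600.0, cx=320.0, cy=240.0)
# The same landmark predicted with a focal length that is 5% too large.
u_bad, v_bad = project(landmark, fx=630.0, fy=630.0, cx=320.0, cy=240.0)
pixel_error = abs(u_bad - u_true)
```

A 5% focal-length error moves the predicted pixel by several pixels in this example. The motion estimator tries to explain that offset as camera movement, so calibration errors turn directly into pose errors.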

How does VO compare to LiDAR-based localization?

LiDAR is generally more precise and works in the dark, but it costs more and draws more power. Cameras are cheap and can also read signs, which makes VO a cost-effective choice for many AGVs.