Stereo Vision Systems

Give your Automated Guided Vehicles (AGVs) true 3D depth perception by copying how human binocular vision works. Stereo systems deliver affordable, high-res spatial awareness that's well suited to navigating messy, unstructured spaces.

Core Concepts

Binocular Baseline

The physical gap between the two camera sensors. A bigger baseline boosts depth accuracy far away, but it also pushes out the closest distance you can sense.

Disparity Mapping

How much an object's pixel position shifts between the left and right images. Bigger disparity means it's closer to the robot.

Point Cloud Generation

Turning those disparity maps into a full 3D point cloud (X, Y, Z coords) that maps out the surfaces of objects in the robot's way.
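Here's a rough sketch of that conversion, assuming a rectified camera pair and a simple pinhole model. The function name and the parameters (`fx`, `fy`, `cx`, `cy`, `baseline_m`) are made up for illustration and don't come from any particular SDK.

```python
def disparity_to_points(disparity, fx, fy, cx, cy, baseline_m):
    """Back-project a disparity map (list of rows, in pixels) to (X, Y, Z) in meters.

    Assumes a rectified pair and a pinhole model: Z = fx * B / d.
    """
    points = []
    for v, row in enumerate(disparity):
        for u, d in enumerate(row):
            if d <= 0:
                continue  # no valid match at this pixel, so skip it
            z = fx * baseline_m / d      # depth along the optical axis
            x = (u - cx) * z / fx        # offset to the right of the optical center
            y = (v - cy) * z / fy        # offset below the optical center
            points.append((x, y, z))
    return points


# Tiny 2x2 disparity map with two valid pixels (illustrative values only).
cloud = disparity_to_points([[0, 10], [20, 0]], fx=100.0, fy=100.0,
                            cx=0.5, cy=0.5, baseline_m=0.1)
print(cloud)
```

Real pipelines usually do this in one vectorized step on the GPU or FPGA, but the per-pixel math is the same idea.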

Epipolar Geometry

The handy geometry trick that narrows your search for matching points from the full 2D image down to a 1D search along a single epipolar line.
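To make the 1D search concrete, here's a minimal sketch that assumes the images are already rectified, so each epipolar line is just an image row. It uses a plain sum-of-absolute-differences (SAD) cost, which is one common choice and not necessarily what any given camera runs. `match_along_row` and its parameters are hypothetical names.

```python
def match_along_row(left_row, right_row, u_left, half_win=1, max_disp=8):
    """Find the disparity of pixel u_left by searching only along the same row.

    Compares a small window from the left row against windows shifted
    0..max_disp pixels to the left in the right row, using SAD cost.
    """
    lo, hi = u_left - half_win, u_left + half_win + 1
    if lo < 0 or hi > len(left_row):
        return None  # window would fall off the image
    patch = left_row[lo:hi]
    best_d, best_cost = None, float("inf")
    for d in range(max_disp + 1):
        if lo - d < 0:
            break  # shifted window would fall off the right image
        candidate = right_row[lo - d:hi - d]
        cost = sum(abs(a - b) for a, b in zip(patch, candidate))
        if cost < best_cost:
            best_d, best_cost = d, cost
    return best_d


# A bright feature at column 6 in the left row shows up at column 3 in the right row.
left = [10, 10, 10, 10, 10, 90, 200, 90, 10, 10]
right = [10, 10, 90, 200, 90, 10, 10, 10, 10, 10]
print(match_along_row(left, right, u_left=6))
```

Without the epipolar constraint, the same search would have to scan every row as well, which multiplies the work by the image height.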

Active Stereo

Systems that shine a textured IR pattern on the scene to help match points on bland surfaces like plain walls or shiny floors.

Calibration

The key step of nailing down each camera's intrinsic params (like focal length and principal point) and the extrinsic ones (the rotation and translation between the two cameras) for spot-on measurements.
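To see where those calibration numbers actually get used, here's a small sketch that projects one 3D point into both cameras. It assumes an idealized rectified rig (identical intrinsics, no rotation, pure sideways translation equal to the baseline) and ignores lens distortion. All names and values are illustrative.

```python
def project(point_xyz, fx, fy, cx, cy):
    """Intrinsics: map a 3D point in the camera frame to pixel coordinates."""
    x, y, z = point_xyz
    return (fx * x / z + cx, fy * y / z + cy)


def to_right_camera(point_xyz, baseline_m):
    """Extrinsics for an ideal rectified rig: no rotation, shift along X by the baseline."""
    x, y, z = point_xyz
    return (x - baseline_m, y, z)


# Hypothetical calibration values, not taken from a real camera.
fx = fy = 600.0
cx, cy = 320.0, 240.0
baseline_m = 0.1

point = (0.2, 0.1, 2.0)  # meters, in the left camera frame
u_left, v_left = project(point, fx, fy, cx, cy)
u_right, v_right = project(to_right_camera(point, baseline_m), fx, fy, cx, cy)
print("left pixel:", (u_left, v_left))
print("right pixel:", (u_right, v_right))
print("disparity:", u_left - u_right)
```

If any of these parameters is off, the predicted pixel positions shift, and so does every depth value computed from them.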

How It Works: Triangulation

Stereo vision is all about triangulation. Think how your brain uses the tiny differences between your left and right eyes to judge depth—stereo cams snap two images from slightly offset spots at the same time.

The onboard processor spots matching features in both pics. It measures the horizontal shift (disparity) between them, factors in the camera's focal length and baseline, and calculates the depth for every matched pixel.
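For a rectified pair, that calculation boils down to the standard relation Z = f * B / d, with f the focal length in pixels, B the baseline, and d the disparity in pixels. A minimal sketch follows; the function name and the sample numbers are illustrative assumptions.

```python
def depth_from_disparity(disparity_px, focal_px, baseline_m):
    """Depth in meters from disparity, using Z = f * B / d (rectified stereo)."""
    if disparity_px <= 0:
        return float("inf")  # zero disparity means "very far away" or no match
    return focal_px * baseline_m / disparity_px


# Illustrative rig: 700 px focal length, 12 cm baseline.
for d in (84.0, 42.0, 8.4):
    print(f"disparity {d:5.1f} px -> depth {depth_from_disparity(d, 700.0, 0.12):.2f} m")
```

Notice that halving the disparity doubles the depth, which is why small matching errors matter more for far-away objects.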

Unlike LiDAR's laser time-of-flight scans, stereo vision spits out dense depth maps that line up pixel-for-pixel with color (RGB) data. That lets AI figure out not just where something is, but what it is.

Real-World Applications

Logistics & Warehousing

Perfect for spotting pallet pockets and dodging obstacles. Stereo lets forklifts line up dead-on with racks across a wide range of lighting conditions.

Last-Mile Delivery

Sidewalk delivery bots use stereo to catch curbs, people, and bumpy ground. Passive stereo shines in bright sun where IR might glitch out.

Agricultural Robotics

In fields, there's tons of texture but tricky shapes. Stereo helps bots weave through crop rows and pick out ripe stuff for harvesting.

Service & Hospitality

Waiter bots lean on stereo to spot tables, chairs, and folks on the move in busy spots, keeping interactions safe and touch-free.

Frequently Asked Questions

What's the big win of stereo vision over LiDAR for AGVs?

Cost and smarts are the stars here. Stereo cams are way cheaper than 3D LiDAR and pair rich color data right with depth—great for classifying stuff, like telling a person from a cardboard box.

Does Stereo Vision work in complete darkness?

Regular passive stereo needs some light to pick out features. But 'active stereo' rigs project an IR pattern to create fake texture, working great even in pitch black.

How does the "baseline" affect the robot's performance?

The baseline's just the distance between the lenses. Wider means sharper depth at distance, but a bigger blind zone up close. Narrow keeps close-range sharp but fuzzes out farther away.
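You can put rough numbers on that trade-off. The sketch below uses two textbook approximations: depth error grows as Z^2 * (disparity error) / (f * B), and the closest measurable distance is f * B / (max disparity the matcher searches). The focal length, disparity error, and search range are assumed values for illustration only.

```python
def depth_error(z_m, focal_px, baseline_m, disparity_err_px=0.25):
    """Approximate depth uncertainty: dZ ~= Z^2 * dd / (f * B)."""
    return (z_m ** 2) * disparity_err_px / (focal_px * baseline_m)


def min_depth(focal_px, baseline_m, max_disparity_px):
    """Closest distance the matcher can see: Z_min = f * B / d_max."""
    return focal_px * baseline_m / max_disparity_px


focal_px = 700.0        # assumed focal length in pixels
max_disparity_px = 128  # assumed disparity search range

for baseline_m in (0.05, 0.20):
    print(f"baseline {baseline_m:.2f} m: "
          f"min depth {min_depth(focal_px, baseline_m, max_disparity_px):.2f} m, "
          f"error at 5 m about {depth_error(5.0, focal_px, baseline_m):.3f} m")
```

With everything else held fixed, a 4x wider baseline cuts the depth error at a given distance by 4x, and pushes the near limit out by the same factor.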

What are the computational requirements?

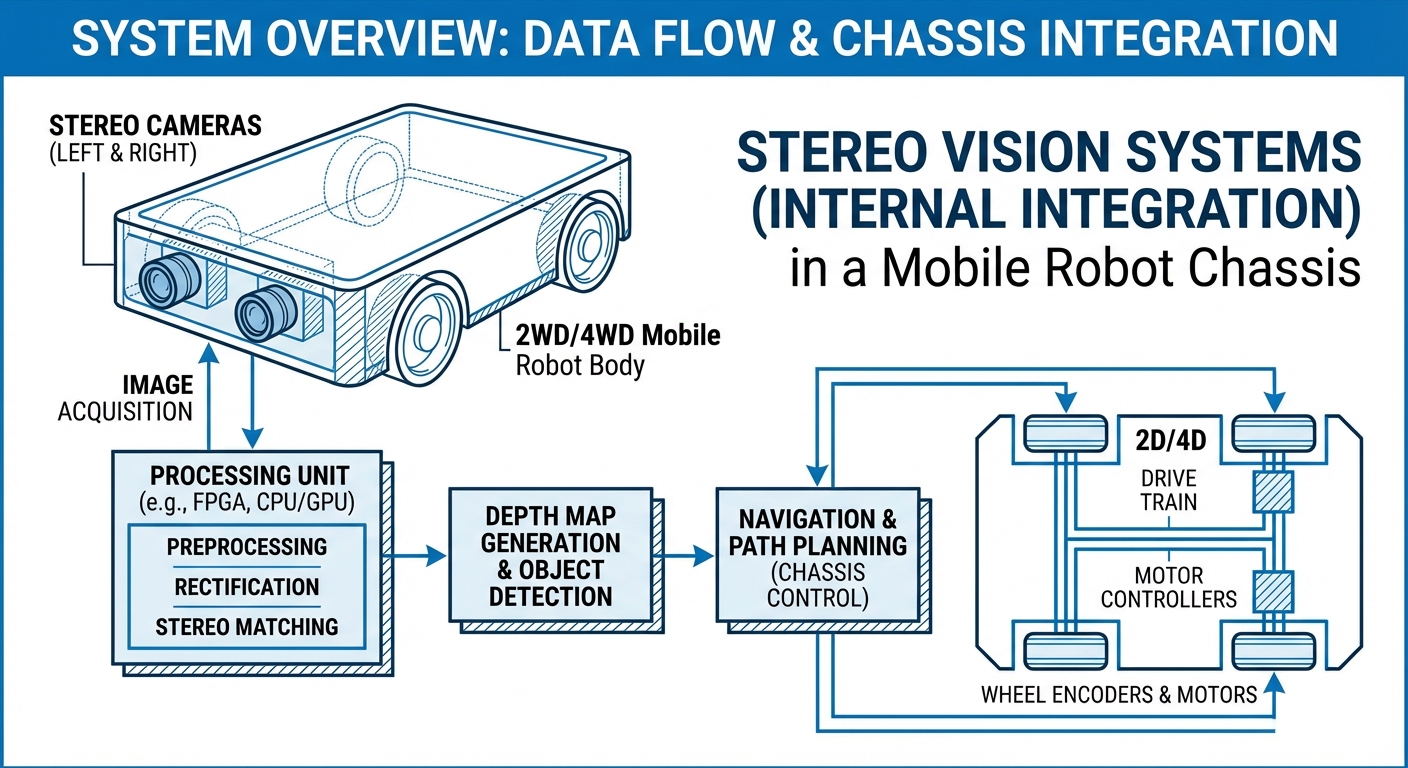

Stereo matching chews up compute power. Modern CPUs can swing it, but most AGV setups use cams with built-in FPGAs or hook up to embedded GPU modules like NVIDIA Jetson for real-time disparity maps with low latency.

Why does stereo vision struggle with white walls?

Stereo algos hunt for standout features—edges, textures, corners—between left and right views. A blank white wall? No matches, so depth fails unless you add an active projector.
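A toy example shows why. The sketch below scores candidate disparities along one image row with a simple sum-of-absolute-differences cost (an assumed, simplified matcher). On a textured row there's one clear winner; on a uniform "white wall" row every candidate ties, so there's nothing to pick. The helper names are hypothetical.

```python
def sad_costs(left_row, right_row, u_left, half_win=1, max_disp=4):
    """SAD matching cost for each candidate disparity 0..max_disp along one row."""
    lo, hi = u_left - half_win, u_left + half_win + 1
    patch = left_row[lo:hi]
    costs = []
    for d in range(max_disp + 1):
        if lo - d < 0:
            break  # shifted window would leave the image
        candidate = right_row[lo - d:hi - d]
        costs.append(sum(abs(a - b) for a, b in zip(patch, candidate)))
    return costs


def has_unique_match(costs):
    """True only if exactly one disparity achieves the lowest cost."""
    return costs.count(min(costs)) == 1


textured_left = [10, 10, 10, 10, 10, 90, 200, 90, 10, 10]
textured_right = [10, 10, 90, 200, 90, 10, 10, 10, 10, 10]
white_wall = [200] * 10  # same flat intensity in both views

print("textured:", has_unique_match(sad_costs(textured_left, textured_right, 6)))
print("white wall:", has_unique_match(sad_costs(white_wall, white_wall, 6)))
```

A projected IR pattern fixes this by painting texture onto the wall, so the cost curve gets a single clear minimum again.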

How often does a stereo system need calibration?

Factory calibration should hold up well, but bumps and vibes from AGV life can knock the lenses out of alignment. Tough industrial stereo cams use solid metal frames to fight that, but you may still need to recalibrate after a collision.

What is the typical range of a stereo camera?

It varies by baseline and res. A compact robotics cam might nail 0.2 m to 5 m. Bigger outdoor rover setups? Up to 20 m or 30 m, but depth error grows roughly with the square of the distance.

Can stereo vision be used for SLAM?

Yep, visual SLAM (vSLAM) loves it. Stereo feeds the 3D layout for mapping while tracking the bot's moves—call it Stereo-Inertial Odometry when you mix in IMUs.

How does sunlight affect stereo vision?

Passive stereo beats ToF or structured light in harsh sun, since it works off ambient light instead of a projected IR signal that strong sunlight can swamp. That's why it's a top pick for outdoor delivery bots.

Is software integration difficult?

Most new stereo cams have SDKs for C++ and Python, plus built-in ROS/ROS 2 support. ROS packages like `stereo_image_proc` handle rectification, disparity, and point clouds for you, so they're easy to whip up.