State Estimation

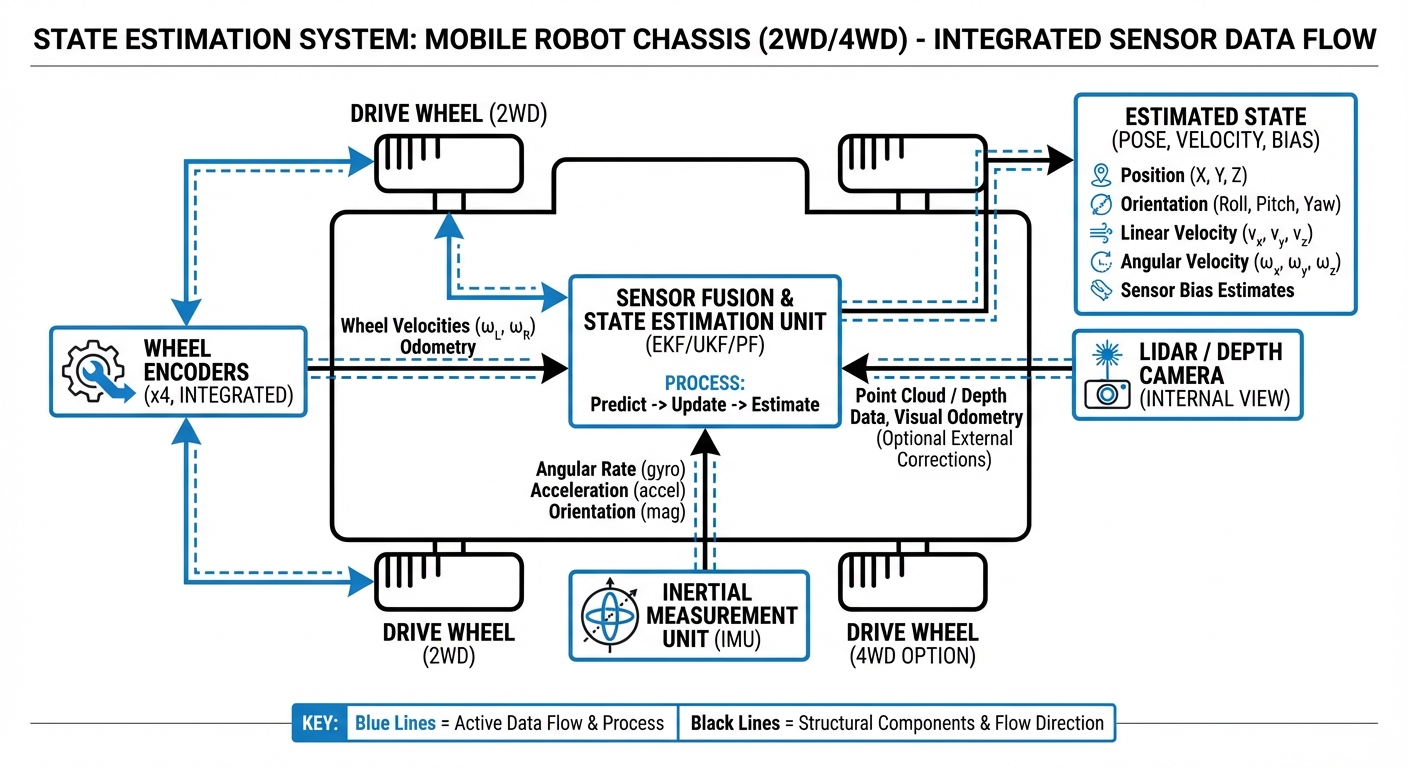

State estimation is the math behind autonomous navigation that lets AGVs answer 'Where am I?' in real time. By blending noisy sensor data with control inputs, it produces accurate estimates of position, orientation, and velocity so the vehicle can move safely.

Core Concepts

Sensor Fusion

Sensor fusion combines data from many sources, such as IMUs, wheel encoders, and LiDAR, to reduce uncertainty. Because each sensor fails in different ways, the combined estimate is more reliable than any single sensor on its own.
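The simplest form of fusion is an inverse-variance weighted average of independent readings of the same quantity. The sketch below assumes each sensor's error is independent and Gaussian with a known variance; the function name and the sensor values are hypothetical, chosen only to illustrate the idea.

```python
def fuse(measurements):
    """Inverse-variance weighted fusion of independent scalar measurements.

    measurements: list of (value, variance) pairs estimating the same quantity.
    Returns (fused_value, fused_variance).
    """
    if not measurements:
        raise ValueError("need at least one measurement")
    # Each reading is weighted by 1/variance: more reliable sensors count more.
    info = sum(1.0 / var for _, var in measurements)
    fused_value = sum(val / var for val, var in measurements) / info
    fused_variance = 1.0 / info
    return fused_value, fused_variance


# Hypothetical readings of the robot's x position (metres).
wheel_odometry = (4.20, 0.04)  # drifting source, larger variance
lidar_match = (4.00, 0.01)     # scan match against the map, tighter variance
x, var = fuse([wheel_odometry, lidar_match])
print(f"fused x = {x:.2f} m, variance = {var:.3f}")
```

With these numbers the fused variance (0.008) is smaller than either input's, which is what "better than any single sensor" means in practice.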

Probabilistic Modeling

Robots never know their state with 100% certainty. Instead, we treat position as a probability distribution (typically a Gaussian), so the system stays safe and sensible even when the data is noisy.

Odometry (Dead Reckoning)

Odometry estimates changes in position by tracking wheel rotations. It is very accurate over short distances, but wheel slippage causes drift that accumulates over time, so it needs regular corrections from other sensors.
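A minimal dead-reckoning sketch for a differential-drive robot, assuming the encoders report how far each wheel travelled during a time step. The function names, the 0.5 m wheel base, and the per-step distances are illustrative assumptions, not values from a specific vehicle.

```python
import math


def integrate_odometry(pose, d_left, d_right, wheel_base):
    """Dead-reckon a differential-drive pose (x, y, theta) over one time step.

    d_left, d_right: distance (m) each wheel travelled, from the encoders.
    wheel_base: distance (m) between the two wheels.
    """
    x, y, theta = pose
    d_center = (d_left + d_right) / 2.0          # distance covered by the robot centre
    d_theta = (d_right - d_left) / wheel_base    # heading change (rad)
    mid_theta = theta + d_theta / 2.0            # midpoint heading for this step
    x += d_center * math.cos(mid_theta)
    y += d_center * math.sin(mid_theta)
    new_theta = theta + d_theta
    theta = math.atan2(math.sin(new_theta), math.cos(new_theta))  # wrap to [-pi, pi]
    return (x, y, theta)


def ticks_to_metres(ticks, ticks_per_rev, wheel_radius):
    """Convert encoder ticks to wheel travel distance (m)."""
    return ticks / ticks_per_rev * 2.0 * math.pi * wheel_radius


# Hypothetical robot: 0.5 m wheel base; both wheels report 0.1 m per step.
pose = (0.0, 0.0, 0.0)
for _ in range(10):
    pose = integrate_odometry(pose, 0.1, 0.1, wheel_base=0.5)
```

If one wheel slips and its encoder over-reports by even a few percent, the resulting heading error feeds into every later x, y update, which is exactly the drift described above.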

The Kalman Filter

The standard algorithm for linear state estimation with Gaussian noise. It alternates between 'Predict' (where we think we've moved, based on control inputs) and 'Update' (what the sensors actually observe).
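A minimal one-dimensional sketch of the Predict/Update loop, assuming a robot moving along a line under a commanded velocity and a sensor that measures position directly. The class name, noise values, and measurements are illustrative assumptions, not tuned for any real AGV.

```python
class KalmanFilter1D:
    """Scalar Kalman filter for position along a line.

    Assumed model:
        predict: x_k = x_{k-1} + u * dt, variance grows by q
        update:  z_k = x_k + noise,      noise variance r
    """

    def __init__(self, x0, p0, q, r):
        self.x = x0  # state estimate (m)
        self.p = p0  # estimate variance (m^2)
        self.q = q   # process noise added per predict step (m^2)
        self.r = r   # measurement noise variance (m^2)

    def predict(self, u, dt):
        # Predict: move the estimate by the commanded velocity; uncertainty grows.
        self.x += u * dt
        self.p += self.q

    def update(self, z):
        # Update: blend in a measurement, weighted by the Kalman gain k.
        k = self.p / (self.p + self.r)
        self.x += k * (z - self.x)
        self.p *= (1.0 - k)
        return k


kf = KalmanFilter1D(x0=0.0, p0=1.0, q=0.01, r=0.04)
for z in [0.11, 0.19, 0.32, 0.38, 0.52]:  # hypothetical position fixes (m)
    kf.predict(u=1.0, dt=0.1)              # commanded 1 m/s, 10 Hz loop
    kf.update(z)
```

The gain k starts near 1 (the initial guess is very uncertain, so the first measurement dominates) and settles lower as the filter's own estimate becomes trustworthy.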

Pose (6 Degrees of Freedom)

The full state covers position (X, Y, Z) and orientation (Roll, Pitch, Yaw). For typical warehouse AGVs, we focus on X, Y, and Yaw, which together form the 2D pose.
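A sketch of the 2D pose (X, Y, Yaw) as a small value type, assuming motions are expressed in the robot's own frame (forward, left, turn). `Pose2D`, `compose`, and `wrap_angle` are hypothetical names for illustration.

```python
import math
from dataclasses import dataclass


def wrap_angle(a: float) -> float:
    """Wrap an angle to [-pi, pi) so yaw never accumulates past one turn."""
    return (a + math.pi) % (2.0 * math.pi) - math.pi


@dataclass(frozen=True)
class Pose2D:
    """Planar pose: position in metres, heading (yaw) in radians."""
    x: float
    y: float
    yaw: float

    def compose(self, delta: "Pose2D") -> "Pose2D":
        """Apply a motion `delta` expressed in this pose's own (robot) frame."""
        c, s = math.cos(self.yaw), math.sin(self.yaw)
        return Pose2D(
            x=self.x + c * delta.x - s * delta.y,
            y=self.y + s * delta.x + c * delta.y,
            yaw=wrap_angle(self.yaw + delta.yaw),
        )


start = Pose2D(0.0, 0.0, math.pi / 2)          # facing +Y
moved = start.compose(Pose2D(1.0, 0.0, 0.0))   # drive 1 m forward
```

Composing in the robot frame is why "1 m forward" lands at roughly (0, 1) here: the yaw rotates the step into world coordinates.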

Loop Closure

Loop closure happens when the robot recognizes a place it has visited before. That triggers a global correction that removes the drift accumulated along the estimated path.
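Production SLAM systems typically apply loop closures through pose-graph optimization. The sketch below is deliberately much simpler: it assumes drift built up evenly along the path and spreads the end-point error linearly back over the poses. The function name and the path values are hypothetical.

```python
def distribute_loop_closure(path, corrected_end):
    """Spread a loop-closure error linearly back along a 2D path.

    path: list of (x, y) odometry estimates; path[0] is assumed correct.
    corrected_end: where the last pose really is, from recognizing a known place.
    Returns a new path whose last point equals corrected_end.
    """
    n = len(path) - 1
    if n <= 0:
        return list(path)
    ex = corrected_end[0] - path[-1][0]
    ey = corrected_end[1] - path[-1][1]
    # Pose i receives fraction i/n of the end-point error (even-drift assumption).
    return [(x + ex * i / n, y + ey * i / n) for i, (x, y) in enumerate(path)]


# The robot drives a square back to its start, but odometry has drifted.
odom_path = [(0.0, 0.0), (5.0, 0.1), (5.2, 5.1), (0.3, 5.3), (0.4, 0.4)]
fixed = distribute_loop_closure(odom_path, corrected_end=(0.0, 0.0))
```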

How It Works: The Prediction-Correction Cycle

State estimation runs in a loop, often hundreds of times per second. Each cycle starts with the Prediction step, which uses control inputs (like 'I told the motors to go 1 meter/second') to estimate the new position.

Next, the Correction (Update) phase pulls in real-world measurements from LiDAR, cameras, or GPS. Suppose LiDAR sees a wall 2 meters away when the prediction said 2.5 meters. The filter blends the two into a weighted average, weighting each source according to its reliability as expressed by its covariance.
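Plugging the wall example into the scalar Kalman update makes the "weighted average" concrete. The standard deviations are assumed for illustration only: 0.3 m for the predicted distance and 0.1 m for the LiDAR reading.

```latex
\begin{aligned}
K &= \frac{\sigma_{\text{pred}}^2}{\sigma_{\text{pred}}^2 + \sigma_{\text{LiDAR}}^2}
   = \frac{0.09}{0.09 + 0.01} = 0.9 \\
\hat{d} &= d_{\text{pred}} + K\,(d_{\text{LiDAR}} - d_{\text{pred}})
   = 2.5 + 0.9\,(2.0 - 2.5) = 2.05\ \text{m} \\
\sigma_{\hat{d}}^2 &= (1 - K)\,\sigma_{\text{pred}}^2 = 0.1 \times 0.09 = 0.009\ \text{m}^2
\end{aligned}
```

The blended distance lands close to the LiDAR value because the LiDAR is trusted more, and its variance (0.009 m²) is lower than either input's.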

The result is an estimate more accurate than wheel odometry or any sensor alone, precise enough to move through tight corridors without collisions.

Real-World Applications

High-Throughput Warehousing

AMRs rely on precise state estimation to operate in busy areas shared with people. Reliable localization also makes it possible to place shelves closer together and narrow the aisles.

Precision Manufacturing

In car assembly, AGVs dock to conveyors with millimeter precision. Sensor fusion nails the alignment for safe heavy-load transfers.

Outdoor Logistics

Crossing between buildings? Robots lose LiDAR features and pivot to GPS/IMU fusion. State estimation smooths the handoff from indoor SLAM to outdoor nav.

Healthcare Delivery

Hospital robots must cope with glass walls and featureless corridors. Good filters reject noise from reflections, helping ensure medications reach the right room.

Frequently Asked Questions

What is the difference between Odometry and State Estimation?

Odometry is a form of dead reckoning that uses wheel encoders to measure changes in position. State estimation is the bigger picture: it takes odometry as one input and combines it with LiDAR, IMU, and other sensors to correct odometry's slip and drift.

Why can't we just use GPS for robot localization?

Standard GPS is typically accurate to only 2-5 meters, which is too coarse for warehouse aisles or doorways. GPS signals are also blocked or badly degraded indoors, so robots need local methods based on LiDAR or cameras.

What is the "Hidden Markov Model" assumption in robotics?

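It's the assumption that the next state depends only on the current state and the next control input, not on the robot's entire history. (Strictly, this is the Markov assumption; a hidden Markov model applies it to a state that can only be observed indirectly, through noisy sensors.) This shortcut keeps the math light enough for real-time onboard computation.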
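Written out, the assumption says the motion model needs only the previous state and the latest control. Combined with the matching assumption for sensors, that a measurement depends only on the current state, it yields the recursive Bayes filter that both Kalman and particle filters approximate. The notation is introduced here for illustration: x is the state, u the control input, z the measurement, and η a normalizing constant.

```latex
\begin{gather*}
p(x_t \mid x_{0:t-1},\, u_{1:t},\, z_{1:t-1}) = p(x_t \mid x_{t-1},\, u_t) \\
\mathrm{bel}(x_t) = \eta\, p(z_t \mid x_t) \int p(x_t \mid x_{t-1},\, u_t)\, \mathrm{bel}(x_{t-1})\, dx_{t-1}
\end{gather*}
```

Because each new belief is computed from the previous belief alone, the robot never has to store or reprocess its full history.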

Kalman Filter vs. Particle Filter: Which is better?

The Kalman Filter (and its nonlinear extension, the EKF) is fast and well suited to Gaussian noise, which makes it a good fit for quick, steady tracking. Particle Filters need more computation but excel at 'global localization' (where am I if totally lost?) because they track many possible positions at once.
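A toy particle filter for global localization, assuming a hypothetical 10 m corridor with doors at 2, 5, and 8 m and a sensor that only reports "door" or "no door". All names, noise levels, and the sensor model are illustrative assumptions. The point is that the particle set can hold several separate hypotheses, which a single Gaussian cannot.

```python
import math
import random

DOORS = [2.0, 5.0, 8.0]   # hypothetical door positions (m)
CORRIDOR_LENGTH = 10.0    # hypothetical corridor length (m)


def door_likelihood(x, sees_door, sigma=0.3):
    """P(reading | robot at x): high near a door when a door is seen."""
    d = min(abs(x - door) for door in DOORS)
    near = math.exp(-0.5 * (d / sigma) ** 2)
    return near if sees_door else 1.0 - 0.9 * near


def particle_filter_step(particles, move, sees_door, motion_noise=0.1):
    # Predict: shift every particle by the commanded motion plus noise,
    # clamped to the corridor.
    moved = [min(max(p + move + random.gauss(0.0, motion_noise), 0.0), CORRIDOR_LENGTH)
             for p in particles]
    # Weight: score each hypothesis against the observation.
    weights = [door_likelihood(p, sees_door) for p in moved]
    # Resample: draw a new set in proportion to the weights.
    return random.choices(moved, weights=weights, k=len(moved))


random.seed(0)
# Totally lost: spread particles uniformly along the corridor.
particles = [random.uniform(0.0, CORRIDOR_LENGTH) for _ in range(2000)]
after_first_door = particle_filter_step(particles, move=0.0, sees_door=True)
particles = particle_filter_step(after_first_door, move=3.0, sees_door=True)
particles = particle_filter_step(particles, move=3.0, sees_door=True)
estimate = sum(particles) / len(particles)
```

After the first door sighting the particles sit in three clusters (near 2, 5, and 8 m). Two more 3 m moves, each ending at a door, are consistent only with having started at the first door, so the cloud collapses near 8 m.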

How does wheel slip affect state estimation?

Wheel slip makes the encoders report motion that didn't happen, adding errors to the odometry. A good estimator detects this by cross-checking against IMU or visual data and reduces its trust in the encoders while the wheels are slipping.
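One simple way to "dial back trust" is to compare the heading rate implied by the wheel encoders with the gyroscope's yaw rate and inflate the encoder noise when they disagree. This is a sketch under assumed values: the function names, threshold, and inflation factor are hypothetical, not taken from any particular estimator.

```python
def encoder_yaw_rate(v_left, v_right, wheel_base):
    """Heading rate (rad/s) implied by the wheel speeds of a differential drive."""
    return (v_right - v_left) / wheel_base


def encoder_variance(enc_rate, gyro_rate, base_var=0.01,
                     slip_threshold=0.2, slip_inflation=100.0):
    """Variance to assign to wheel odometry for this step.

    If encoders and gyro disagree by more than slip_threshold (rad/s),
    assume slip and inflate the variance so the filter trusts encoders less.
    """
    if abs(enc_rate - gyro_rate) > slip_threshold:
        return base_var * slip_inflation
    return base_var


# Left wheel spins on a wet patch: encoders imply a turn the gyro doesn't see.
enc = encoder_yaw_rate(v_left=1.3, v_right=1.0, wheel_base=0.5)  # about -0.6 rad/s
var = encoder_variance(enc, gyro_rate=0.02)
```

The inflated variance then feeds the filter's update step, so the IMU and other sensors dominate until the wheels regain grip.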

What role does the IMU (Inertial Measurement Unit) play?

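IMUs provide high-rate acceleration and rotation-rate data. They are valuable over short intervals, fill the gaps between slower LiDAR or camera updates, and give a strong reference for pitch and roll, since the accelerometer senses the direction of gravity.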
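A common lightweight way to combine the gyro (smooth but drifting) with the accelerometer's gravity reading (noisy but drift-free) is a complementary filter. The document does not name this technique; it is shown here as one illustrative option. The axis convention (x forward, z up, level reading of +g on z), the function names, the 0.98 blend factor, and the simulated gyro bias are all assumptions.

```python
import math


def accel_pitch(ax, ay, az):
    """Pitch (rad) from the gravity direction seen by a static accelerometer."""
    return math.atan2(-ax, math.sqrt(ay * ay + az * az))


def complementary_pitch(pitch, gyro_rate, ax, ay, az, dt, alpha=0.98):
    """Blend integrated gyro rate (short-term) with accel pitch (long-term)."""
    gyro_estimate = pitch + gyro_rate * dt
    return alpha * gyro_estimate + (1.0 - alpha) * accel_pitch(ax, ay, az)


# Simulated robot parked on a 5-degree ramp; the gyro has a 0.01 rad/s bias.
g = 9.81
true_pitch = math.radians(5.0)
ax, ay, az = -g * math.sin(true_pitch), 0.0, g * math.cos(true_pitch)
pitch = 0.0
for _ in range(2000):  # 20 s at 100 Hz
    pitch = complementary_pitch(pitch, gyro_rate=0.01, ax=ax, ay=ay, az=az, dt=0.01)
```

Integrating the biased gyro alone would drift steadily; the small accelerometer share keeps pulling the estimate back toward the true tilt, leaving only a small constant offset from the bias.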

What is a covariance matrix and why does it matter?

The covariance matrix describes the uncertainty in the state estimate. Instead of just 'You're at X, Y', it says 'You're probably somewhere in this region around X, Y.' Without corrections, that region keeps growing as the robot moves.
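A one-dimensional sketch of how that region grows during pure dead reckoning, assuming each odometry step adds independent noise with a fixed variance (the 2 cm per-step figure is hypothetical). Variances of independent errors add, so the variance grows linearly and the 1-sigma radius grows with the square root of the number of steps.

```python
import math


def dead_reckon_sigma(steps, step_var=0.0004, initial_var=0.0):
    """Standard deviation (m) of position after `steps` uncorrected odometry steps.

    Each step adds independent noise of variance step_var (m^2).
    """
    return math.sqrt(initial_var + steps * step_var)


for n in (1, 100, 10000):
    print(n, round(dead_reckon_sigma(n), 3))
```

With these assumed numbers the 1-sigma radius is 0.02 m after 1 step, 0.2 m after 100, and 2 m after 10,000, which is why periodic corrections from LiDAR or other sensors are essential.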

How does State Estimation relate to SLAM?

SLAM (Simultaneous Localization and Mapping) builds on state estimation. Plain localization estimates position within a known map; SLAM builds the map and estimates the robot's position within it at the same time.

What happens in a "Kidnapped Robot" scenario?

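The robot is picked up and moved to a new location without being told. A basic Kalman filter cannot handle this sudden jump because it tracks a single Gaussian estimate centered near the old position. Recovery requires global relocalization, often via Particle Filters or visual place matching.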

Is 3D State Estimation necessary for flat warehouses?

Usually not. On flat warehouse floors, 2D (x, y, yaw) is enough. On ramps or rough ground, switch to full 6-DOF estimation so that unmodeled pitch and roll don't corrupt the position estimate.
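A quick worked example of how ignoring pitch corrupts position, assuming (hypothetically) that the robot covers d = 10 m of wheel travel up a θ = 10° ramp and a 2D estimator treats that travel as horizontal:

```latex
\Delta x_{\text{true}} = d\cos\theta = 10\,\text{m} \times \cos 10^\circ \approx 9.85\,\text{m},
\qquad
\Delta x_{\text{2D}} = d = 10\,\text{m},
\qquad
\text{error} \approx 0.15\,\text{m}
```

A 15 cm error from a single ramp is already enough to miss a docking target, and it adds up on every trip.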

How computationally expensive is this process?

A basic EKF is light enough to run on a microcontroller. Scan matching against 3D LiDAR point clouds, however, demands the powerful CPUs or GPUs found on today's AMRs.

How do dynamic obstacles (people, forklifts) affect estimation?

Dynamic obstacles such as people can block the LiDAR's view of walls. Robust algorithms remove moving points from the scans first, keeping localization accurate even in crowds.
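One simple way to drop points from moving obstacles is to compare each LiDAR beam with the range expected from the static map at the current pose estimate. A beam that returns much shorter than expected probably hit something not in the map. This sketch assumes the expected ranges come from ray-casting into the map, which is not shown; the function name and the 0.3 m margin are hypothetical.

```python
def filter_dynamic_points(measured, expected, margin=0.3):
    """Return indices of LiDAR beams consistent with the static map.

    measured: ranges (m) returned by the LiDAR, one per beam.
    expected: ranges (m) ray-cast from the current pose estimate into the map.
    Beams much shorter than expected likely hit a person or forklift,
    so they are excluded from scan matching.
    """
    return [i for i, (z, z_map) in enumerate(zip(measured, expected))
            if z >= z_map - margin]


# A person standing about 1.2 m away blocks beams 1 and 2 of a wall 4 m away.
kept = filter_dynamic_points([3.95, 1.2, 1.3, 4.05], [4.0, 4.0, 4.0, 4.0])
```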