Sensor Fusion

Unlock precise navigation and stronger safety for your mobile robot fleet. Sensor fusion blends LiDAR, cameras, and IMUs into a single, robust view of the environment that is more reliable than any single-sensor setup.

Core Concepts

Redundancy

It boosts reliability by having multiple sensors measure the same quantity, such as the distance to an obstacle. If one fails or is blocked, the others keep data flowing so the robot can keep operating safely.

Complementarity

It pairs each sensor's unique strengths, such as LiDAR's precise depth measurement with an RGB camera's ability to read signs and floor markings.

Kalman Filtering

The most widely used mathematical framework for fusion. It predicts the robot's next state from a motion model, then corrects that prediction in real time using noisy sensor measurements.
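A minimal one-dimensional Kalman filter illustrates the predict/correct cycle. It is a sketch under simplifying assumptions: the state is a single position, the motion input is a known odometry increment, and the noise variances are made up. The class name `Kalman1D` and all numbers are hypothetical. Real robots track multi-dimensional state (pose, velocity) and typically use an EKF or UKF for nonlinear models.

```python
from dataclasses import dataclass


@dataclass
class Kalman1D:
    """Scalar Kalman filter: constant-position model plus a control input.

    Hypothetical example: x is the robot's position along a corridor in meters.
    """
    x: float  # current state estimate
    p: float  # variance of the estimate
    q: float  # process-noise variance added at every prediction
    r: float  # measurement-noise variance of the sensor

    def predict(self, u: float = 0.0) -> None:
        # Shift the estimate by the odometry displacement u
        # and grow the uncertainty by the process noise.
        self.x += u
        self.p += self.q

    def update(self, z: float) -> None:
        # Kalman gain in [0, 1]: near 1 trusts the measurement, near 0 the prediction.
        k = self.p / (self.p + self.r)
        self.x += k * (z - self.x)  # correct using the innovation (z - prediction)
        self.p *= 1.0 - k           # uncertainty shrinks after every update


kf = Kalman1D(x=0.0, p=1.0, q=0.01, r=0.25)
kf.predict(u=0.5)   # odometry reports 0.5 m of travel
kf.update(z=0.62)   # a range-based sensor places the robot at 0.62 m
print(f"x={kf.x:.3f} m, variance={kf.p:.3f}")
```

The corrected estimate (about 0.596 m) lands between the prediction (0.5 m) and the measurement (0.62 m). It sits closer to the measurement because the predicted variance (1.01) is larger than the sensor variance (0.25).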

Time Synchronization

Syncing timestamps from different hardware is crucial. Even a few milliseconds of offset between a camera and a wheel encoder produces a position error that grows with speed, which can seriously degrade localization on fast-moving robots.
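A quick back-of-the-envelope calculation shows why the offset matters. The sketch assumes constant speed and yaw rate during the offset and uses a small-angle approximation. The function names and example figures are hypothetical.

```python
def translational_error_m(speed_m_s: float, offset_s: float) -> float:
    """Distance the robot travels during a timestamp offset (constant speed assumed)."""
    return speed_m_s * offset_s


def rotational_error_m(yaw_rate_rad_s: float, offset_s: float, range_m: float) -> float:
    """Lateral shift of a point at range_m if the robot turns during the offset.

    Small-angle approximation: arc length = range * angle.
    """
    return range_m * yaw_rate_rad_s * offset_s


offset = 0.010  # 10 ms between camera and encoder timestamps
print(f"Driving at 2 m/s: {translational_error_m(2.0, offset) * 1000:.0f} mm")
print(f"Turning at 1 rad/s, point 10 m away: {rotational_error_m(1.0, offset, 10.0) * 1000:.0f} mm")
```

Rotation is often the bigger problem. Its error grows with the distance to the observed point, so in this example a 10 ms offset misplaces a landmark 10 m away by about 10 cm.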

Extrinsic Calibration

Determining the rigid transform (rotation and translation) between sensors. You need to know exactly where the camera sits relative to the LiDAR to accurately link pixels to 3D points.
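The extrinsics are what let you link a LiDAR point to a pixel. This minimal sketch makes several assumptions:

- an ideal pinhole camera with no lens distortion;
- a LiDAR frame with x forward, y left, z up, and a camera frame with x right, y down, z forward;
- made-up intrinsics.

The function `project_lidar_point` and all matrices are hypothetical.

```python
def matvec(m, v):
    """Multiply a 3x3 matrix (list of rows) by a 3-vector."""
    return [sum(m[i][j] * v[j] for j in range(3)) for i in range(3)]


def project_lidar_point(p_lidar, R_cam_lidar, t_cam_lidar, K):
    """Project a 3-D LiDAR point into pixel coordinates (u, v).

    R_cam_lidar and t_cam_lidar are the extrinsics: they map a point expressed in
    the LiDAR frame into the camera frame. K is the 3x3 pinhole intrinsic matrix.
    Returns None for points behind the camera.
    """
    p_cam = [a + b for a, b in zip(matvec(R_cam_lidar, p_lidar), t_cam_lidar)]
    if p_cam[2] <= 0:
        return None
    u, v, w = matvec(K, p_cam)
    return (u / w, v / w)


# Hypothetical calibration: axes re-ordered from LiDAR (x fwd, y left, z up)
# to camera (x right, y down, z fwd); sensors co-located for simplicity.
R = [[0, -1, 0],
     [0, 0, -1],
     [1, 0, 0]]
t = [0.0, 0.0, 0.0]
K = [[500.0, 0.0, 320.0],   # fx, skew, cx
     [0.0, 500.0, 240.0],   # fy, cy
     [0.0, 0.0, 1.0]]

print(project_lidar_point([5.0, 0.0, 0.0], R, t, K))  # straight ahead -> image center
print(project_lidar_point([5.0, 1.0, 0.0], R, t, K))  # 1 m to the left -> left of center
```

If R or t is wrong, every projected point shifts. LiDAR depth then gets attached to the wrong pixels, which is why extrinsic errors show up directly as misplaced obstacles.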

Localization & Mapping

Fusion powers SLAM (Simultaneous Localization and Mapping). It counters odometry "drift" by continually correcting the position estimate with visual or geometric cues.

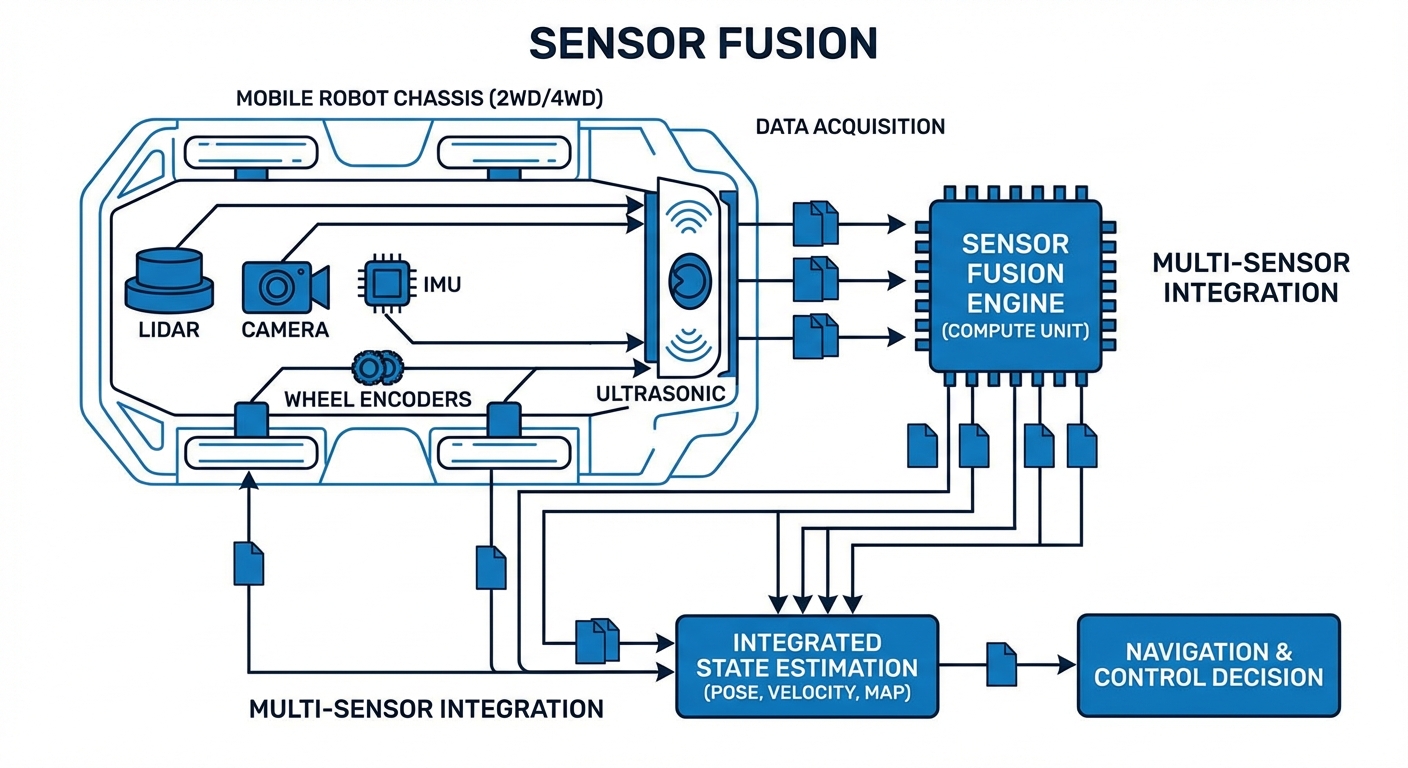

How It Works

Sensor fusion isn't just stacking data; it's probabilistic state estimation. The process starts by collecting raw streams: LiDAR point clouds, RGB camera frames, and IMU acceleration and angular-rate readings.

These streams first pass through preprocessing to filter noise and align timestamps. The system then matches features across sensors, for example verifying that the obstacle seen by the camera is the same one the radar detected.
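The text does not specify how features are matched across sensors. One simple option is greedy nearest-neighbor association with a distance gate. The sketch assumes camera and LiDAR detections have already been transformed into a shared robot frame as (x, y) positions in meters. The function name and the 0.5 m gate are hypothetical. Production systems often use the Hungarian algorithm or probabilistic data association instead.

```python
import math


def associate(camera_dets, lidar_dets, max_dist_m=0.5):
    """Greedy nearest-neighbor matching of (x, y) detections in a common frame.

    Returns (camera_index, lidar_index) pairs. Detections without a partner
    within max_dist_m stay unmatched, e.g. a camera false positive.
    """
    # All candidate pairs, closest first.
    candidates = sorted(
        (math.dist(cam, lid), i, j)
        for i, cam in enumerate(camera_dets)
        for j, lid in enumerate(lidar_dets)
    )
    used_cam, used_lidar, pairs = set(), set(), []
    for d, i, j in candidates:
        if d > max_dist_m:
            break  # every remaining pair is even farther apart
        if i not in used_cam and j not in used_lidar:
            pairs.append((i, j))
            used_cam.add(i)
            used_lidar.add(j)
    return pairs


camera = [(2.0, 0.1), (5.0, -1.0)]
lidar = [(5.2, -0.9), (2.1, 0.0), (8.0, 3.0)]
print(associate(camera, lidar))  # LiDAR object 2 has no camera match
```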

Finally, a fusion algorithm (typically an Extended Kalman Filter or a particle filter) blends the inputs. It weights each input by its trustworthiness, so lower variance means more weight, and outputs a sharp "best estimate" of the robot's pose and velocity along with its uncertainty.
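The "weighting by variance" idea is easiest to see in its simplest form: combining independent measurements of the same quantity, each weighted by the inverse of its variance. This is the static special case of a Kalman update, not a full EKF or particle filter. The sensor readings and variances are made up.

```python
def fuse(estimates):
    """Inverse-variance weighted fusion of independent scalar estimates.

    estimates: iterable of (value, variance) pairs.
    Returns (fused_value, fused_variance).
    """
    estimates = list(estimates)
    weights = [1.0 / var for _, var in estimates]  # lower variance -> more weight
    total = sum(weights)
    value = sum(w * x for w, (x, _) in zip(weights, estimates)) / total
    return value, 1.0 / total  # fused variance is smaller than any single input


# Hypothetical obstacle distance: LiDAR (sigma 0.1 m) vs. camera depth (sigma 0.3 m)
value, variance = fuse([(10.0, 0.01), (10.4, 0.09)])
print(f"{value:.2f} m, variance {variance:.3f}")
```

The fused value (about 10.04 m) sits much closer to the more trustworthy LiDAR reading. The fused variance (0.009) is lower than that of either sensor alone.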

Real-World Applications

Warehouse Automation (AGVs)

In busy fulfillment centers, robots combine wheel odometry with 2D LiDAR to distinguish fixed racks from moving people, enabling fast, safe travel through shared spaces.

Healthcare Logistics (AMRs)

Hospital delivery robots use visual-inertial fusion to navigate long, uniform corridors where LiDAR scan matching can falter, autonomously delivering medications and lab samples.

Outdoor Material Handling

Yard tractors working in rain or fog combine radar (which penetrates bad weather) with LiDAR (which captures precise shape), so they stay operational when weather degrades their optical sensors.

Precision Docking

For charging docks or conveyor handoffs, robots switch to a close-range fusion mode that combines short-range IR sensors with precise encoders for millimeter-accurate alignment.

Frequently Asked Questions

Why is sensor fusion necessary for modern AGVs?

No single sensor is flawless: LiDAR struggles with glass, cameras struggle in dim light, and wheel encoders drift due to slippage. Fusion covers these gaps and builds in redundancy, so the robot keeps track of its position and surroundings even if one sensor fails.

What is the difference between Loose and Tight coupling?

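Loose coupling first processes each sensor's data into its own estimate (e.g., a GPS position fix and a LiDAR-derived position) and then combines those estimates. Tight coupling feeds the raw measurements (e.g., GPS satellite pseudoranges and LiDAR point clouds) directly into a single filter. Tight coupling is more computationally demanding. In return, it is generally more accurate and robust in difficult conditions, such as when too few satellites are visible for a standalone GPS fix.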

Does sensor fusion increase the hardware cost significantly?

More sensors raise the bill of materials (BOM), but fusion can lower total cost. Fusing affordable, lower-spec sensors (low-cost IMUs, standard cameras) can approach the performance of expensive high-end navigation systems.

What computing power is required for real-time fusion?

It depends on complexity. Basic EKF fusion for odometry runs comfortably on microcontrollers. Merging high-resolution 3D LiDAR point clouds with 4K video for SLAM, however, demands heavy hitters like NVIDIA Jetson modules or GPU-equipped industrial PCs.

How do you handle time synchronization between sensors?

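Precise timestamping is key. Systems use hardware triggers for simultaneous capture, or PTP (Precision Time Protocol, IEEE 1588) to synchronize clocks across devices. Software buffers then align packets by timestamp before fusion. This avoids "ghosting" artifacts, where the same object appears at two slightly different positions.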
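Once samples carry accurate timestamps, a common software technique is to interpolate the faster stream to the exact capture time of the slower one. This minimal sketch assumes a buffer of (timestamp, value) pairs sorted by time, holding a quantity that varies smoothly enough for linear interpolation (e.g., wheel-odometry distance). `interpolate_at` is a hypothetical helper, not part of any particular framework.

```python
from bisect import bisect_left


def interpolate_at(samples, t):
    """Linearly interpolate a buffered (timestamp, value) stream at time t.

    samples must be sorted by timestamp. Returns None when t is outside the
    buffered window, so the caller can wait for more data rather than extrapolate.
    """
    times = [ts for ts, _ in samples]
    i = bisect_left(times, t)
    if i < len(times) and times[i] == t:
        return samples[i][1]  # exact timestamp match
    if i == 0 or i == len(times):
        return None  # before the first or after the last buffered sample
    (t0, v0), (t1, v1) = samples[i - 1], samples[i]
    alpha = (t - t0) / (t1 - t0)
    return v0 + alpha * (v1 - v0)


# 100 Hz odometry buffer (seconds, meters traveled); a camera frame stamped at t = 0.015 s
odom = [(0.00, 0.00), (0.01, 0.02), (0.02, 0.04)]
print(interpolate_at(odom, 0.015))
```

Returning None instead of extrapolating is a deliberate choice. Fusing a frame only after odometry on both sides of it has arrived adds a little latency, but it avoids pairing the frame with a stale or guessed pose.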

What happens if one sensor provides garbage data?

Robust fusion algorithms run integrity checks (such as Mahalanobis-distance gating) to reject outliers. If a sensor's reading strays too far from the filter's prediction, it is downweighted or ignored so bad data cannot corrupt the robot's path.
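Mahalanobis gating compares the innovation (measurement minus prediction) against the filter's innovation covariance. The same residual therefore counts as an outlier when the filter is confident and as plausible when it is not. The sketch below handles a 2-D position measurement and assumes the filter already provides the covariance S. The 5.991 threshold is the 95% chi-square quantile for 2 degrees of freedom. The function names and numbers are hypothetical.

```python
CHI2_95_2DOF = 5.991  # 95% quantile of the chi-square distribution, 2 degrees of freedom


def mahalanobis_sq_2d(z, z_pred, S):
    """Squared Mahalanobis distance of the innovation z - z_pred under 2x2 covariance S."""
    nx, ny = z[0] - z_pred[0], z[1] - z_pred[1]
    (a, b), (c, d) = S
    det = a * d - b * c
    # Closed-form inverse of a 2x2 matrix: [[d, -b], [-c, a]] / det
    return (nx * (d * nx - b * ny) + ny * (-c * nx + a * ny)) / det


def accept_measurement(z, z_pred, S, threshold=CHI2_95_2DOF):
    """Gate: keep the measurement only if it is statistically consistent with the prediction."""
    return mahalanobis_sq_2d(z, z_pred, S) <= threshold


S = [[0.04, 0.0], [0.0, 0.04]]  # innovation covariance: 0.2 m std dev per axis
predicted = (1.0, 2.0)
print(accept_measurement((1.1, 2.1), predicted, S))  # small residual -> accepted
print(accept_measurement((2.0, 2.0), predicted, S))  # 1 m jump -> rejected
```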

Is Kalman Filtering the only method used?

The Kalman Filter (and its EKF/UKF variants) dominates state estimation, but alternatives are widely used. Particle filters excel at localization in large maps (Monte Carlo Localization). Graph-based optimization and end-to-end deep learning are increasingly used for demanding SLAM tasks.
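A toy Monte Carlo Localization loop shows how a particle filter differs from a Kalman filter. Instead of a single Gaussian, the belief is a cloud of samples that is moved, weighted, and resampled. The setup is an illustrative assumption: a 1-D corridor, one wall at a known position, and a noise-free range reading. `mcl_step` and all parameters are hypothetical.

```python
import math
import random


def mcl_step(particles, odom_m, range_m, wall_x, motion_std, meas_std, rng):
    """One predict / weight / resample cycle of a 1-D particle filter.

    Toy setup (hypothetical): the robot drives along x, and a range sensor
    measures the distance to a wall at the known position wall_x.
    """
    # 1) Predict: apply the odometry increment plus sampled motion noise.
    moved = [p + odom_m + rng.gauss(0.0, motion_std) for p in particles]
    # 2) Weight: Gaussian likelihood of the observed range for each particle.
    weights = [math.exp(-0.5 * ((range_m - (wall_x - p)) / meas_std) ** 2) for p in moved]
    if sum(weights) == 0.0:
        return moved  # no particle explains the reading; a real system would re-initialize
    # 3) Resample: draw particles in proportion to their weights.
    return rng.choices(moved, weights=weights, k=len(moved))


rng = random.Random(42)                                   # fixed seed for repeatability
particles = [rng.uniform(0.0, 10.0) for _ in range(500)]  # robot could be anywhere in 0-10 m
true_x, wall_x = 2.0, 20.0
for _ in range(5):
    true_x += 1.0                                         # robot drives 1 m per step
    particles = mcl_step(particles, 1.0, wall_x - true_x, wall_x, 0.05, 0.2, rng)
print(f"true x = {true_x:.1f} m, estimate = {sum(particles) / len(particles):.2f} m")
```

The particles start spread uniformly over 0-10 m, so the robot initially has no idea where it is. Within a few steps the cloud collapses around the true position. This ability to localize globally, without an initial guess, is what makes particle filters attractive for large maps.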

How often does the system need calibration?

Intrinsic calibration (lens and internal sensor parameters) is mostly a one-time task. Extrinsic calibration (relative sensor positions) needs rechecking after collisions or strong jolts. Advanced systems offer "online calibration" that refines these parameters dynamically during operation.

Can sensor fusion help with outdoor navigation?

Absolutely. Outdoor environments are unstructured and exposed to weather. Fusing GNSS (GPS) with IMU and wheel odometry bridges satellite dropouts caused by buildings or trees. This keeps the position estimate reliable through short outages in "urban canyons."
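The bridging idea can be sketched in heavily simplified form. At every step, wheel-odometry speed and IMU yaw propagate the position (dead reckoning), and whenever a GNSS fix arrives the estimate is nudged toward it. The fixed blending gain is a hypothetical stand-in for a proper Kalman update, and the function name and values are illustrative.

```python
import math


def dead_reckon_step(xy, speed_m_s, yaw_rad, dt_s, gnss_xy=None, gnss_gain=0.3):
    """Advance a 2-D position estimate by one time step.

    Odometry speed and IMU yaw propagate the position; when a GNSS fix is
    available, the estimate is pulled toward it by gnss_gain (0 = ignore, 1 = snap).
    """
    x = xy[0] + speed_m_s * math.cos(yaw_rad) * dt_s
    y = xy[1] + speed_m_s * math.sin(yaw_rad) * dt_s
    if gnss_xy is not None:
        x += gnss_gain * (gnss_xy[0] - x)
        y += gnss_gain * (gnss_xy[1] - y)
    return (x, y)


# Driving east at 1 m/s; GNSS drops out (None) behind a building for two steps.
fixes = [(1.1, 0.0), None, None, (4.1, 0.0)]
pos = (0.0, 0.0)
for fix in fixes:
    pos = dead_reckon_step(pos, 1.0, 0.0, 1.0, fix)
    print(f"x={pos[0]:.2f}, gnss={'yes' if fix else 'lost'}")
```

During the dropout the estimate keeps advancing on odometry and IMU alone. When satellites return, the next fix pulls it back toward the GNSS position.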

What is the difference between low-level and high-level fusion?

Low-level fusion combines raw data (e.g., blending thermal and RGB pixels). High-level fusion combines *decisions* or *objects* from each sensor (e.g., "Camera A sees a person" + "LiDAR B sees an obstacle" = "Confirmed Person"). High-level fusion uses less bandwidth but can miss fine details present in the raw data.
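High-level (object-level) fusion can be as simple as a rule over each sensor's outputs. The sketch mirrors the "Confirmed Person" example and assumes both sensors report object positions in a shared robot frame. The data classes, the 0.5 m radius, and the rule itself are hypothetical.

```python
import math
from dataclasses import dataclass


@dataclass
class CameraObject:
    label: str  # classifier output, e.g. "person"
    x: float    # position in the robot frame (meters)
    y: float


@dataclass
class LidarObstacle:
    x: float
    y: float


def confirm_people(camera_objs, lidar_obs, max_dist_m=0.5):
    """Object-level fusion: confirm a person only when the camera labels it as one
    AND the LiDAR reports an obstacle within max_dist_m of the same spot."""
    return [
        obj for obj in camera_objs
        if obj.label == "person"
        and any(math.hypot(obj.x - o.x, obj.y - o.y) <= max_dist_m for o in lidar_obs)
    ]


cams = [CameraObject("person", 3.0, 0.0), CameraObject("person", 6.0, 2.0),
        CameraObject("box", 1.0, 1.0)]
lidar = [LidarObstacle(3.2, 0.1), LidarObstacle(1.0, 1.0)]
print(confirm_people(cams, lidar))  # only the person at (3.0, 0.0) is confirmed
```

Only a few numbers per object cross the sensor boundary, which is why this approach is cheap on bandwidth. The raw points and pixels behind each detection are never combined, so any detail they contained is lost.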

How does IMU integration improve navigation?

IMUs run at high rates (typically 100 Hz or more) compared with LiDAR and cameras (typically 10-30 Hz). Fusion uses IMU data to fill the gaps between LiDAR scans and camera frames, delivering smooth velocity and orientation estimates for stable control loops.
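Filling the gap between frames amounts to integrating the high-rate IMU samples. This minimal sketch covers heading only and assumes a 200 Hz gyro with bias-free readings and simple Euler integration. `integrate_yaw` is hypothetical; real systems also estimate gyro bias and integrate full 3-D orientation.

```python
def integrate_yaw(yaw0_rad, gyro_z_rad_s, dt_s):
    """Propagate heading from the last camera frame using gyro yaw-rate samples.

    Returns the heading after each IMU sample, so the controller gets a fresh
    orientation estimate at IMU rate instead of waiting for the next frame.
    """
    headings = []
    yaw = yaw0_rad
    for omega in gyro_z_rad_s:
        yaw += omega * dt_s  # Euler step: heading change = rate * sample period
        headings.append(yaw)
    return headings


# A 20 Hz camera leaves 50 ms gaps; a 200 Hz gyro supplies 10 samples per gap.
samples = [0.5] * 10  # constant 0.5 rad/s turn
headings = integrate_yaw(0.0, samples, 1.0 / 200.0)
print(len(headings), round(headings[-1], 4))
```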