RGB-D Cameras

Unlock real spatial smarts for your mobile robots: blend high-res color images with precise depth per pixel. RGB-D sensors help AGVs tackle tricky environments, spot objects, and work safely around humans.

Core Concepts

Depth Sensing

Unlike regular cameras, RGB-D sensors measure the distance to whatever each pixel sees. That builds a depth map telling the robot how far away each obstacle is.

Point Clouds

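Combining RGB and depth creates a 3D point cloud: a set of (X, Y, Z) points, each carrying its color, that captures your environment's geometry in real time. The points are computed in the camera's own frame, then transformed into robot coordinates using the camera's mounting pose.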
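Turning a depth image into a point cloud is a short calculation with the pinhole camera model. Here's a minimal pure-Python sketch, assuming metric depth in meters (0.0 for missing pixels), a color image already aligned to the depth image, and hypothetical intrinsics `fx`, `fy`, `cx`, `cy`. Points come out in the camera's optical frame (x right, y down, z forward); real camera SDKs vectorize this and correct lens distortion, which is skipped here.

```python
def depth_to_point_cloud(depth, color, fx, fy, cx, cy):
    """Back-project a depth image into a colored point cloud (pinhole model).

    depth: rows of metric depth values in meters; 0.0 marks a missing pixel.
    color: rows of (r, g, b) tuples, assumed already aligned to the depth image.
    fx, fy, cx, cy: camera intrinsics in pixels (hypothetical values).
    Returns (x, y, z, rgb) tuples in the camera optical frame
    (x right, y down, z forward).
    """
    points = []
    for v, row in enumerate(depth):          # v: pixel row index
        for u, z in enumerate(row):          # u: pixel column index
            if z <= 0.0:
                continue                     # skip holes and invalid readings
            x = (u - cx) * z / fx            # offset right of the optical axis
            y = (v - cy) * z / fy            # offset below the optical axis
            points.append((x, y, z, color[v][u]))
    return points
```

Each pixel's ray is simply scaled by its measured depth, which is why a missing depth value leaves no point at all.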

Sensor Fusion

RGB-D cameras map color texture right onto the depth geometry. So the AI doesn't just spot "an object" at 2 meters; it can identify it as "a wooden pallet".
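Attaching color to depth usually means projecting each depth point into the color camera, because the two sensors are physically offset. A sketch of that lookup, assuming a hypothetical depth-to-color extrinsic (`R`, `t`) and a distortion-free pinhole model for the color camera; in practice, the camera SDK's own alignment routine is usually the better choice.

```python
def transform(R, t, p):
    """Apply p' = R p + t to a 3D point (R: 3x3 nested tuples, t: 3-tuple)."""
    return tuple(sum(R[i][j] * p[j] for j in range(3)) + t[i] for i in range(3))


def color_for_point(p_depth, R, t, fx, fy, cx, cy, color_image):
    """Return the color pixel a depth-camera point lands on, or None.

    R, t: hypothetical extrinsic calibration from depth to color camera.
    fx, fy, cx, cy: hypothetical color-camera intrinsics in pixels.
    """
    x, y, z = transform(R, t, p_depth)       # point in the color camera frame
    if z <= 0.0:
        return None                          # behind the color camera
    u = round(fx * x / z + cx)               # projected pixel column
    v = round(fy * y / z + cy)               # projected pixel row
    if 0 <= v < len(color_image) and 0 <= u < len(color_image[0]):
        return color_image[v][u]
    return None                              # falls outside the color image
```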

Structured Light

Many RGB-D cameras project an invisible infrared (IR) pattern onto the scene, then calculate depth from how that pattern shifts and warps across object surfaces.
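Structured-light depth comes down to triangulation: treat the projector as a second camera, and the pattern's sideways shift (disparity) in the image gives the distance via Z = f·b/d. A minimal sketch for an idealized, rectified projector/camera pair; the focal length and baseline used below are hypothetical.

```python
def depth_from_disparity(disparity_px, focal_px, baseline_m):
    """Triangulated depth Z = f * b / d for a rectified camera/projector pair.

    focal_px: focal length in pixels.
    baseline_m: projector-to-camera distance in meters.
    disparity_px: observed shift of the pattern in pixels.
    """
    if disparity_px <= 0:
        raise ValueError("disparity must be positive (no match, or point at infinity)")
    return focal_px * baseline_m / disparity_px
```

With a 600-pixel focal length and a 5 cm baseline, a 30-pixel shift works out to 1 m. Depth is inversely proportional to disparity, so distant surfaces produce only tiny shifts.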

Time of Flight (ToF)

ToF sensors fire light pulses and time the round trip. That delivers accurate depth that holds up well even as lighting shifts.
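The ToF arithmetic is simple: light covers the distance twice, so d = c·t/2. Many ToF depth cameras measure the phase shift of continuously modulated light rather than timing individual pulses, so both variants are sketched below; the modulation frequency is a hypothetical parameter.

```python
import math

SPEED_OF_LIGHT_M_S = 299_792_458.0


def pulsed_tof_distance(round_trip_s):
    """Pulsed ToF: the light travels out and back, so halve the path length."""
    return SPEED_OF_LIGHT_M_S * round_trip_s / 2.0


def cw_tof_distance(phase_rad, mod_freq_hz):
    """Continuous-wave ToF: d = c * phase / (4 * pi * f_mod).

    Results repeat every c / (2 * f_mod), the unambiguous range.
    """
    return SPEED_OF_LIGHT_M_S * phase_rad / (4.0 * math.pi * mod_freq_hz)


def timing_resolution_for(depth_resolution_m):
    """Round-trip timing precision needed to resolve a given depth step."""
    return 2.0 * depth_resolution_m / SPEED_OF_LIGHT_M_S
```

The numbers also show why ToF electronics are demanding: resolving 1 cm of depth means resolving roughly 67 picoseconds of round-trip time.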

Visual SLAM

RGB-D data powers vSLAM (Visual Simultaneous Localization and Mapping), letting robots map unknown spaces and track their own position within them on the fly.
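Full vSLAM is far beyond a snippet, but one core step, estimating how the camera moved between two frames from matched points, can be illustrated. This sketch swaps in a simplified planar (2D) version of SVD-based Kabsch/Umeyama alignment, assuming features have already been matched between frames and projected onto the floor plane. Real RGB-D odometry estimates a full 6-DoF pose and rejects bad matches with RANSAC. If the points are static landmarks seen from the robot, the robot's own motion between frames is the inverse of the returned transform.

```python
import math


def estimate_planar_motion(prev_pts, curr_pts):
    """Least-squares rigid 2D transform mapping prev_pts onto curr_pts.

    prev_pts, curr_pts: matched (x, y) positions of the same landmarks in two
    frames. Returns (theta, tx, ty) such that curr ~= R(theta) * prev + t.
    """
    n = len(prev_pts)
    if n < 2 or n != len(curr_pts):
        raise ValueError("need at least two matched point pairs")
    ax = sum(p[0] for p in prev_pts) / n      # centroid of previous points
    ay = sum(p[1] for p in prev_pts) / n
    bx = sum(p[0] for p in curr_pts) / n      # centroid of current points
    by = sum(p[1] for p in curr_pts) / n
    s_cos = s_sin = 0.0
    for (px, py), (qx, qy) in zip(prev_pts, curr_pts):
        px, py, qx, qy = px - ax, py - ay, qx - bx, qy - by
        s_cos += px * qx + py * qy            # sum of dot products
        s_sin += px * qy - py * qx            # sum of 2D cross products
    theta = math.atan2(s_sin, s_cos)          # optimal rotation angle
    c, s = math.cos(theta), math.sin(theta)
    tx = bx - (c * ax - s * ay)               # translation aligns the centroids
    ty = by - (s * ax + c * ay)
    return theta, tx, ty
```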

How It Works

"RGB-D" means channels for Red, Green, Blue, plus Depth. Standard cameras squash 3D reality into 2D; RGB-D keeps the Z-axis (distance) intact.

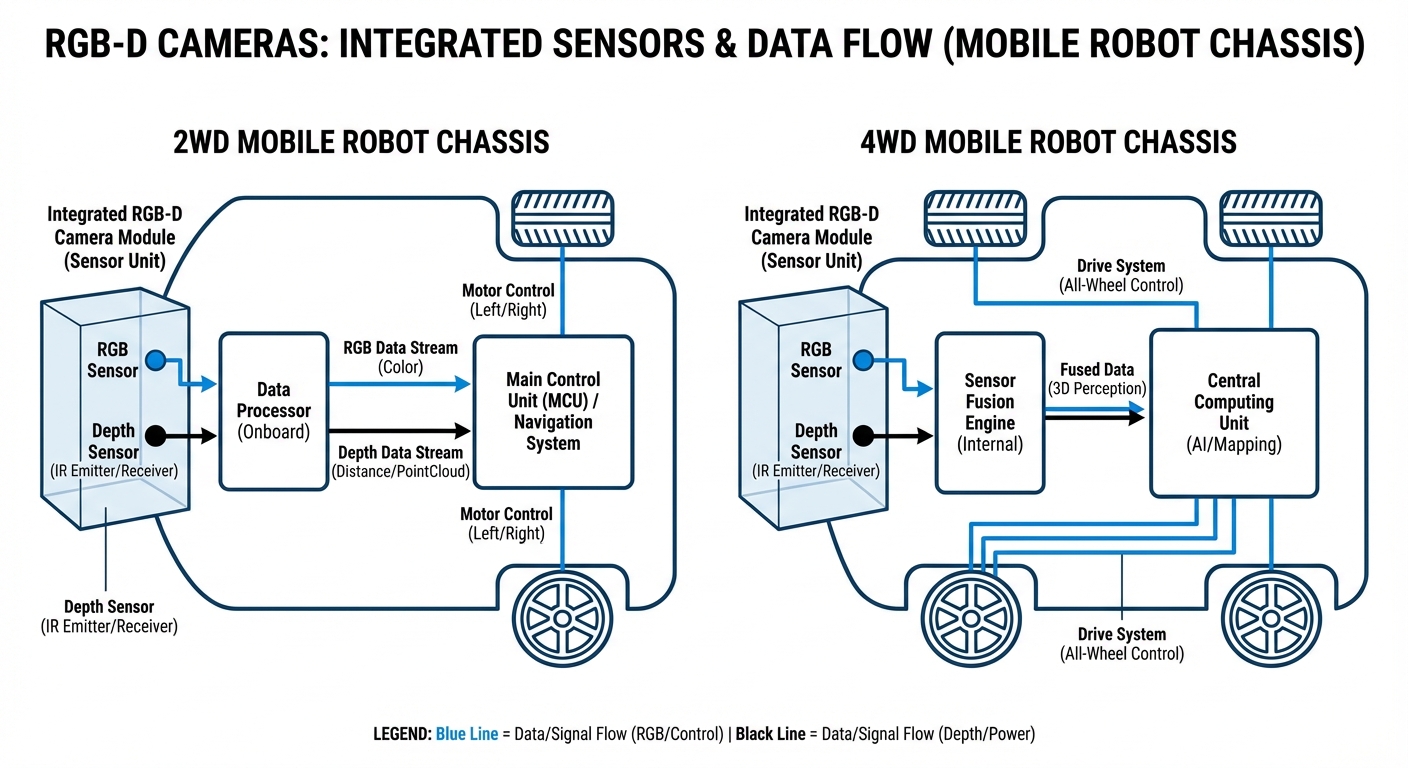

On a typical mobile robot, the RGB sensor captures how the environment looks, which is handy for operators and for AI that has to tell things apart (a stop sign vs. a red box, say). At the same time, the depth sensor (stereo, structured light, or Time-of-Flight) produces a depth image, often displayed in grayscale with brighter meaning closer.
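Rendering depth as that grayscale image is a simple linear mapping. A sketch, assuming metric depth and hypothetical display limits of 0.2 m to 5 m; invalid or out-of-range pixels are drawn black.

```python
def depth_to_gray(depth, min_m=0.2, max_m=5.0):
    """Map metric depth to 8-bit gray values where brighter means closer.

    min_m, max_m: hypothetical display range in meters.
    """
    out = []
    for row in depth:
        gray_row = []
        for z in row:
            if min_m <= z <= max_m:
                # 1.0 at min_m (closest, white) down to 0.0 at max_m (black)
                frac = (max_m - z) / (max_m - min_m)
                gray_row.append(round(255 * frac))
            else:
                gray_row.append(0)     # holes (0.0) and out-of-range readings
        out.append(gray_row)
    return out
```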

The onboard processor time-synchronizes these two streams, letting the navigation stack drop obstacles into a local costmap. That way, AGVs dodge chairs or people in real time instead of relying only on a static floor plan.
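Dropping obstacles into a local costmap boils down to projecting 3D points onto a 2D grid and keeping only points at heights the robot could actually hit. A simplified sketch, assuming points are already in the robot base frame (x forward, y left, z up) and using hypothetical grid and height limits. Real navigation stacks also raytrace to clear free space and inflate obstacles, which this omits.

```python
import math


def points_to_costmap(points, resolution=0.05, size_m=4.0,
                      min_height=0.05, max_height=1.8):
    """Return the set of occupied (i, j) cells in a square grid centered on the robot.

    points: (x, y, z) in the robot base frame, meters.
    resolution, size_m, min_height, max_height: hypothetical tuning values.
    Cell (i, j) covers x in [i*res - size/2, (i+1)*res - size/2), same for y/j.
    """
    half = size_m / 2.0
    n = int(round(size_m / resolution))      # cells per side
    occupied = set()
    for x, y, z in points:
        if not (min_height <= z <= max_height):
            continue                         # floor returns or clear headroom
        i = math.floor((x + half) / resolution)
        j = math.floor((y + half) / resolution)
        if 0 <= i < n and 0 <= j < n:        # ignore points outside the local window
            occupied.add((i, j))
    return occupied
```

Because the height filter looks at every point in the depth camera's view, a tabletop or hanging cable at 1 m lands in the costmap just like a box on the floor.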

Real-World Applications

Dynamic Obstacle Avoidance

A 2D LiDAR sweeps a single plane, so it's often blind to overhangs like tabletops or forklift forks that sit above or below that plane. RGB-D senses a full 3D volume, catching obstacles at any height within its field of view, from hanging cables to protruding shelves.

Pallet Pocket Detection

Autonomous forklifts rely on RGB-D to find pallet pockets precisely. Depth data measures the pallet's position and orientation for hands-free pickup.
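One way to get the pallet's position and orientation from depth is to fit a line to points sampled across its front face. A least-squares sketch, assuming the face points have already been segmented and expressed in the robot frame (x forward, y left); a production system would add outlier rejection (RANSAC, for example) and then locate the pockets themselves.

```python
import math


def fit_pallet_face(points):
    """Least-squares line fit x = a*y + b through pallet-face points.

    points: (x, y) pairs in the robot frame, e.g. depth pixels from one image
    row across the pallet front. Returns (center_x, center_y, yaw_rad), where
    yaw is 0 when the face is square to the robot and positive when its left
    end (larger y) is farther away.
    """
    n = len(points)
    if n < 2:
        raise ValueError("need at least two points")
    mx = sum(p[0] for p in points) / n
    my = sum(p[1] for p in points) / n
    syy = sum((p[1] - my) ** 2 for p in points)
    if syy == 0.0:
        raise ValueError("points must span some lateral distance")
    sxy = sum((p[0] - mx) * (p[1] - my) for p in points)
    slope = sxy / syy                  # change in depth per meter sideways
    return mx, my, math.atan(slope)
```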

Semantic Scene Understanding

In packed warehouses, robots must tell a person (a temporary obstacle) from a wall (a permanent one). RGB-D data feeds deep-learning models that classify objects, so the robot can plan smarter paths.
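Combining a 2D detector with depth is a common way to put this into practice. The sketch below takes the median depth inside a bounding box and applies a toy policy; the box format, class labels, and 1.5 m stop distance are hypothetical, and no real detector API is assumed.

```python
import statistics


def object_distance(depth, box, min_valid=0.1):
    """Median depth (meters) inside a bounding box, or None if nothing valid.

    depth: rows of metric depth aligned with the color image.
    box: (u_min, v_min, u_max, v_max) pixel bounds, max exclusive.
    The median resists background pixels and holes better than the mean.
    """
    u0, v0, u1, v1 = box
    values = [z for row in depth[v0:v1] for z in row[u0:u1] if z >= min_valid]
    return statistics.median(values) if values else None


def plan_action(label, distance_m, stop_distance_m=1.5):
    """Toy policy: wait for people, replan around static obstacles."""
    if distance_m is None:
        return "wait"                  # no valid depth: be conservative
    if distance_m > stop_distance_m:
        return "continue"
    return "wait" if label == "person" else "replan"
```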

Docking & High-Precision Alignment

When an AGV nears a charger or conveyor, GPS (which rarely works indoors) and wheel odometry can't deliver the precision needed. RGB-D tracks markers or geometry for millimeter-level alignment.

Frequently Asked Questions

What’s the main difference between RGB-D cameras and LiDAR?

LiDAR fires laser pulses to build precise, long-range 2D or 3D maps, but it's pricey and captures no color. RGB-D delivers dense 3D data with color at a lower cost, though with shorter range and more sensitivity to lighting.

How does lighting affect RGB-D performance?

It depends on the technology. Passive stereo needs good ambient light to pick up surface texture; structured light struggles in direct sunlight, which washes out the IR pattern. ToF generally holds up best, though strong IR sources (including direct sunlight) can still disrupt it.

Can RGB-D cameras detect glass or transparent surfaces?

Nope, not usually. With most depth methods, such as IR projection or ToF, the light passes right through clear glass or bounces unpredictably off mirrors, leaving 'holes' in the depth map. Mobile robots often rely on sonar (ultrasonic sensors) or dedicated software filters to handle glass walls safely.
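A simple software filter along these lines flags image regions where most depth pixels are missing. It's only a heuristic with a hypothetical threshold (black or out-of-range surfaces trigger it too), so treat a hit as a reason to slow down and cross-check with ultrasonic sensors, not as proof of glass.

```python
def invalid_fraction(depth, box):
    """Fraction of pixels in box (u_min, v_min, u_max, v_max) with no depth."""
    u0, v0, u1, v1 = box
    pixels = [z for row in depth[v0:v1] for z in row[u0:u1]]
    if not pixels:
        raise ValueError("empty region")
    return sum(1 for z in pixels if z <= 0.0) / len(pixels)


def maybe_transparent(depth, box, threshold=0.6):
    """Flag a region as suspicious (possible glass) if most pixels are holes.

    threshold: hypothetical tuning value.
    """
    return invalid_fraction(depth, box) >= threshold
```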

What’s the typical range of an RGB-D camera for robotics?

Most off-the-shelf RGB-D cameras, like Intel RealSense or OAK-D, shine in close-up work, handling distances from about 0.2 meters out to 5 or 10 meters. Past that, depth accuracy drops off sharply, while LiDAR stays accurate at much longer ranges.
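Why accuracy drops off: for stereo and structured-light triangulation, Z = f·b/d, so a fixed disparity error Δd turns into a depth error of roughly ΔZ ≈ Z²·Δd/(f·b). The error grows with the square of distance. A sketch with hypothetical camera parameters (ToF sensors follow a different error model):

```python
def stereo_depth_error(z_m, focal_px, baseline_m, disparity_error_px=0.1):
    """Approximate depth uncertainty dZ = Z^2 * dd / (f * b), in meters.

    disparity_error_px: hypothetical sub-pixel matching accuracy.
    """
    return z_m ** 2 * disparity_error_px / (focal_px * baseline_m)


for z in (0.5, 1.0, 2.0, 4.0):
    err_mm = 1000 * stereo_depth_error(z, focal_px=600, baseline_m=0.05)
    print(f"{z:.1f} m -> +/- {err_mm:.1f} mm")
```

With these assumed values the error is about 3 mm at 1 m but about 5 cm at 4 m: quadrupling the distance multiplies the error by 16.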

Do I need a GPU to process RGB-D data?

Crunching 3D point clouds takes a lot of computing power. Simple obstacle avoidance can run on a regular CPU, but for semantic segmentation, object detection, or dense vSLAM, you'll want an onboard GPU (think NVIDIA Jetson) for smooth real-time performance.
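A standard trick for keeping CPU-only pipelines fast is to thin the point cloud with a voxel grid before doing anything else. A pure-Python sketch of the idea; libraries such as PCL and Open3D provide optimized voxel-grid filters, and the voxel size is a tuning choice.

```python
import math
from collections import defaultdict


def voxel_downsample(points, voxel_size):
    """Replace all points falling in each cubic voxel with their centroid.

    points: iterable of (x, y, z) in meters; voxel_size: edge length in meters.
    """
    if voxel_size <= 0:
        raise ValueError("voxel_size must be positive")
    buckets = defaultdict(lambda: [0.0, 0.0, 0.0, 0])   # running sums + count
    for x, y, z in points:
        key = (math.floor(x / voxel_size),
               math.floor(y / voxel_size),
               math.floor(z / voxel_size))
        acc = buckets[key]
        acc[0] += x
        acc[1] += y
        acc[2] += z
        acc[3] += 1
    return [(sx / n, sy / n, sz / n) for sx, sy, sz, n in buckets.values()]
```

At a 5 cm voxel size, a dense patch of floor or wall collapses to one point per voxel, which can shrink a cloud by orders of magnitude before obstacle checks run.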

What’s the difference between Structured Light and Stereoscopic depth?

Stereoscopic vision uses two cameras spaced apart, just like our eyes. It works well outdoors but needs textured surfaces to find matches. Structured light, on the other hand, projects a known pattern onto the scene for depth calculation, so it's very precise even on blank walls, but it struggles in bright sunlight.
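Stereo's need for texture falls out of how matching works: each pixel's neighborhood in the left image is compared against shifted neighborhoods in the right image, and on a blank surface every shift looks equally good. A minimal sum-of-absolute-differences (SAD) block-matching sketch on one rectified scanline, with hypothetical window and disparity limits:

```python
def sad_cost(left_row, right_row, u, d, half_window):
    """Sum of absolute differences between a left window and a shifted right window."""
    return sum(abs(left_row[u + k] - right_row[u - d + k])
               for k in range(-half_window, half_window + 1))


def match_disparity(left_row, right_row, u, half_window=2, max_disp=16):
    """Best disparity for pixel u on a rectified scanline.

    A point at column u in the left image appears at column u - d in the
    right image. Returns (disparity, ambiguous), where ambiguous means another
    disparity ties the best cost, as happens on a textureless surface.
    """
    if u - half_window < 0 or u + half_window >= len(left_row):
        raise ValueError("window falls outside the left row")
    costs = [(sad_cost(left_row, right_row, u, d, half_window), d)
             for d in range(max_disp + 1)
             if u - d - half_window >= 0]
    best_cost, best_d = min(costs)
    ties = sum(1 for cost, _ in costs if cost == best_cost)
    return best_d, ties > 1
```

On a textured row the correct shift wins clearly; on a uniform row every candidate scores the same, so the match is ambiguous. Structured light sidesteps this by projecting its own texture.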

How are RGB-D cameras calibrated?

They need both intrinsic calibration (lens distortion, focal length, and principal point) and extrinsic calibration (the camera's position and orientation relative to the robot's base). Many units come factory-calibrated, but for hard-working AGV fleets, regular re-calibration keeps the depth data aligned with the robot's motion model.
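Applying the extrinsic calibration means transforming every point from the camera's optical frame into the robot's base frame. A sketch, assuming the common robotics conventions (optical frame: x right, y down, z forward; base frame: x forward, y left, z up) and a hypothetical mounting pose; intrinsic calibration and distortion correction happen earlier and aren't shown.

```python
import math

# Camera optical frame (x right, y down, z forward) -> camera body frame
# (x forward, y left, z up).
OPTICAL_TO_BODY = ((0.0, 0.0, 1.0),
                   (-1.0, 0.0, 0.0),
                   (0.0, -1.0, 0.0))


def rpy_to_matrix(roll, pitch, yaw):
    """Rotation matrix R = Rz(yaw) * Ry(pitch) * Rx(roll); positive pitch tilts x down."""
    cr, sr = math.cos(roll), math.sin(roll)
    cp, sp = math.cos(pitch), math.sin(pitch)
    cy, sy = math.cos(yaw), math.sin(yaw)
    return ((cy * cp, cy * sp * sr - sy * cr, cy * sp * cr + sy * sr),
            (sy * cp, sy * sp * sr + cy * cr, sy * sp * cr - cy * sr),
            (-sp, cp * sr, cp * cr))


def mat_vec(m, v):
    return tuple(sum(m[i][j] * v[j] for j in range(3)) for i in range(3))


def camera_to_base(p_optical, mount_xyz, mount_rpy):
    """Express a camera point in the robot base frame.

    mount_xyz, mount_rpy: the camera body's pose in the base frame, i.e. the
    extrinsic calibration (any values used here are hypothetical).
    """
    p_body = mat_vec(OPTICAL_TO_BODY, p_optical)
    rotated = mat_vec(rpy_to_matrix(*mount_rpy), p_body)
    return tuple(rotated[i] + mount_xyz[i] for i in range(3))
```

For a camera mounted 0.3 m ahead of and 0.4 m above the base origin with no tilt, a point 2 m down the optical axis lands at (2.3, 0, 0.4) in the base frame. A small error in the mount angles shifts far points the most, which is why drifted extrinsics show up as obstacles "floating" in the costmap.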

Can you use multiple RGB-D cameras on one robot?

Absolutely. Multi-camera setups are common for full 360-degree coverage. The catch? Cameras that project IR at the same moment can interfere with each other. Fix it with hardware sync cables or by timing their emitters to fire one after another.
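Staggering the emitters amounts to giving each camera its own time slot within the frame period. A sketch of that schedule, assuming the hardware sync lets each camera fire with a fixed delay after a shared trigger; the exposure and guard times are hypothetical.

```python
def emitter_schedule(num_cameras, fps, exposure_s, guard_s=0.0005):
    """Start offsets (seconds into each frame) that keep IR emitters from overlapping.

    Each camera gets an equal slice of the frame period; its exposure plus a
    guard interval must fit inside that slice.
    """
    period_s = 1.0 / fps
    slot_s = period_s / num_cameras
    if exposure_s + guard_s > slot_s:
        raise ValueError(
            f"{num_cameras} cameras need {exposure_s + guard_s:.4f} s each, "
            f"but only {slot_s:.4f} s slots fit in one frame; lower fps or exposure")
    return [i * slot_s for i in range(num_cameras)]
```

Three cameras at 30 fps with 5 ms exposures fit comfortably (about 11 ms per slot), while four cameras at 60 fps do not, which is the trade-off between camera count, frame rate, and exposure.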

What bandwidth interfaces are required?

RGB-D streams pump out tons of data, so you'll need USB 3.0 or Gigabit Ethernet (GigE) to keep up. USB 2.0 just can't handle high-res color and depth at solid frame rates like 30 fps or more.
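A quick back-of-the-envelope calculation shows why. A sketch for uncompressed streams (compressed or subsampled color formats need less):

```python
def stream_mbps(width, height, bytes_per_pixel, fps):
    """Raw (uncompressed) video stream rate in megabits per second."""
    return width * height * bytes_per_pixel * 8 * fps / 1e6


color = stream_mbps(640, 480, 3, 30)   # 24-bit RGB -> 221.184
depth = stream_mbps(640, 480, 2, 30)   # 16-bit depth -> 147.456
print(f"total: {color + depth:.1f} Mbit/s")
```

Even at a modest 640×480 and 30 fps, color plus depth adds up to roughly 369 Mbit/s, already more than USB 2.0 delivers in practice, since its 480 Mbit/s figure is a nominal signaling rate.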

How does the minimum-range "blind spot" affect AGV safety?

Every depth camera has a minimum sensing range, often around 20 cm; anything nearer is invisible to it. Smart engineers mount the camera farther back in the chassis or pair it with ultrasonic sensors or bumpers to cover that close-in zone.
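The size of that close-in gap is easy to estimate. A sketch under simple assumptions (flat floor, pinhole camera, camera looking along the robot's heading); the numbers in the tests are hypothetical.

```python
import math


def blind_zone_ahead_of_bumper(min_range_m, setback_m):
    """Distance in front of the bumper the camera cannot measure, when the
    camera sits setback_m behind the bumper and looks straight ahead."""
    return max(0.0, min_range_m - setback_m)


def nearest_visible_floor(height_m, tilt_down_deg, vfov_deg):
    """Horizontal distance from the camera to the closest floor point inside
    its vertical field of view."""
    lower_ray = math.radians(tilt_down_deg + vfov_deg / 2.0)
    if lower_ray >= math.pi / 2:
        return 0.0                   # the view already reaches straight down
    if lower_ray <= 0:
        return math.inf              # the lowest ray never meets the floor
    return height_m / math.tan(lower_ray)
```

Recessing a camera with a 20 cm minimum range by 15 cm leaves only a 5 cm gap at the bumper, but a camera mounted high and tilted too little may still miss low objects close to the robot, which is where ultrasonic sensors or bumpers come in.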

Are RGB-D cameras durable enough for industrial environments?

Consumer sensors can fail from vibration or dust. Industrial RGB-D cameras come with IP65/IP67 enclosures, locking connectors (for GMSL or Ethernet links, for example), and rugged mounts built for warehouse AGVs and forklifts.

Can RGB-D replace safety scanners (Safety LiDAR)?

Not typically, at least not for PL d / Category 3 safety certification. AI vision keeps getting better, but certified safety LiDARs are predictable and fail-safe. RGB-D handles navigation and general obstacle avoidance, while the safety LiDAR is your last-resort emergency brake.