Point Cloud Processing

Unlock 3D navigation for your autonomous fleet. Turn raw LiDAR and depth data into sharp, drivable 3D maps so AGVs spot obstacles, recognize items, and thrive in busy, changing spaces.

Core Concepts

Voxelization

Voxelization downsamples giant point clouds into 3D cube grids (voxels). It slashes compute needs while keeping key shapes intact for smooth navigation.

Segmentation

Smartly grouping points into distinct clusters. For AGVs, it's crucial to tell the "drivable floor" apart from walls, pallets, and moving obstacles.
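As a rough illustration, here is a minimal Euclidean clustering sketch in plain Python. Points that can be chained together through neighbors closer than `radius` end up in the same cluster. The function name, `radius`, and `min_size` are illustrative choices, not a specific library's API, and the brute-force neighbor search only suits small clouds.

```python
import math
from collections import deque


def euclidean_clusters(points, radius, min_size=1):
    """Group 3D points into clusters, returned as sorted index lists.

    Two points share a cluster if a chain of neighbors, each closer than
    `radius`, connects them. Clusters smaller than `min_size` are dropped
    (e.g. isolated noise points).
    """
    unvisited = set(range(len(points)))
    clusters = []
    while unvisited:
        seed = unvisited.pop()
        queue = deque([seed])
        cluster = [seed]
        while queue:
            i = queue.popleft()
            # Brute-force O(n) neighbor search; a KD-tree would speed this up.
            neighbors = [j for j in unvisited
                         if math.dist(points[i], points[j]) < radius]
            for j in neighbors:
                unvisited.remove(j)
                queue.append(j)
                cluster.append(j)
        if len(cluster) >= min_size:
            clusters.append(sorted(cluster))
    return clusters


# Two separate objects: a short row of points and a pair far away.
pts = [(0.0, 0.0, 0.0), (0.1, 0.0, 0.0), (0.2, 0.0, 0.0),
       (5.0, 5.0, 0.0), (5.1, 5.0, 0.0)]
print(sorted(euclidean_clusters(pts, radius=0.15)))  # → [[0, 1, 2], [3, 4]]
```

In a real AGV pipeline this step usually runs after the floor points have been removed, so each remaining cluster is a candidate obstacle.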

Registration (ICP)

Iterative Closest Point algorithms line up multiple scans into one unified coordinate system. This lets the robot build a reliable map as it roams the warehouse.
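To show the core ICP loop (match each point to its nearest neighbor, solve for the best rigid motion, repeat), here is a minimal point-to-point ICP sketch. It is deliberately simplified to 2D, where the best rotation has a closed form via `atan2`; production systems typically work in 3D, use an SVD-based solver, and find matches with a KD-tree. All names here are hypothetical.

```python
import math


def transform(p, theta, tx, ty):
    """Rotate a 2D point by theta (radians), then translate by (tx, ty)."""
    c, s = math.cos(theta), math.sin(theta)
    return (c * p[0] - s * p[1] + tx, s * p[0] + c * p[1] + ty)


def best_rigid_2d(src, dst):
    """Least-squares rotation + translation mapping src[i] onto dst[i]."""
    n = len(src)
    sx = sum(p[0] for p in src) / n
    sy = sum(p[1] for p in src) / n
    dx = sum(q[0] for q in dst) / n
    dy = sum(q[1] for q in dst) / n
    dot = cross = 0.0
    for (px, py), (qx, qy) in zip(src, dst):
        # Work with centroid-centered coordinates.
        px, py, qx, qy = px - sx, py - sy, qx - dx, qy - dy
        dot += px * qx + py * qy
        cross += px * qy - py * qx
    theta = math.atan2(cross, dot)
    c, s = math.cos(theta), math.sin(theta)
    # Translation moves the rotated source centroid onto the target centroid.
    return theta, dx - (c * sx - s * sy), dy - (s * sx + c * sy)


def icp_2d(source, target, iterations=20):
    """Point-to-point ICP: returns (theta, tx, ty) aligning source to target."""
    theta, tx, ty = 0.0, 0.0, 0.0
    for _ in range(iterations):
        moved = [transform(p, theta, tx, ty) for p in source]
        # Correspondence step: brute-force nearest target point.
        matches = [min(target, key=lambda q: math.dist(p, q)) for p in moved]
        dtheta, dtx, dty = best_rigid_2d(moved, matches)
        # Compose the incremental update with the running estimate.
        c, s = math.cos(dtheta), math.sin(dtheta)
        tx, ty = c * tx - s * ty + dtx, s * tx + c * ty + dty
        theta += dtheta
    return theta, tx, ty


# An L-shaped "map" and a live scan offset by a small pose error.
target = [(float(x), 0.0) for x in range(10)] + [(0.0, float(y)) for y in range(1, 6)]
source = [transform(p, 0.03, 0.1, -0.05) for p in target]
theta, tx, ty = icp_2d(source, target)
print(round(theta, 4))  # → -0.03
```

Because the pose error here is small, the very first nearest-neighbor matches are already correct and ICP converges in one step; with larger errors it needs more iterations and a decent initial guess.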

Noise Filtering

Wiping out outliers and those tricky "ghost points" from dust, shiny surfaces, or sensor glitches. Clean data keeps the AGV from hitting the brakes on fake obstacles.
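One common way to wipe out ghost points is statistical outlier removal: compute each point's mean distance to its `k` nearest neighbors and drop points far above the cloud-wide average. The sketch below is a brute-force version; `k` and `std_ratio` are assumed tuning values, and the function name is hypothetical.

```python
import math
import statistics


def remove_statistical_outliers(points, k=3, std_ratio=1.0):
    """Drop points whose mean distance to their k nearest neighbors is more
    than `std_ratio` standard deviations above the cloud-wide average."""
    mean_dists = []
    for i, p in enumerate(points):
        # Brute-force distances to every other point, nearest first.
        dists = sorted(math.dist(p, q) for j, q in enumerate(points) if j != i)
        mean_dists.append(sum(dists[:k]) / k)
    mu = statistics.mean(mean_dists)
    sigma = statistics.pstdev(mean_dists)
    threshold = mu + std_ratio * sigma
    return [p for p, d in zip(points, mean_dists) if d <= threshold]


# A small patch of floor plus one "ghost" return floating in mid-air.
floor = [(x * 0.1, y * 0.1, 0.0) for x in range(3) for y in range(3)]
cloud = floor + [(5.0, 5.0, 5.0)]
print(len(remove_statistical_outliers(cloud)))  # → 9
```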

Feature Extraction

Spotting geometric shapes like planes, cylinders, or edges in the point cloud. This helps robots recognize key landmarks, like racking columns or charging stations.
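Plane detection is the simplest case of geometric feature extraction. Below is a minimal RANSAC plane-fitting sketch: repeatedly fit a plane through three random points and keep the one supported by the most points within `threshold` meters. The threshold, iteration count, and fixed seed are assumed values for illustration.

```python
import math
import random


def plane_from_points(p1, p2, p3):
    """Return (a, b, c, d) with a unit normal for ax + by + cz + d = 0,
    or None if the three points are (nearly) collinear."""
    u = [p2[i] - p1[i] for i in range(3)]
    v = [p3[i] - p1[i] for i in range(3)]
    n = (u[1] * v[2] - u[2] * v[1],
         u[2] * v[0] - u[0] * v[2],
         u[0] * v[1] - u[1] * v[0])
    norm = math.sqrt(sum(c * c for c in n))
    if norm < 1e-9:
        return None
    a, b, c = (x / norm for x in n)
    return a, b, c, -(a * p1[0] + b * p1[1] + c * p1[2])


def ransac_plane(points, threshold=0.02, iterations=200, seed=0):
    """Find the plane with the most points within `threshold` meters."""
    rng = random.Random(seed)  # fixed seed for reproducible results
    best_plane, best_inliers = None, []
    for _ in range(iterations):
        plane = plane_from_points(*rng.sample(points, 3))
        if plane is None:
            continue
        a, b, c, d = plane
        inliers = [p for p in points
                   if abs(a * p[0] + b * p[1] + c * p[2] + d) <= threshold]
        if len(inliers) > len(best_inliers):
            best_plane, best_inliers = plane, inliers
    return best_plane, best_inliers


# A flat 5 x 5 floor patch plus a vertical "racking column" of points.
floor = [(x * 0.5, y * 0.5, 0.0) for x in range(5) for y in range(5)]
column = [(1.0, 1.0, 0.2 * k) for k in range(1, 6)]
plane, inliers = ransac_plane(floor + column)
print(len(inliers), plane)
```

Cylinders and edges follow the same sample-and-score idea with a different shape model.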

KD-Trees

A smart space-partitioning structure that organizes points efficiently. It supercharges nearest-neighbor searches, which are vital for real-time collision avoidance.
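Here is a minimal 3D KD-tree sketch: build it by splitting at the median along x, y, z in turn, then answer nearest-neighbor queries while pruning branches that cannot contain a closer point. The class and function names are hypothetical; libraries such as PCL and Open3D ship optimized versions.

```python
import math


class KDNode:
    def __init__(self, point, axis, left, right):
        self.point, self.axis = point, axis
        self.left, self.right = left, right


def build_kdtree(points, depth=0):
    """Recursively split the points at the median along x, y, z in turn."""
    if not points:
        return None
    axis = depth % 3
    pts = sorted(points, key=lambda p: p[axis])
    mid = len(pts) // 2
    return KDNode(pts[mid], axis,
                  build_kdtree(pts[:mid], depth + 1),
                  build_kdtree(pts[mid + 1:], depth + 1))


def nearest_neighbor(node, target, best=None):
    """Return (point, distance) of the stored point closest to `target`."""
    if node is None:
        return best
    d = math.dist(node.point, target)
    if best is None or d < best[1]:
        best = (node.point, d)
    diff = target[node.axis] - node.point[node.axis]
    near, far = (node.left, node.right) if diff < 0 else (node.right, node.left)
    best = nearest_neighbor(near, target, best)
    # The far side can only hold a closer point if the splitting plane
    # is nearer than the best match found so far.
    if abs(diff) < best[1]:
        best = nearest_neighbor(far, target, best)
    return best


# Deterministic pseudo-scattered cloud of 200 points.
cloud = [((i * 37) % 101 / 10, (i * 53) % 97 / 10, (i * 29) % 89 / 10)
         for i in range(200)]
tree = build_kdtree(cloud)
print(nearest_neighbor(tree, (5.0, 5.0, 1.0)))
```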

How It Works

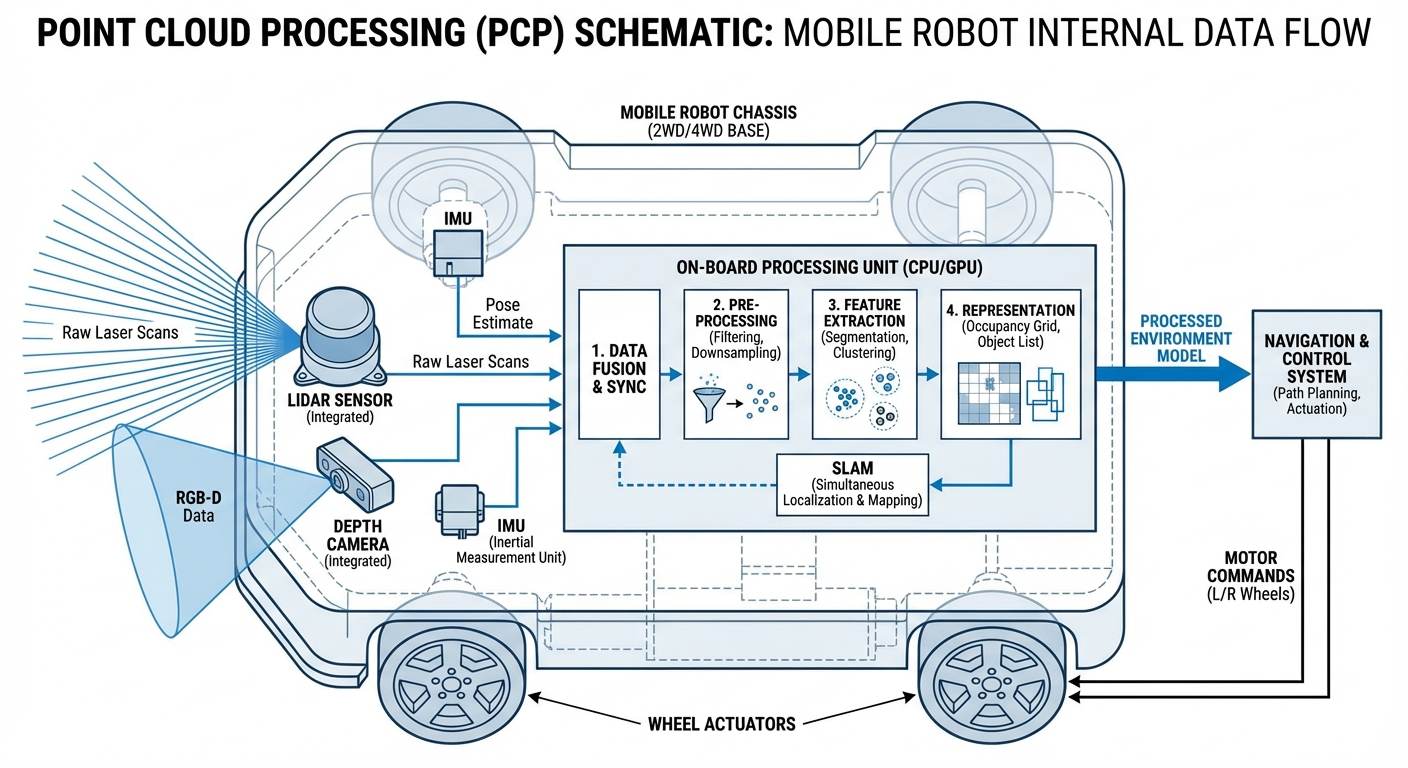

Point cloud processing kicks off with data capture from LiDAR sensors or stereo cameras. A LiDAR fires laser pulses and times their echoes, while a stereo camera compares two images taken from slightly offset viewpoints; either way, the sensor measures the distance to every surface around it, producing up to millions of X, Y, Z points per second.
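A LiDAR natively reports each return as a range plus beam angles, and turning those into X, Y, Z coordinates is plain trigonometry. The sketch below assumes azimuth is measured counter-clockwise from +X and elevation up from the horizontal plane, and treats a non-positive range as "no echo"; real sensor drivers define their own conventions, so these are assumptions.

```python
import math


def polar_to_xyz(range_m, azimuth_deg, elevation_deg):
    """Convert one LiDAR return to sensor-frame X, Y, Z (assumed convention:
    azimuth counter-clockwise from +X, elevation up from the horizontal)."""
    az = math.radians(azimuth_deg)
    el = math.radians(elevation_deg)
    horizontal = range_m * math.cos(el)
    return (horizontal * math.cos(az),
            horizontal * math.sin(az),
            range_m * math.sin(el))


def returns_to_cloud(returns):
    """Convert (range, azimuth, elevation) tuples, skipping non-positive
    ranges, which this sketch treats as 'no echo'."""
    return [polar_to_xyz(r, az, el) for r, az, el in returns if r > 0]


scan = [(10.0, 0.0, 0.0), (2.0, 90.0, 0.0), (0.0, 45.0, 2.0), (1.0, 0.0, 90.0)]
cloud = returns_to_cloud(scan)
print(len(cloud))  # → 3
```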

Next up, the raw data gets preprocessed. We downsample it to lighten the compute load and filter out noise. Then segmentation algorithms separate the ground plane from obstacles, leaving clean data ready for action.
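The simplest possible ground/obstacle split just looks at height. This sketch assumes the cloud is already transformed into a robot frame with a flat floor at `floor_z`; the `tolerance` and `max_height` values are hypothetical, and sloped floors need the smarter methods described further down.

```python
def split_ground(points, floor_z=0.0, tolerance=0.05, max_height=2.5):
    """Split points into (ground, obstacles) by height alone.

    Assumes a flat floor at z = floor_z in the robot frame. Points more
    than `max_height` above the floor (ceiling, lights) are dropped; points
    well below the floor (e.g. a dock edge) are kept as obstacles.
    """
    ground, obstacles = [], []
    for p in points:
        height = p[2] - floor_z
        if abs(height) <= tolerance:
            ground.append(p)
        elif height <= max_height:
            obstacles.append(p)
    return ground, obstacles


cloud = [(1.0, 0.0, 0.01), (2.0, 0.5, -0.03), (1.5, 0.2, 0.6), (3.0, 0.0, 4.0)]
ground, obstacles = split_ground(cloud)
print(len(ground), len(obstacles))  # → 2 1
```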

Finally, the polished point cloud powers localization and navigation. Matching live scans against a stored map pins down the AGV's position to within a few centimeters, enabling safe path planning around fixed and moving objects.

Real-World Applications

High-Bay Warehouse Logistics

AGVs tap into point clouds to spot pallet pockets way up at 10+ meters. Pinpointing the pallet's exact plane and angle lets forklifts grab loads safely—no hands needed.

Outdoor Navigation

Unlike flat 2D laser scanners, 3D point cloud processing lets mobile robots handle bumpy terrain, spot curbs, and dodge overhanging stuff like tree branches in outdoor yards.

Automated Trailer Loading

Robots scan inside semi-trailers to figure out available space. Point cloud data helps calculate the best pallet spots to max out the fill rate.

Dynamic Safety Zones

Forget fixed safety fields—point cloud processing brings "volumetric safety." Robots can tell a human worker (time to stop) from a dangling curtain or dust speck (keep going).
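A volumetric protective field can be as simple as a 3D box in the robot frame, combined with a minimum point count so that a few stray dust returns do not trigger a stop. This sketch only covers that dust-speck case; telling a person from a dangling curtain additionally needs clustering and classification. The zone dimensions and `min_points` are hypothetical values.

```python
def in_box(p, box):
    """True if point p lies inside an axis-aligned box ((xmin, xmax), ...)."""
    (xmin, xmax), (ymin, ymax), (zmin, zmax) = box
    return xmin <= p[0] <= xmax and ymin <= p[1] <= ymax and zmin <= p[2] <= zmax


def safety_decision(points, zone, min_points=10):
    """Return 'stop' if at least `min_points` points fall inside the 3D
    protective zone, else 'continue' (sparse hits are treated as noise)."""
    hits = sum(1 for p in points if in_box(p, zone))
    return "stop" if hits >= min_points else "continue"


# Hypothetical zone: 1.5 m ahead, 1 m wide, from 5 cm above the floor to 2 m.
ZONE = ((0.0, 1.5), (-0.5, 0.5), (0.05, 2.0))
dust = [(0.8, 0.1, 1.2), (1.1, -0.2, 0.4)]
person = [(1.0, 0.0, 0.1 + 0.03 * i) for i in range(50)]
print(safety_decision(dust, ZONE), safety_decision(person, ZONE))  # → continue stop
```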

Frequently Asked Questions

What’s the difference between 2D LiDAR and 3D point cloud processing?

2D LiDAR scans only a single horizontal slice, giving a flat map. 3D point cloud processing uses sensors that capture multiple layers or a full 3D field of view, building a true 3D model. This lets AGVs detect obstacles below or above the scan plane, like low objects on the floor or overhanging tabletops and shelves that a 2D scanner would miss.

Does point cloud processing need a GPU on the robot?

For high-res, real-time work, an embedded GPU module such as NVIDIA Jetson is a game-changer for parallel tasks like filtering and segmentation. But basic operations on downsampled, voxelized data can run fine on beefy multi-core CPUs in standard industrial PCs.

How does the system handle transparent or reflective surfaces?

Transparent materials like glass and highly reflective surfaces like mirrors and polished metal trip up LiDAR, creating "holes" or stray points. Smart pipelines use intensity filters and multi-echo data to catch these, or fuse in ultrasonic (sonar) sensors, which rely on sound rather than light.

What’s voxel grid filtering, and why use it?

Voxel grid filtering downsamples a cloud by chopping space into 3D cubes (voxels) and replacing all points inside each voxel with their centroid. It slashes data size (for example, from 100,000 to 5,000 points) while keeping the environment's shape intact, making real-time navigation possible.
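The centroid-per-voxel idea fits in a few lines: compute each point's integer voxel index, bucket the points, and average each bucket. This is a minimal sketch with a hypothetical function name; PCL and Open3D provide optimized equivalents.

```python
import math
from collections import defaultdict


def voxel_grid_filter(points, voxel_size):
    """Replace all points in the same cubic voxel with their centroid.
    Output order follows the first point seen in each voxel."""
    voxels = defaultdict(list)
    for p in points:
        # Integer voxel index along each axis.
        key = tuple(math.floor(c / voxel_size) for c in p)
        voxels[key].append(p)
    return [tuple(sum(axis) / len(members) for axis in zip(*members))
            for members in voxels.values()]


cloud = [(0.01, 0.01, 0.01), (0.03, 0.03, 0.03), (0.15, 0.0, 0.0)]
print(len(voxel_grid_filter(cloud, voxel_size=0.1)))  # → 2
```

The voxel size is the main knob: larger voxels mean fewer points and faster processing, but small features such as pallet edges start to blur.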

Can point cloud data be used for semantic understanding?

Yes. Deep learning models such as PointNet work directly on point clouds, letting robots not just detect objects but classify them too. A robot can tell a "human," "forklift," or "wall" apart and apply class-specific rules, like slowing down or stopping for people while simply planning around walls.

What software libraries are commonly used for this?

The Point Cloud Library (PCL) is the go-to open-source C++ library for 2D/3D image and point cloud processing. Open3D is rising fast thanks to its simple, Python-friendly API. ROS (Robot Operating System) integrates these tools too, for example through the pcl_ros package.

How much storage does a point cloud map need?

Raw point clouds are data hogs: a single 3D LiDAR can stream tens of megabytes per second. For maps, we convert them to compact formats like OctoMap octrees or keyframe scans. Once optimized, a 10,000 m² warehouse map might take just a few hundred MB on the robot's drive.

How does latency affect high-speed AGVs?

Latency matters big time. With a 200 ms processing delay at 2 m/s, the robot travels 0.4 m before it even starts reacting. Engineers counter this with predictive control that compensates for known delays, or with FPGAs for near-instant safety checks.
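The latency arithmetic generalizes to distance = speed × delay, and adding braking gives a rough stopping distance. The sketch assumes constant deceleration (braking distance v²/2a), and the 1.0 m/s² value in the example is hypothetical, not a figure for any particular AGV.

```python
def distance_before_reacting(speed_mps, latency_s):
    """Distance covered while the perception pipeline is still processing."""
    return speed_mps * latency_s


def stopping_distance(speed_mps, latency_s, decel_mps2):
    """Latency travel plus braking distance v^2 / (2a), assuming constant
    deceleration once the robot reacts."""
    return (distance_before_reacting(speed_mps, latency_s)
            + speed_mps ** 2 / (2 * decel_mps2))


print(distance_before_reacting(2.0, 0.2))  # → 0.4
print(stopping_distance(2.0, 0.2, decel_mps2=1.0))
```

Under these assumptions braking dominates at 2 m/s, but every 100 ms of latency still adds 0.2 m that the safety field has to cover.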

What happens if the environment changes drastically?

This is a classic challenge for long-term localization: the live scans gradually stop matching the stored map, which can cause map drift or mislocalization. Point cloud localization handles small shifts like moved boxes fine. For big changes like rearranged racking, robots use continuous mapping, or "long-term SLAM," to update maps on the fly.

Is it better to use RGB-D cameras or LiDAR?

LiDAR shines with long range (100 m+ on many models) and accuracy in any lighting, making it ideal for main navigation. RGB-D cameras give colorful, dense clouds but typically top out around 10 m and falter in bright sun. Top AGVs fuse both for the best of both worlds.

How is ground segmentation performed on slopes or ramps?

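Fitting a single plane with RANSAC flops on ramps, because the drivable surface is no longer one flat plane. Smarter methods estimate surface normals or check connectivity between neighboring points. They track the angle change between points: a gradual change means ramp or floor, while a steep one (near 90°) screams obstacle.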
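The angle-based idea can be sketched along a single scan direction: walk outward from the sensor and compare each sample with the last accepted ground sample. A gentle rise stays ground, a steep jump is an obstacle. This simplified version works on (horizontal range, height) pairs, assumes the closest sample lies on the floor, and uses a hypothetical 15° slope limit; it is not the surface-normal variant.

```python
import math


def label_ray(samples, max_slope_deg=15.0):
    """Label (horizontal_range, height) samples, sorted by range, as
    'ground' or 'obstacle'. Sudden drops are treated like obstacles too."""
    labels = ["ground"]  # assumption: the closest sample is on the floor
    last_r, last_z = samples[0]
    for r, z in samples[1:]:
        dr = r - last_r
        # Slope relative to the last accepted ground sample.
        slope = math.degrees(math.atan2(abs(z - last_z), dr)) if dr > 0 else 90.0
        if slope <= max_slope_deg:
            labels.append("ground")
            last_r, last_z = r, z
        else:
            labels.append("obstacle")
    return labels


# Flat floor, then a 10-degree ramp, then the face of a box.
ray = [(1.0, 0.0), (1.5, 0.0), (2.0, 0.088), (2.5, 0.176), (2.6, 0.6), (2.6, 1.0)]
print(label_ray(ray))
# → ['ground', 'ground', 'ground', 'ground', 'obstacle', 'obstacle']
```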

Does point cloud processing increase battery consumption?

Yes. 3D LiDAR guzzles more power than 2D, and crunching 3D data drains batteries faster. But the smoother paths, fewer stops, and higher speeds that 3D perception enables often offset the extra power draw.