ORB-SLAM

A flexible, accurate visual SLAM system that lets AGVs handle tough spaces without pricey LiDAR, delivering precise localization and sparse mapping from ordinary cameras.

Core Concepts

Feature Extraction

Relies on ORB (Oriented FAST and Rotated BRIEF) features to detect distinctive image points. These binary descriptors are fast to compute and match, rotation-invariant, and, thanks to an image pyramid, robust to changes in scale.
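To make the binary-descriptor idea concrete, here is a minimal Python sketch of brute-force matching by Hamming distance (the number of differing bits). It assumes descriptors are stored as plain Python ints (a standard ORB descriptor is 256 bits); the `ratio` and `max_dist` thresholds are illustrative assumptions, not ORB-SLAM's tuned values, and ORB-SLAM itself usually narrows the search by projection or bag-of-words rather than comparing every pair.

```python
def hamming(a: int, b: int) -> int:
    """Number of differing bits between two binary descriptors stored as ints."""
    return bin(a ^ b).count("1")


def match_descriptors(query, train, ratio=0.8, max_dist=50):
    """Brute-force nearest-neighbour matching with a ratio test.

    query, train: lists of descriptors as Python ints (e.g. 256-bit ORB).
    Returns a list of (query_index, train_index, distance) tuples.
    """
    matches = []
    for qi, qd in enumerate(query):
        # Distances to every candidate, sorted from best to worst.
        dists = sorted((hamming(qd, td), ti) for ti, td in enumerate(train))
        if not dists:
            continue
        best_dist, best_idx = dists[0]
        if best_dist > max_dist:
            continue  # too dissimilar to be the same physical point
        if len(dists) > 1 and best_dist >= ratio * dists[1][0]:
            continue  # ambiguous: the second-best candidate is almost as close
        matches.append((qi, best_idx, best_dist))
    return matches
```

For example, `match_descriptors([0b10110000], [0b10110001, 0b01001111])` pairs the query with the first candidate, which differs by a single bit.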

Local Mapping

Builds a map of the robot's immediate surroundings by triangulating ORB features from new keyframes. Runs in parallel with tracking to keep real-time performance.
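Triangulation recovers a 3D point from two viewing rays that observe the same feature from different keyframes. The sketch below uses the simple midpoint method (the midpoint of the shortest segment joining the two rays), chosen for brevity; it is not necessarily the method ORB-SLAM's code uses. It assumes camera centres and ray directions are already expressed in world coordinates, and the `eps` parallax threshold is an illustrative assumption.

```python
from typing import Optional, Tuple

Vec3 = Tuple[float, float, float]


def dot(u: Vec3, v: Vec3) -> float:
    return u[0] * v[0] + u[1] * v[1] + u[2] * v[2]


def triangulate_midpoint(c1: Vec3, d1: Vec3, c2: Vec3, d2: Vec3,
                         eps: float = 1e-9) -> Optional[Vec3]:
    """Midpoint of the shortest segment between two viewing rays.

    c1, c2: camera centres in world coordinates.
    d1, d2: ray directions (world frame) pointing at the same feature.
    Returns None when the rays are (nearly) parallel, i.e. too little parallax.
    """
    w0 = tuple(p - q for p, q in zip(c1, c2))
    a, b, c = dot(d1, d1), dot(d1, d2), dot(d2, d2)
    d, e = dot(d1, w0), dot(d2, w0)
    denom = a * c - b * b
    if abs(denom) < eps:
        return None
    s = (b * e - c * d) / denom  # closest-point parameter along ray 1
    t = (a * e - b * d) / denom  # closest-point parameter along ray 2
    p1 = tuple(ci + s * di for ci, di in zip(c1, d1))
    p2 = tuple(ci + t * di for ci, di in zip(c2, d2))
    return tuple((x + y) / 2 for x, y in zip(p1, p2))
```

Nearly parallel rays return `None`: with too little parallax the depth is poorly constrained, which is why SLAM systems generally skip triangulating from viewpoints that are too close together.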

Loop Closure

Catches when the AGV revisits old turf. Vital for fixing odometry drift and keeping the global map solid.

Pose Estimation

Estimates the robot's full 6-DoF pose (position and orientation) by matching live features to the local map, enabling precise path tracking.
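Matching live features to the map comes down to projecting known 3D points into the current image and comparing where they land with where the matched keypoints actually are. A minimal sketch of that pinhole projection and the resulting per-point reprojection error, assuming an undistorted image and a pose `(R, t)` that maps world coordinates into the camera frame; the intrinsics used below are made-up values.

```python
from typing import Sequence, Tuple

Matrix3 = Sequence[Sequence[float]]


def project(R: Matrix3, t: Sequence[float], X: Sequence[float],
            fx: float, fy: float, cx: float, cy: float) -> Tuple[float, float]:
    """Project world point X into the image of a camera with pose (R, t).

    (R, t) maps world coordinates into the camera frame: Xc = R @ X + t.
    """
    Xc = [sum(R[i][j] * X[j] for j in range(3)) + t[i] for i in range(3)]
    if Xc[2] <= 0:
        raise ValueError("point is behind the camera")
    return fx * Xc[0] / Xc[2] + cx, fy * Xc[1] / Xc[2] + cy


def reprojection_error(R: Matrix3, t: Sequence[float], X: Sequence[float],
                       observed: Tuple[float, float],
                       intrinsics: Tuple[float, float, float, float]) -> float:
    """Pixel distance between the projected map point and its matched keypoint."""
    u, v = project(R, t, X, *intrinsics)
    return ((u - observed[0]) ** 2 + (v - observed[1]) ** 2) ** 0.5
```

With an identity pose and `fx = fy = 500`, `cx = 320`, `cy = 240`, the point `(0.2, -0.1, 2.0)` projects to pixel `(370, 215)`. The tracker searches for the pose that keeps these errors small across all matched points.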

Bundle Adjustment

Bundle adjustment, implemented with the g2o graph-optimization library, minimizes reprojection error, refining camera poses and 3D points together for maximum precision.
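Written out, the quantity bundle adjustment minimizes looks roughly like the cost below. Here $x_{ij}$ is the observed keypoint of map point $X_j$ in keyframe $i$, $(R_i, t_i)$ is that keyframe's world-to-camera pose, $\pi$ is the pinhole projection, $\Sigma_{ij}$ is a per-observation covariance, and $\rho$ is a robust kernel (ORB-SLAM uses a Huber kernel) that limits the influence of bad matches. This is the standard textbook formulation, not a transcription of ORB-SLAM's code.

```latex
\min_{\{R_i,\, t_i\},\, \{X_j\}} \; \sum_{(i,j)} \rho\!\left( \left\lVert x_{ij} - \pi\!\left(R_i X_j + t_i\right) \right\rVert^{2}_{\Sigma_{ij}} \right),
\qquad
\lVert e \rVert^{2}_{\Sigma} = e^{\top} \Sigma^{-1} e,
\qquad
\pi\!\left([X, Y, Z]^{\top}\right) = \begin{bmatrix} f_x X / Z + c_x \\ f_y Y / Z + c_y \end{bmatrix}
```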

Keyframe Selection

Selectively picks which frames to store as keyframes and discards redundant ones, keeping memory use low during long runs.
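A minimal sketch of the kind of rules involved: insert a keyframe only when tracking is healthy, the view has moved far enough from the reference keyframe, and the mapping thread can take the work; later, cull keyframes whose map points are mostly seen by other keyframes. The `TrackingState` fields and all thresholds are hypothetical, loosely inspired by the heuristics described for ORB-SLAM rather than copied from its code.

```python
from dataclasses import dataclass


@dataclass
class TrackingState:
    """Hypothetical summary of the tracker's state for the current frame."""
    frames_since_last_keyframe: int
    tracked_points: int       # map points matched in the current frame
    ref_keyframe_points: int  # map points tracked by the reference keyframe
    local_mapping_idle: bool  # can the mapping thread accept a new keyframe?


def need_new_keyframe(s: TrackingState, min_points: int = 50,
                      overlap_ratio: float = 0.9, max_gap: int = 20) -> bool:
    """Decide whether the current frame should become a keyframe."""
    if s.tracked_points < min_points:
        return False  # tracking too weak to produce a reliable keyframe
    # The view must differ enough from the reference keyframe to add information.
    view_changed = s.tracked_points < overlap_ratio * s.ref_keyframe_points
    # Insert when the mapper is free, or force it if too long has passed.
    mapper_ready = s.local_mapping_idle or s.frames_since_last_keyframe >= max_gap
    return view_changed and mapper_ready


def is_redundant(total_points: int, points_seen_by_3_others: int,
                 ratio: float = 0.9) -> bool:
    """Flag a keyframe for culling when most of its points are seen elsewhere."""
    return total_points > 0 and points_seen_by_3_others >= ratio * total_points
```

Keeping insertion generous and culling strict is what lets the map stay compact over long runs without starving tracking of nearby keyframes.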

How It Works

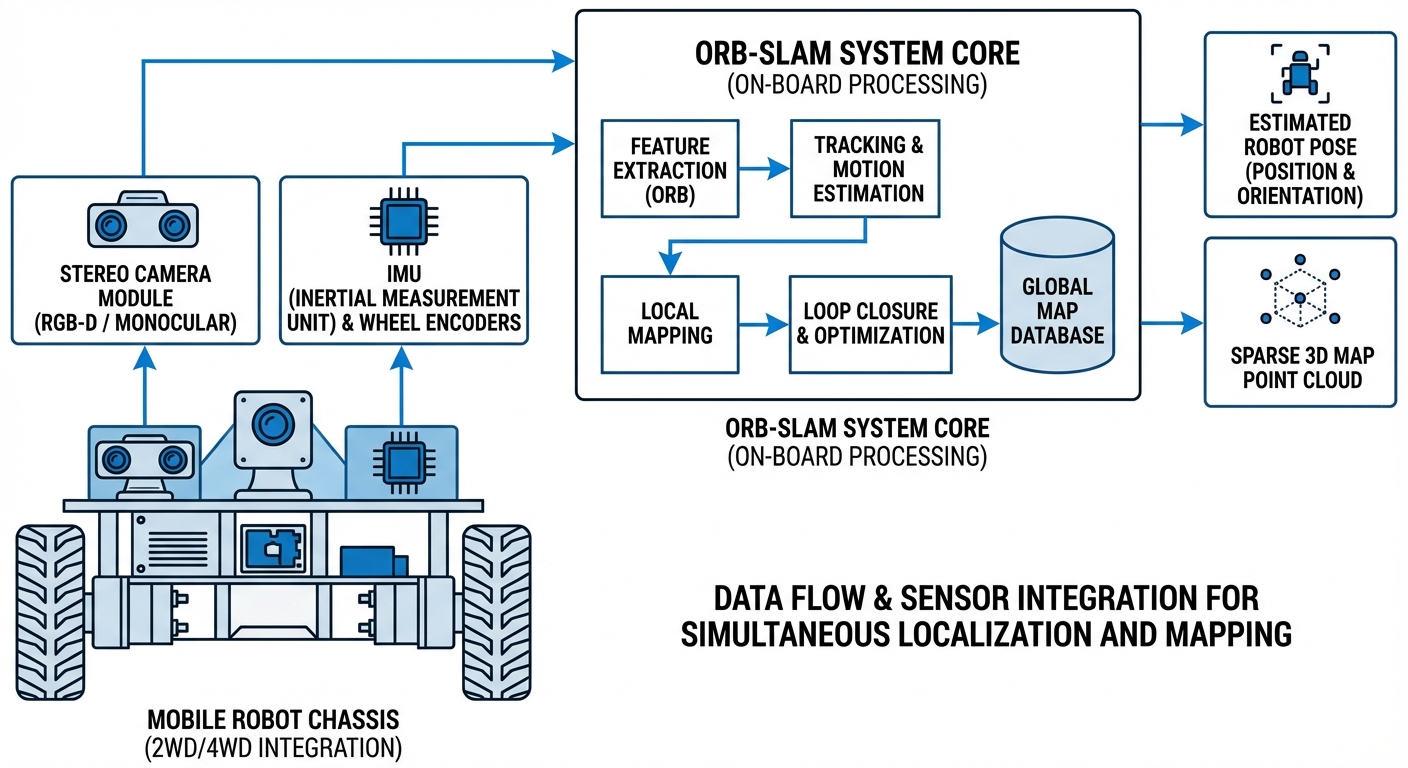

ORB-SLAM uses a multi-threaded architecture that runs tracking, local mapping, and loop closing in separate threads. At its core, it processes video from monocular, stereo, or RGB-D cameras and extracts ORB features: distinctive, corner-like points in the image, each described by a binary vector.
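The thread layout can be sketched as three stages connected by queues. This is a structural illustration only: ORB-SLAM itself is C++, its real threads share one map guarded by locks, and the hypothetical "every third frame is a keyframe" rule stands in for the real selection logic. Python threads also won't give true CPU parallelism here; the point is the hand-off pattern.

```python
import queue
import threading

STOP = None  # sentinel that shuts each downstream stage down


def tracking(frames, to_mapping: queue.Queue) -> None:
    """Tracking thread: handles every frame, hands some off as keyframes."""
    for i, frame in enumerate(frames):
        # Feature extraction and pose estimation would happen here.
        if i % 3 == 0:  # hypothetical keyframe rule: every third frame
            to_mapping.put(frame)
    to_mapping.put(STOP)


def local_mapping(from_tracking: queue.Queue, to_loop: queue.Queue, log: list) -> None:
    """Local mapping thread: integrates keyframes without blocking tracking."""
    while (kf := from_tracking.get()) is not STOP:
        log.append(("mapped", kf))
        to_loop.put(kf)
    to_loop.put(STOP)


def loop_closing(from_mapping: queue.Queue, log: list) -> None:
    """Loop closing thread: checks each new keyframe for a revisit."""
    while (kf := from_mapping.get()) is not STOP:
        log.append(("loop-checked", kf))


def run(frames):
    q_map, q_loop, log = queue.Queue(), queue.Queue(), []
    threads = [
        threading.Thread(target=tracking, args=(frames, q_map)),
        threading.Thread(target=local_mapping, args=(q_map, q_loop, log)),
        threading.Thread(target=loop_closing, args=(q_loop, log)),
    ]
    for t in threads:
        t.start()
    for t in threads:
        t.join()
    return log
```

Calling `run(list(range(7)))` sends frames 0, 3 and 6 through local mapping and then loop closing, while tracking never waits on either downstream thread because the queues are unbounded.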

As your AGV moves, the tracking thread matches these features from frame to frame to estimate the camera's position and orientation. Meanwhile, the local mapping thread builds a sparse 3D point cloud of the surroundings, adding keyframes that contribute new, useful information.
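Between frames, ORB-SLAM's tracker predicts the new pose with a constant-velocity motion model and uses that guess to narrow the feature search before refining the pose. A minimal sketch of the prediction step, simplified to planar (x, y, heading) poses; ORB-SLAM works with full 3D (SE(3)) poses, and the function names here are hypothetical.

```python
import math
from typing import Tuple

Pose2D = Tuple[float, float, float]  # (x, y, heading in radians) in the world frame


def compose(a: Pose2D, b: Pose2D) -> Pose2D:
    """Apply relative motion b, expressed in the local frame of pose a."""
    x, y, th = a
    bx, by, bth = b
    return (x + math.cos(th) * bx - math.sin(th) * by,
            y + math.sin(th) * bx + math.cos(th) * by,
            th + bth)


def relative(a: Pose2D, b: Pose2D) -> Pose2D:
    """Motion that takes pose a to pose b, expressed in a's frame."""
    dx, dy = b[0] - a[0], b[1] - a[1]
    c, s = math.cos(a[2]), math.sin(a[2])
    return (c * dx + s * dy, -s * dx + c * dy, b[2] - a[2])


def predict_constant_velocity(prev: Pose2D, curr: Pose2D) -> Pose2D:
    """Assume the next inter-frame motion equals the last one."""
    return compose(curr, relative(prev, curr))
```

A robot that moved from `(0, 0, 0)` to `(1, 0, 0)` is predicted at `(2, 0, 0)` next; heading angles are not wrapped to (-π, π] in this sketch.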

To detect when the robot returns to a familiar area, the loop closing thread uses a bag-of-words place-recognition method. Once a loop is confirmed, it corrects the map so the current position lines up with past data, removing most of the accumulated drift that often trips up wheel-encoder navigation.
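ORB-SLAM's place recognition is built on the DBoW2 library: each keyframe's ORB descriptors are quantized into visual words from a pre-trained vocabulary, and keyframes are compared with an L1-based similarity score. A minimal sketch of that score over sparse word-to-weight dictionaries, assuming the word ids and tf-idf weights have already been computed; a high score only nominates a loop candidate, which is then checked geometrically before any map correction.

```python
from typing import Dict

BowVector = Dict[int, float]  # visual-word id -> tf-idf weight


def l1_normalise(v: BowVector) -> BowVector:
    total = sum(abs(w) for w in v.values())
    return {k: w / total for k, w in v.items()} if total else {}


def bow_l1_score(v1: BowVector, v2: BowVector) -> float:
    """Similarity in [0, 1]: 1 - 0.5 * || v1/|v1| - v2/|v2| ||_1."""
    a, b = l1_normalise(v1), l1_normalise(v2)
    if not a or not b:
        return 0.0  # an image with no words matches nothing
    words = a.keys() | b.keys()
    diff = sum(abs(a.get(w, 0.0) - b.get(w, 0.0)) for w in words)
    return 1.0 - 0.5 * diff
```

Two keyframes with the same words in the same proportions score 1.0; keyframes sharing no words score 0.0.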

Real-World Applications

GPS-Denied Warehousing

Ideal for indoor forklifts and AGVs in large warehouses where GPS can't reach. ORB-SLAM can achieve centimeter-level accuracy in well-textured environments using budget-friendly cameras instead of costly laser scanners.

Hospital Service Robots

Delivery and disinfection robots rely on ORB-SLAM to navigate tight corridors and busy, changing spaces. In long, geometrically uniform hallways where laser scans look alike, visual cues such as signage, doors, and wall fixtures give it distinctive landmarks.

Drone Inspection

UAVs inspecting infrastructure inside tanks, tunnels, or under bridges rely on ORB-SLAM for stable flight and mapping when other positioning systems give out.

Last-Mile Delivery

Sidewalk robots tap into stereo ORB-SLAM to measure depths, sidestep pedestrians, and build reusable maps of urban neighborhoods for repeat delivery routes.
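For a rectified stereo pair, depth follows directly from disparity (the horizontal pixel shift of a feature between the left and right images): Z = fx · B / d, with focal length fx in pixels and baseline B in metres. A minimal sketch, with a helper showing how a disparity error of σ_d pixels becomes a depth uncertainty of about Z² / (fx · B) · σ_d; the camera numbers below are made up.

```python
def stereo_depth(disparity_px: float, fx_px: float, baseline_m: float) -> float:
    """Depth (metres) of a point seen in a rectified stereo pair: Z = fx * B / d."""
    if disparity_px <= 0:
        raise ValueError("disparity must be positive (point at infinity or a mismatch)")
    return fx_px * baseline_m / disparity_px


def depth_uncertainty(depth_m: float, fx_px: float, baseline_m: float,
                      disparity_sigma_px: float) -> float:
    """First-order depth error from a disparity error: |dZ/dd| = Z^2 / (fx * B)."""
    return depth_m ** 2 / (fx_px * baseline_m) * disparity_sigma_px
```

With `fx_px = 700` and a 0.12 m baseline, a 20 px disparity gives 4.2 m, and a 1 px disparity error shifts that by roughly 0.21 m, so distant points are far less certain than nearby ones.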

Frequently Asked Questions

What is the difference between ORB-SLAM2 and ORB-SLAM3?

ORB-SLAM3 extends ORB-SLAM2 with visual-inertial SLAM (VI-SLAM), tightly fusing IMU data with camera images for better robustness during fast motion or in low-texture scenes where cameras alone struggle. It also supports multiple maps and can merge them.

Does ORB-SLAM require a Stereo Camera or can it use Monocular?

It handles monocular, stereo, and RGB-D setups. However, monocular SLAM suffers from scale ambiguity: it recovers the map only up to an unknown scale factor, so it cannot tell real object sizes. For AGVs that need accurate metric navigation, stereo or RGB-D is the better choice because it provides true-world scale directly.
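A quick numeric check shows where the monocular scale ambiguity comes from: multiply every 3D point and the camera translation by the same factor, and every pixel stays exactly where it was. The sketch assumes a pinhole camera with no rotation and made-up intrinsics.

```python
from typing import Sequence, Tuple


def project_pinhole(X: Sequence[float], t: Sequence[float], f: float = 500.0,
                    c: Tuple[float, float] = (320.0, 240.0)) -> Tuple[float, float]:
    """Pixel coordinates of world point X for a camera offset by t (no rotation)."""
    x, y, z = (X[i] + t[i] for i in range(3))
    return f * x / z + c[0], f * y / z + c[1]


points = [(0.5, -0.2, 4.0), (-1.0, 0.3, 6.0), (0.1, 0.1, 2.5)]
t = (0.2, 0.0, 0.0)  # camera-frame offset between views (rotation omitted)
s = 3.0              # unknown global scale factor

for X in points:
    original = project_pinhole(X, t)
    scaled = project_pinhole([s * v for v in X], [s * v for v in t])
    # Identical pixels: images alone cannot tell the two worlds apart.
    assert all(abs(a - b) < 1e-9 for a, b in zip(original, scaled))
```

A stereo rig breaks this symmetry because its baseline is a known metric length that does not scale with the scene, and an RGB-D sensor measures depth in metres directly.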

How does ORB-SLAM compare to LiDAR-based SLAM (e.g., GMapping)?

LiDAR SLAM excels in geometric precision and is unaffected by lighting, but requires expensive hardware. ORB-SLAM, as a visual SLAM system, is far more affordable and captures richer appearance information about the scene, though it is more sensitive to lighting and to blank, featureless walls.

Is ORB-SLAM computationally expensive for embedded hardware?

It's efficient thanks to binary features, but still fairly CPU-hungry. Real-time operation on a Raspberry Pi is difficult; an NVIDIA Jetson, Intel NUC, or similar edge computer gives smoother AGV performance.

How does it handle dynamic environments (moving people/forklifts)?

Classic ORB-SLAM assumes a static world. Its outlier rejection copes with a few small moving objects, but heavy traffic, such as a packed warehouse aisle, can disrupt tracking. In those cases, pairing it with dynamic object masking, which removes features on people and vehicles before tracking uses them, helps considerably.
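A minimal sketch of the masking step, assuming a separate detector (not part of ORB-SLAM) supplies bounding boxes around people and forklifts; keypoints inside a box, plus a small pixel margin, are dropped before they reach tracking. Bounding boxes stand in for per-pixel segmentation masks to keep it short, and all names are hypothetical.

```python
from typing import List, Tuple

Box = Tuple[float, float, float, float]  # (x_min, y_min, x_max, y_max) in pixels
Keypoint = Tuple[float, float]           # (u, v) pixel coordinates


def inside(p: Keypoint, box: Box, margin: float = 0.0) -> bool:
    """True if keypoint p lies within the box grown by `margin` pixels."""
    u, v = p
    x0, y0, x1, y1 = box
    return x0 - margin <= u <= x1 + margin and y0 - margin <= v <= y1 + margin


def mask_dynamic(keypoints: List[Keypoint], boxes: List[Box],
                 margin: float = 5.0) -> List[Keypoint]:
    """Drop keypoints that fall on, or just around, detected moving objects."""
    return [p for p in keypoints if not any(inside(p, b, margin) for b in boxes)]
```

The margin matters because features on an object's outline often straddle the mover and the background, so they are unreliable either way.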

What happens if the robot gets "Lost"?

ORB-SLAM includes relocalization. Lose tracking (camera blocked, lights out)? It shifts to relocalization mode. Spot a mapped area with familiar features, and it snaps the robot's pose right back onto the map.

Can I save and reuse the map generated by ORB-SLAM?

Yes. The sparse map (point cloud and keyframes) can be saved to disk, so the next day your robot can load it and relocalize as soon as it recognizes a mapped area, with no need to remap from scratch.

Is there ROS (Robot Operating System) integration?

Yes, ROS and ROS 2 wrappers exist for ORB-SLAM. They integrate into robotics stacks and publish pose and odometry topics for navigation systems such as Nav2.

What kind of camera features are ideal for ORB-SLAM?

Global shutter cameras clearly beat rolling shutter ones because they avoid motion distortion. A wide field of view (FOV) also helps by keeping features in view longer for steadier tracking.

What are the limitations in low-texture environments?

ORB-SLAM thrives on corners and other distinctive points. Plain white walls or repetitive floors leave it struggling to find enough features. Visual-inertial setups or fiducial markers such as AprilTags can help.

Is ORB-SLAM open source for commercial use?

ORB-SLAM2 and ORB-SLAM3 are released under GPLv3. Distribute software that includes them? You must release your own source under GPLv3 too. For closed-source commercial products, contact the authors about a commercial license.

How dense is the map created by ORB-SLAM?

ORB-SLAM builds sparse maps made of key feature points, not dense 3D meshes. That's great for localization, but for obstacle avoidance pair it with a depth camera or sonar, since the sparse point cloud alone isn't enough.

Ready to implement ORB-SLAM in your fleet?

Our AGV platforms arrive pre-loaded with cutting-edge visual SLAM, slashing your dev time from months to mere days.

Explore Our Robots