Optical Flow

Give your AGVs the ability to estimate speed and direction of travel just by looking. Optical flow adds a robust navigation layer that corrects wheel-slip errors and improves localization without GPS.

Core Concepts

Pixel Motion Vectors

Optical flow estimates the apparent motion of brightness patterns between two consecutive image frames, producing a velocity vector $(u, v)$ for every pixel or tracked feature.

Ego-Motion Estimation

Optical flow separates the robot's own motion from objects moving in the scene, providing accurate relative positioning without external beacons.
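When a downward-facing camera sees mostly static floor, one simple way to separate ego-motion from independently moving objects is to take a robust statistic over all flow vectors. The sketch below uses the component-wise median. This is an illustrative technique chosen here, not necessarily what any particular sensor does, and `estimate_ego_flow` is a hypothetical name.

```python
from statistics import median


def estimate_ego_flow(flow_vectors: list[tuple[float, float]]) -> tuple[float, float]:
    """Estimate the camera's own image-plane motion from many (u, v) flow vectors.

    Assumes most vectors come from the static floor, so outliers caused by
    moving objects (people, forklifts, rolling debris) are rejected by the
    component-wise median.
    """
    if not flow_vectors:
        raise ValueError("need at least one flow vector")
    us = [u for u, _ in flow_vectors]
    vs = [v for _, v in flow_vectors]
    return median(us), median(vs)


if __name__ == "__main__":
    # Static floor moving by (2, 1) px/frame, plus three vectors from a moving object.
    vectors = [(2.0, 1.0)] * 7 + [(10.0, -5.0)] * 3
    print(estimate_ego_flow(vectors))  # (2.0, 1.0)
```

The median tolerates up to half the vectors being outliers; if moving objects can fill most of the view, a consensus method such as RANSAC would be needed instead.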

Sparse vs. Dense Flow

Robots can track a set of key feature points (sparse flow, e.g. Lucas-Kanade) for fast velocity estimates, or process every pixel in the image (dense flow, e.g. Farneback) for richer detail at a higher computational cost.
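To make the sparse approach concrete, here is a minimal single-window Lucas-Kanade step in NumPy: it linearizes brightness constancy and solves a 2x2 least-squares system for one $(u, v)$. This is a sketch under simplifying assumptions (one iteration, no image pyramid, synthetic Gaussian test image); production trackers add iteration and multi-scale search. `lucas_kanade_window` and `gaussian_blob` are hypothetical names.

```python
import numpy as np


def lucas_kanade_window(frame1: np.ndarray, frame2: np.ndarray) -> tuple[float, float]:
    """Estimate one (u, v) displacement, in pixels, for the whole window.

    u is motion along columns (x), v along rows (y). Single linearized step,
    so it is only accurate for small (sub-pixel to about 1 px) motions.
    """
    f1 = frame1.astype(float)
    f2 = frame2.astype(float)
    iy, ix = np.gradient(f1)  # np.gradient returns d/d(row), then d/d(col)
    it = f2 - f1              # temporal derivative between the two frames
    # Normal equations of Ix*u + Iy*v + It = 0 over all window pixels.
    a = np.array([[np.sum(ix * ix), np.sum(ix * iy)],
                  [np.sum(ix * iy), np.sum(iy * iy)]])
    b = -np.array([np.sum(ix * it), np.sum(iy * it)])
    # Raises LinAlgError if a is singular (textureless patch or a pure edge).
    u, v = np.linalg.solve(a, b)
    return float(u), float(v)


def gaussian_blob(cx: float, cy: float, size: int = 32, sigma: float = 4.0) -> np.ndarray:
    """Synthetic test image: a smooth bright spot centred at (cx, cy)."""
    y, x = np.mgrid[0:size, 0:size]
    return np.exp(-((x - cx) ** 2 + (y - cy) ** 2) / (2 * sigma ** 2))


if __name__ == "__main__":
    frame_a = gaussian_blob(15.5, 15.5)
    frame_b = gaussian_blob(16.0, 15.2)  # true motion: u = +0.5, v = -0.3
    print(lucas_kanade_window(frame_a, frame_b))  # approximately (0.5, -0.3)
```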

Brightness Constancy

Optical flow assumes that the brightness of a point on an object stays constant as it moves between frames, which lets the algorithm locate that point in the next frame.
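Written out, brightness constancy says that a point keeps its intensity $I$ as it moves by $(\Delta x, \Delta y)$ over $\Delta t$. A first-order Taylor expansion, followed by subtracting $I(x, y, t)$ and dividing by $\Delta t$, gives the standard optical flow constraint equation, where $I_x, I_y, I_t$ are the spatial and temporal image derivatives and $(u, v)$ is the per-pixel flow:

$$
I(x, y, t) = I(x + \Delta x,\; y + \Delta y,\; t + \Delta t)
\approx I(x, y, t) + I_x \Delta x + I_y \Delta y + I_t \Delta t
$$

$$
I_x \Delta x + I_y \Delta y + I_t \Delta t \approx 0
\;\Longrightarrow\;
I_x u + I_y v + I_t = 0, \qquad u = \frac{\Delta x}{\Delta t},\; v = \frac{\Delta y}{\Delta t}
$$

This is one equation in two unknowns per pixel, which is why methods such as Lucas-Kanade pool many pixels in a window, and why a lone edge (the Aperture Problem) leaves the motion underdetermined.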

Sensor Fusion

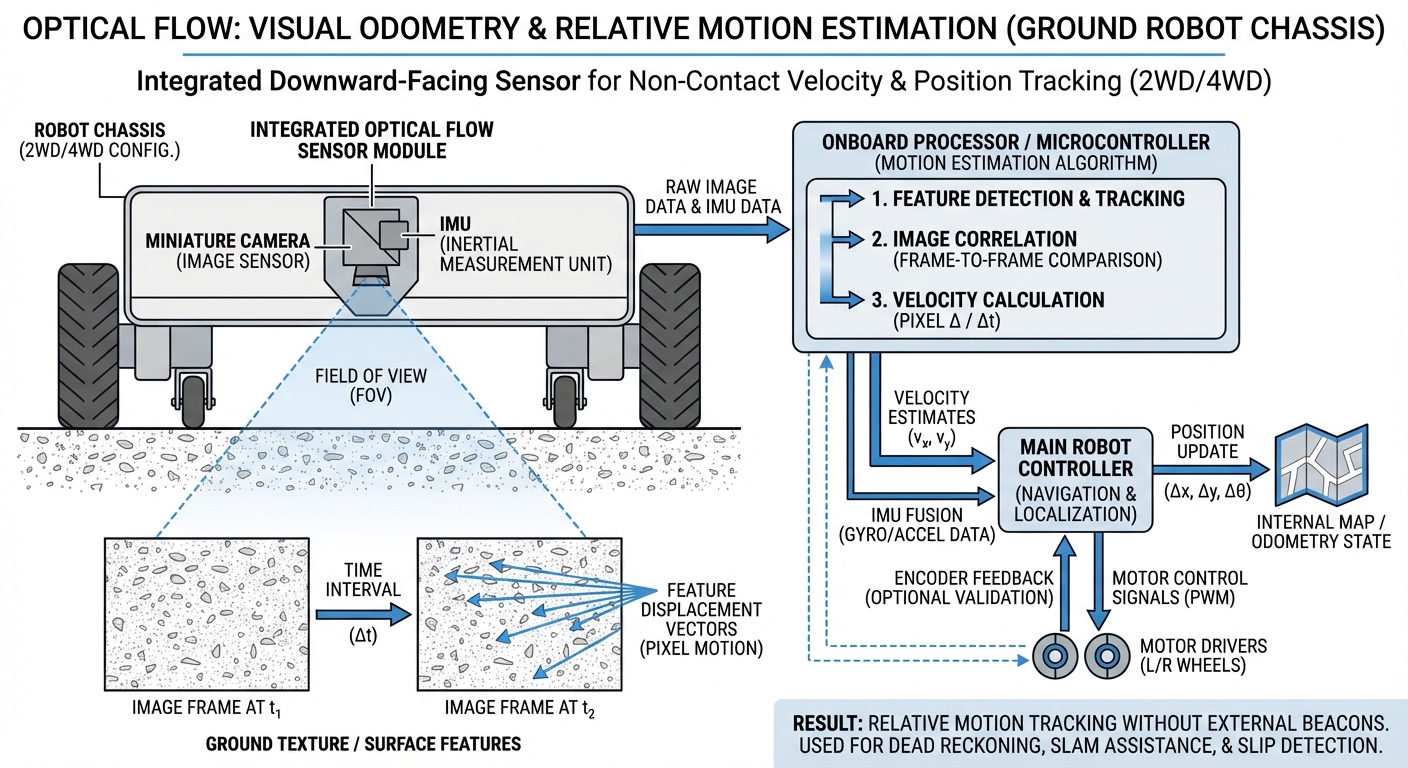

Optical flow is often fused with IMU (Inertial Measurement Unit) data to compensate for rotation, isolating the translational (forward) motion for more accurate odometry.
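A minimal sketch of gyro compensation for a downward-facing camera, under stated simplifying assumptions: small angles, flow measured near the image center, flow and gyro axes already aligned, and a sign convention in which a body rotation adds flow in the same direction as forward motion. Real sensors differ in axis and sign conventions, so treat `derotate_flow` (a hypothetical helper) as illustrative only.

```python
def derotate_flow(flow_px: float, gyro_rad_s: float, dt_s: float,
                  focal_px: float, height_m: float) -> float:
    """Return translational ground speed (m/s) along one image axis.

    Assumptions (illustrative, not a specific sensor's convention):
    - flow_px is the pixel shift measured over dt_s near the image center;
    - a body rotation of gyro_rad_s adds +gyro_rad_s * dt_s radians of apparent flow;
    - the camera looks straight down from height_m over flat ground.
    """
    measured_rad = flow_px / focal_px           # small-angle: pixels -> radians
    rotational_rad = gyro_rad_s * dt_s          # apparent flow caused by rotation alone
    translational_rad = measured_rad - rotational_rad
    return translational_rad * height_m / dt_s  # angular flow -> metres per second


if __name__ == "__main__":
    # Pure rotation: 0.5 rad/s for 10 ms with a 400 px focal length gives 2 px of flow.
    print(derotate_flow(2.0, 0.5, 0.01, 400.0, 0.2))  # about 0.0 m/s
    # Same rotation plus forward motion: 6 px measured at 0.2 m height.
    print(derotate_flow(6.0, 0.5, 0.01, 400.0, 0.2))  # about 0.2 m/s
```

Without the gyro term, the first case would be misread as forward motion, which is exactly the rotation error the fusion is meant to remove.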

Time-to-Contact (TTC)

By measuring how quickly the flow field expands (its divergence), the robot can estimate the time remaining before it reaches a surface, without needing a depth map.
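For a camera approaching a flat surface head-on, image points move away from the focus of expansion as $u = x/\tau$, $v = y/\tau$, where $\tau$ is the time to contact (distance divided by approach speed). The divergence $\partial u/\partial x + \partial v/\partial y$ is then $2/\tau$, so $\tau = 2/\mathrm{div}$. The NumPy sketch below assumes this idealized pure-approach geometry, flow in pixels per frame, and a known frame rate; `time_to_contact` is a hypothetical name.

```python
import numpy as np


def time_to_contact(u: np.ndarray, v: np.ndarray, fps: float) -> float:
    """Estimate time to contact (seconds) from a dense flow field.

    u, v: flow in pixels/frame on a regular pixel grid (rows = y, cols = x).
    Assumes pure translation toward a fronto-parallel surface, where
    div(flow) = 2 / tau and tau is measured in frames.
    """
    du_dx = np.gradient(u, axis=1)  # derivative along columns (x)
    dv_dy = np.gradient(v, axis=0)  # derivative along rows (y)
    divergence = float(np.mean(du_dx + dv_dy))  # average over the image to reduce noise
    if divergence <= 0:
        return float("inf")  # flow is not expanding: no approach detected
    tau_frames = 2.0 / divergence
    return tau_frames / fps


if __name__ == "__main__":
    # Synthetic expansion field: surface 60 frames away at 30 fps, i.e. 2 s to contact.
    y, x = np.mgrid[-20:21, -20:21].astype(float)
    tau = 60.0
    print(time_to_contact(x / tau, y / tau, fps=30.0))  # about 2.0
```

Because only the ratio of distance to speed is recovered, the robot learns how soon it will arrive without knowing either quantity separately.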

How It Works

Optical flow sensors act as "visual odometers." Wheel encoders only count wheel rotations, whereas optical flow tracks the actual movement of floor texture relative to the camera. As a result, wheel slip on smooth concrete or uneven ground does not corrupt the measurement.

A typical setup uses a downward-facing camera that captures images at a high frame rate. An algorithm running on an edge processor or FPGA compares Frame A with Frame B and measures the shift of the floor texture $(dx, dy)$ in pixels.
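The text does not say which matching algorithm compares Frame A with Frame B. One common choice for estimating a single global $(dx, dy)$ is phase correlation, sketched below in NumPy. It recovers integer pixel shifts and assumes both frames have the same size and the shift is less than half the frame dimension; `estimate_shift` is a hypothetical name.

```python
import numpy as np


def estimate_shift(frame_a: np.ndarray, frame_b: np.ndarray) -> tuple[int, int]:
    """Return integer (dx, dy) such that frame_b is frame_a moved dx columns and dy rows."""
    fa = np.fft.fft2(frame_a.astype(float))
    fb = np.fft.fft2(frame_b.astype(float))
    cross = fb * np.conj(fa)
    cross /= np.abs(cross) + 1e-12       # keep phase only (normalized cross-power spectrum)
    corr = np.real(np.fft.ifft2(cross))  # sharp peak at the displacement
    dy, dx = np.unravel_index(np.argmax(corr), corr.shape)
    rows, cols = corr.shape
    # Peaks past the midpoint correspond to negative shifts (FFT wrap-around).
    if dy > rows // 2:
        dy -= rows
    if dx > cols // 2:
        dx -= cols
    return int(dx), int(dy)


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    floor = rng.random((64, 64))                         # stand-in for floor texture
    moved = np.roll(floor, shift=(3, -5), axis=(0, 1))   # 3 rows down, 5 columns left
    print(estimate_shift(floor, moved))                  # (-5, 3)
```

Phase correlation uses the whole frame at once, which suits a floor-facing camera where the entire image shifts together; sub-pixel accuracy would need interpolation around the peak.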

Given the camera's height above the floor and its focal length, this pixel shift converts to real-world speed in meters per second. The velocity estimate feeds directly into the localization stack (for example, a Kalman filter) to correct the position estimate in real time.
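Under a pinhole camera model looking straight down at flat ground, a shift of $\Delta p$ pixels per frame at height $h$, with focal length $f$ in pixels and frame rate $F$, corresponds to a ground speed of $v = \Delta p \cdot h \cdot F / f$. The sketch below applies that conversion and then a scalar Kalman measurement update of the kind a localization stack might run. The sensor numbers and helper names (`pixel_shift_to_speed`, `kalman_update`) are illustrative assumptions, not values from any particular product.

```python
def pixel_shift_to_speed(shift_px: float, height_m: float, focal_px: float, fps: float) -> float:
    """Convert a per-frame pixel shift to ground speed in m/s (pinhole model, camera facing down)."""
    metres_per_pixel = height_m / focal_px  # ground distance covered by one pixel
    return shift_px * metres_per_pixel * fps


def kalman_update(x_pred: float, p_pred: float, z: float, r: float) -> tuple[float, float]:
    """One scalar Kalman measurement update.

    x_pred, p_pred: predicted velocity and its variance (e.g. from wheel odometry).
    z, r: optical flow velocity measurement and its variance.
    """
    k = p_pred / (p_pred + r)          # Kalman gain: how much to trust the measurement
    x_new = x_pred + k * (z - x_pred)  # blend prediction and measurement
    p_new = (1.0 - k) * p_pred         # uncertainty shrinks after the update
    return x_new, p_new


if __name__ == "__main__":
    # Hypothetical sensor: 0.10 m above the floor, 500 px focal length, 100 fps.
    v_flow = pixel_shift_to_speed(4.0, height_m=0.10, focal_px=500.0, fps=100.0)
    print(v_flow)  # about 0.08 m/s
    # Slipping wheels predict 0.10 m/s; the update pulls the estimate toward the flow reading.
    print(kalman_update(x_pred=0.10, p_pred=0.004, z=v_flow, r=0.001))
```

A full localization filter would track position and heading as well, but the measurement step for the flow velocity has this same form.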

Real-World Applications

Warehouse Logistics

AGVs on polished concrete often experience micro-slip when accelerating. Optical flow bridges the gap between wheel odometry and LiDAR localization, limiting drift between landmark observations.

Precision Docking

When an AMR docks with a charger or conveyor that requires millimeter accuracy, wheel encoders alone are not precise enough. Optical flow provides accurate velocity feedback for the final approach.

Outdoor Agriculture

In agricultural robots, mud and loose soil cause constant wheel slip. Optical flow tracks ground texture to measure true speed and direction of travel regardless of traction.

Cleaning Robots

Industrial scrubbers wet the floor they drive over, which reduces traction. Optical-flow-based visual odometry keeps the cleaning map accurate, even on slippery patches.

Frequently Asked Questions

What is the main advantage of Optical Flow over Wheel Odometry?

The main advantage is immunity to wheel slip. Wheel odometry infers motion from wheel rotation, so wheels spinning on wet floors or carpet register movement that never happened. Optical flow measures actual travel over the ground, giving true velocity data regardless of traction.
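Because both sources report velocity, a simple slip check can compare them directly. The tolerance values and the `detect_slip` helper below are illustrative assumptions; a real system would tune them for its floor, tires, and sensor noise.

```python
def detect_slip(wheel_speed_m_s: float, flow_speed_m_s: float,
                abs_tol_m_s: float = 0.02, rel_tol: float = 0.10) -> bool:
    """Return True when wheel odometry disagrees with optical flow by more than the tolerance.

    The allowed gap is the larger of an absolute floor (for slow speeds)
    and a fraction of the optical flow speed (for fast speeds).
    """
    allowed = max(abs_tol_m_s, rel_tol * abs(flow_speed_m_s))
    return abs(wheel_speed_m_s - flow_speed_m_s) > allowed


if __name__ == "__main__":
    print(detect_slip(0.50, 0.49))  # small disagreement: no slip
    print(detect_slip(0.50, 0.20))  # wheels turning faster than the robot moves: slip
    print(detect_slip(0.30, 0.00))  # wheels spinning in place: slip
```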

Does Optical Flow work in the dark?

Not with a standard camera alone, since it needs ambient light to see texture. However, many industrial optical flow sensors include an IR LED illuminator, which lets them work in complete darkness by supplying their own light.

Does it require a textured surface?

Yes. Optical flow tracks texture patterns. Highly glossy or featureless surfaces (such as a spotless whiteboard or glass) offer too little texture: with no gradients, or gradients in only one direction (the "Aperture Problem"), motion cannot be detected reliably. Warehouse floors usually provide plenty of texture from scuffs, dust, and concrete grit.

How computationally expensive is it?

Dense optical flow places a heavy load on a main CPU. Modern robots therefore often use dedicated optical flow sensors (such as the PMW3901) or GPU/FPGA acceleration to compute flow at the edge, passing only compact velocity data to the navigation stack.

Can Optical Flow replace LiDAR?

No, they do different jobs. LiDAR handles mapping, obstacle avoidance, and global positioning (where you are on the map). Optical flow provides odometry (how far and in which direction you moved). Fusing the two gives the best results.

What is the typical operating height?

It depends on the lens focal length. Typical "mouse sensor" optical flow chips work best between 8 cm and 30 cm above the floor. Custom camera optics can operate much higher, as on drones flying at 50 m, provided the ground texture remains visible.

Does camera vibration affect accuracy?

Yes, significantly. Vibration causes motion blur and spurious apparent motion. Mount the sensor on vibration dampers, or fuse it with a gyroscope (IMU) to remove vibration-induced rotation from the true forward-speed estimate.

What is the "Aperture Problem"?

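It occurs when a moving edge is viewed through a small window (the aperture). If you watch a vertical line move, you can measure only the horizontal component of its motion, so a purely sideways move and a diagonal move look identical. Robotics algorithms avoid this by tracking corners (regions with strong gradients in two directions) rather than plain edges.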
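The corner-versus-edge distinction can be measured with the 2x2 structure tensor of the image gradients in a window. Its smaller eigenvalue is near zero for flat patches and straight edges and large only where gradients point in two directions. This is the Shi-Tomasi "good features to track" criterion, shown here as a NumPy sketch with a hypothetical `min_eigenvalue` helper.

```python
import numpy as np


def min_eigenvalue(patch: np.ndarray) -> float:
    """Smaller eigenvalue of the structure tensor summed over a patch.

    Near zero for uniform patches (no gradient) and for straight edges
    (gradient in one direction only, the aperture problem); large for corners.
    """
    gy, gx = np.gradient(patch.astype(float))
    tensor = np.array([[np.sum(gx * gx), np.sum(gx * gy)],
                       [np.sum(gx * gy), np.sum(gy * gy)]])
    return float(np.linalg.eigvalsh(tensor)[0])  # eigvalsh returns eigenvalues in ascending order


if __name__ == "__main__":
    flat = np.zeros((16, 16))
    edge = np.zeros((16, 16))
    edge[:, 8:] = 1.0        # vertical edge: motion along it is invisible
    corner = np.zeros((16, 16))
    corner[8:, 8:] = 1.0     # corner of a bright square: trackable in both directions
    for name, patch in [("flat", flat), ("edge", edge), ("corner", corner)]:
        print(name, min_eigenvalue(patch))
```

A tracker would keep only patches whose smaller eigenvalue exceeds a threshold, which is the same condition that keeps the Lucas-Kanade 2x2 system well conditioned.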

Is calibration required?

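Yes. It requires an accurate camera-to-floor distance and lens parameters. Changes in height (for example, from suspension compression) scale the velocity estimate proportionally. More advanced setups add a time-of-flight (ToF) range sensor to measure height continuously and rescale the flow in real time.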
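The height dependence follows from the pinhole conversion. With a measured shift of $\Delta p$ pixels per frame, frame rate $F$, focal length $f$ in pixels, and assumed camera height $h$, an error $\delta h$ in the assumed height propagates as:

$$
v = \frac{\Delta p \, h \, F}{f}
\quad\Longrightarrow\quad
\frac{\delta v}{v} = \frac{\delta h}{h}
$$

For example, a 1 cm height error at a nominal 10 cm mounting height produces a 10% error in every velocity reading, which is why a live ToF height measurement is used to rescale the flow.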

How does it handle dynamic shadows?

Shadows moving across the floor look like motion and can cause false readings. Methods such as Lucas-Kanade, which rely on brightness constancy, are susceptible, but more robust modern methods combined with controlled lighting (onboard LEDs and shielding from ambient light) reduce shadow-induced noise.
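One widely used way to make patch matching less sensitive to lighting changes, not named in the text above, is zero-mean normalized cross-correlation (ZNCC). Subtracting each patch's mean and dividing by its spread cancels a uniform brightness offset and gain within the patch. The sketch below is a NumPy illustration with a hypothetical `zncc` helper; it does not handle a shadow edge that cuts through the middle of a patch.

```python
import numpy as np


def zncc(patch_a: np.ndarray, patch_b: np.ndarray) -> float:
    """Zero-mean normalized cross-correlation in [-1, 1]; 1 means identical up to gain and offset."""
    a = patch_a.astype(float) - patch_a.mean()
    b = patch_b.astype(float) - patch_b.mean()
    denom = np.sqrt(np.sum(a * a) * np.sum(b * b))
    if denom == 0.0:
        return 0.0  # a flat patch has no texture to correlate
    return float(np.sum(a * b) / denom)


if __name__ == "__main__":
    rng = np.random.default_rng(1)
    texture = rng.random((8, 8))
    shadowed = 0.4 * texture + 0.05           # same texture, uniformly darker
    print(zncc(texture, shadowed))            # 1.0 up to rounding
    print(zncc(texture, rng.random((8, 8))))  # unrelated texture: much lower
```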