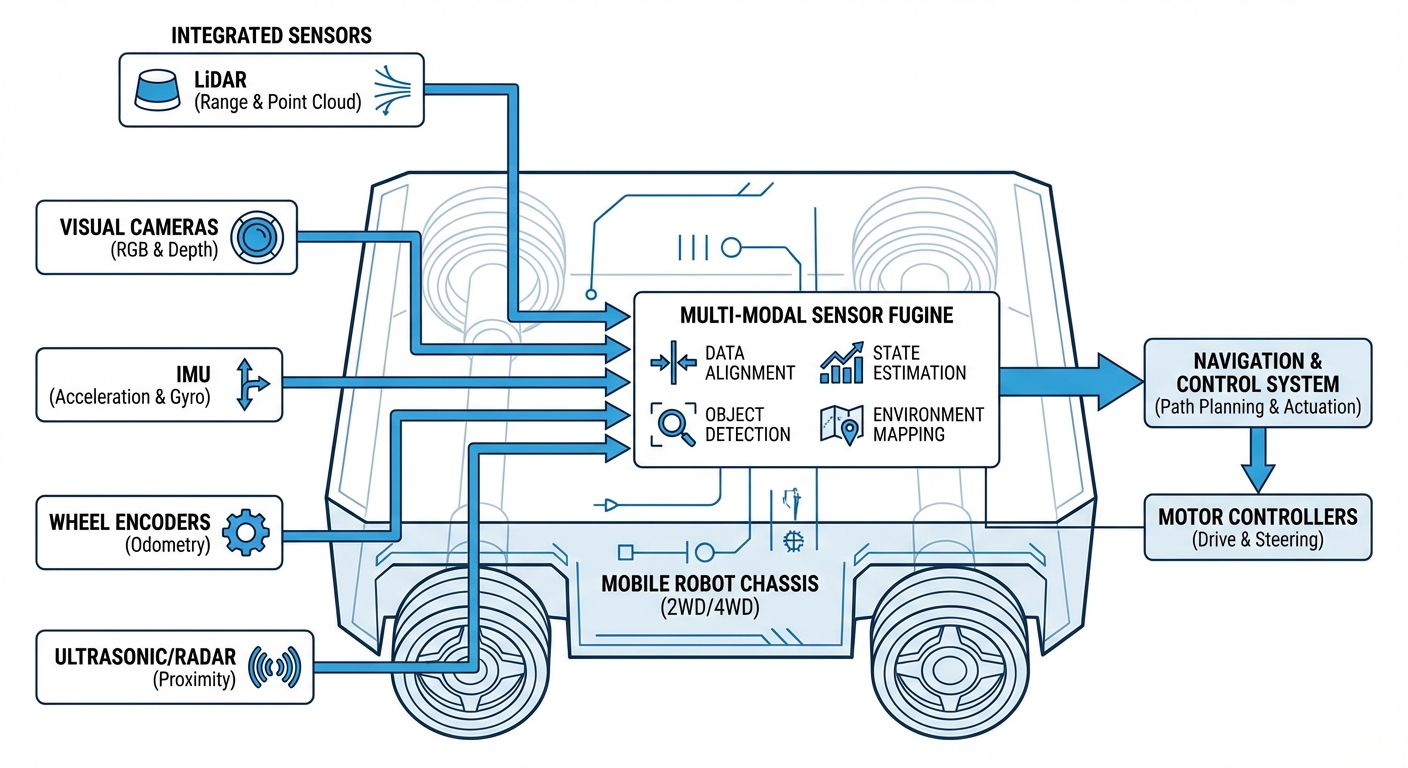

Multi-Modal Sensor Fusion

Unlock top-tier navigation and safety for your autonomous mobile robots by mathematically blending data from LiDAR, cameras, IMUs, and odometry. You get a single, consistent view of the environment that is more reliable than any one sensor can provide.

Core Concepts

Sensor Redundancy

Keeps your system running even if a sensor fails. If glare blinds the camera, LiDAR keeps detecting and mapping obstacles.

Complementary Data

Plays to each sensor's physical strengths. Cameras deliver color and semantic detail for reading signs, while radar measures range through fog or dust.

State Estimation (EKF)

Uses algorithms such as the Extended Kalman Filter (EKF) to estimate the robot's position, weighting each sensor input by its noise level.
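To make the noise weighting concrete, here is a minimal sketch of one predict/update cycle. It is a scalar, linear Kalman filter rather than a full EKF (an EKF would also linearize nonlinear motion and measurement models), and every number and function name below is an illustrative assumption.

```python
def kalman_predict(x, p, u, q):
    """Predict: shift the estimate by odometry increment u, grow variance by q."""
    return x + u, p + q


def kalman_update(x, p, z, r):
    """Update: blend prediction (x, p) with a measurement z of variance r."""
    k = p / (p + r)              # gain near 1 trusts the sensor, near 0 trusts the prediction
    x_new = x + k * (z - x)
    p_new = (1.0 - k) * p
    return x_new, p_new, k


# Hypothetical values: position in metres, variances in m^2.
x, p = 0.0, 1.0
x, p = kalman_predict(x, p, u=1.0, q=0.5)     # odometry says "moved 1 m": x=1.0, p=1.5

# The same 1.2 m reading, once from a precise sensor and once from a noisy one.
x_lidar, p_lidar, k_lidar = kalman_update(x, p, z=1.2, r=0.01)
x_cam, p_cam, k_cam = kalman_update(x, p, z=1.2, r=4.0)

print(f"precise sensor: gain={k_lidar:.3f}, estimate={x_lidar:.3f}")
print(f"noisy sensor:   gain={k_cam:.3f}, estimate={x_cam:.3f}")
```

The low-noise reading pulls the estimate almost all the way to the measurement, while the noisy one only nudges it.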

Temporal Synchronization

Aligns data from all sources to a common clock, typically to within a millisecond, which prevents "ghosting" artifacts (the same object showing up in two places) during high-speed AGV runs.
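One simple way to pair samples from two streams is nearest-timestamp matching with a tolerance. The sketch below assumes both streams are already stamped against the same clock; the function name, the 5 ms tolerance, and the sample stamps are hypothetical.

```python
from bisect import bisect_left


def match_nearest(lidar_stamps, camera_stamps, tolerance_s=0.005):
    """Pair each LiDAR stamp with the closest camera stamp within tolerance_s.

    Both lists must be sorted ascending. Returns (lidar_t, camera_t) pairs.
    """
    pairs = []
    for t in lidar_stamps:
        i = bisect_left(camera_stamps, t)
        # The nearest stamp sits just before or just after the insertion point.
        candidates = camera_stamps[max(i - 1, 0):i + 1]
        if not candidates:
            continue
        best = min(candidates, key=lambda c: abs(c - t))
        if abs(best - t) <= tolerance_s:
            pairs.append((t, best))
    return pairs


lidar = [0.000, 0.100, 0.200, 0.300]
camera = [0.002, 0.035, 0.068, 0.101, 0.134, 0.167, 0.250, 0.283]
pairs = match_nearest(lidar, camera)
print(pairs)  # [(0.0, 0.002), (0.1, 0.101)]
```

LiDAR scans with no camera frame inside the tolerance are dropped rather than fused with stale data.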

Spatial Calibration

Determines the geometric transforms (extrinsic parameters) between sensors so software can overlay 3D point clouds on 2D images.
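As a rough illustration of what the extrinsics are used for, the sketch below projects one LiDAR point into a camera image. It assumes an ideal pinhole camera with no lens distortion; the rotation, translation, and intrinsic values are made up for the example, not taken from any real rig.

```python
def project_lidar_point(point_lidar, rotation, translation, fx, fy, cx, cy):
    """Map a 3D LiDAR point to pixel coordinates, or None if behind the camera.

    rotation (3x3 nested lists) and translation (3 values) are the extrinsics
    taking LiDAR-frame coordinates into the camera frame; fx, fy, cx, cy are
    pinhole intrinsics in pixels.
    """
    # Extrinsics: p_cam = R * p_lidar + t
    x, y, z = [
        sum(rotation[row][col] * point_lidar[col] for col in range(3)) + translation[row]
        for row in range(3)
    ]
    if z <= 0.0:
        return None
    # Intrinsics: perspective divide, then scale and shift into pixels.
    return fx * x / z + cx, fy * y / z + cy


# Assumed frames: LiDAR is x-forward, y-left, z-up; camera is x-right, y-down, z-forward.
R = [[0.0, -1.0, 0.0],
     [0.0, 0.0, -1.0],
     [1.0, 0.0, 0.0]]
t = [0.0, 0.1, 0.0]    # hypothetical: camera mounted 0.1 m above the LiDAR

pixel = project_lidar_point([4.0, 0.5, 0.0], R, t, fx=600.0, fy=600.0, cx=320.0, cy=240.0)
print(pixel)  # approximately (245.0, 255.0)
```

A point 4 m ahead and 0.5 m to the left lands left of the image center, as expected. An error in R or t would shift every projected point, which is why calibration quality matters so much.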

Semantic Mapping

Goes way beyond geometry. Fusing ML vision with depth data helps the robot distinguish "this is a human" from "this is a pallet," tweaking safety behavior on the spot.
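Here is a toy sketch of that idea: a class label from a vision model is combined with depth samples from the same image region to pick a speed cap. The class names, distance bands, and speeds are hypothetical placeholders; real limits come from a site risk assessment.

```python
from statistics import median

# Hypothetical rules: for each class, (max range in m, speed cap in m/s).
SPEED_RULES = {
    "human": [(1.5, 0.0), (4.0, 0.3)],
    "pallet": [(0.5, 0.0), (2.0, 0.8)],
}
CRUISE_SPEED = 1.5  # assumed default when nothing relevant is nearby


def speed_cap(label, depth_samples_m):
    """Fuse a vision label with depth samples taken inside its bounding box."""
    distance = median(depth_samples_m)   # median resists stray depth outliers
    # Unknown classes fall back to the most cautious rule set.
    for max_range, cap in SPEED_RULES.get(label, SPEED_RULES["human"]):
        if distance <= max_range:
            return cap
    return CRUISE_SPEED


print(speed_cap("human", [3.0, 3.1, 2.9, 9.0]))   # 0.3
print(speed_cap("pallet", [3.0, 3.1, 2.9]))       # 1.5
```

At the same distance, a person forces a crawl while a pallet does not slow the robot at all.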

The Fusion Pipeline

In a typical mobile robotics stack, sensor fusion happens at two key levels: Early Fusion (low-level, on raw sensor data) and Late Fusion (high-level, on each sensor's processed outputs). It all starts with tight time synchronization of the raw data streams.

Low-Level Fusion:

High-Level Perception:

Real-World Applications

Intralogistics & Warehousing

AMRs lean on fusion to weave through dynamic aisles. When poor lighting or reflective surfaces degrade the cameras, LiDAR holds localization steady to within 1 cm.

Outdoor Delivery Robots

Sidewalk robots battle rain, snow, and harsh sun. Fusion lets them discard the false obstacles that LiDAR reports from raindrops by cross-checking against radar data.

Healthcare Environments

Hospital robots tackle crowded, glass-filled halls. Ultrasonics catch glass doors LiDAR misses, while cameras spot patients who get right-of-way.

Heavy Industry & Mining

In dust-choked sites where cameras fail, millimeter-wave radar and high-powered LiDAR keep autonomous trucks running safely 24/7.

Frequently Asked Questions

Why does sensor fusion matter for AGVs over just LiDAR?

LiDAR measures distance precisely but carries no semantic context and struggles with glass and dark, light-absorbing surfaces. Fusion adds cameras for object recognition and ultrasonic sensors for nearby transparent obstacles, covering the blind spots of any single sensor.

How does multi-modal fusion hit the robot's battery and compute?

It raises compute requirements significantly, often calling for a dedicated GPU or AI accelerator (such as an NVIDIA Jetson module) for real-time processing. But optimized edge hardware handles the load, and the safety and efficiency wins (fewer stops and retries) more than offset the extra power draw.

What's the difference between loose and tight coupling in fusion?

Loose coupling processes each sensor separately (for example, a GPS position fix and an IMU-derived position) and then blends the results. Tight coupling feeds raw measurements (GPS pseudoranges alongside IMU accelerations) into a single estimator. The math is tougher, but the result is far more resilient when signals like GPS partially drop out.

How do you calibrate a camera and LiDAR?

Extrinsic calibration finds the rotation and translation between the two sensor frames. It's usually done with a checkerboard target that both sensors can see. Advanced setups use online calibration to correct for vibration or thermal drift on the fly.

What happens if sensors give conflicting data?

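The fusion algorithm (usually a Kalman filter or a Bayesian network) uses covariance matrices to decide how much to trust each sensor. If LiDAR reports an obstacle with high confidence while the camera image is blurry, the LiDAR reading gets more weight. When the conflict is too large to reconcile, the safety logic picks the cautious option and stops.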
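A stripped-down version of that logic for two scalar range readings might look like the sketch below. It uses inverse-variance weighting with a simple consistency gate as a stand-in for a full filter; the 3-sigma threshold, function name, and numbers are assumptions for illustration.

```python
import math


def fuse_or_flag(z_a, var_a, z_b, var_b, gate_sigma=3.0):
    """Fuse two range readings of the same obstacle, or flag a conflict.

    Returns (distance, variance, conflict). On conflict the nearer reading is
    kept so the planner brakes for the closest possible obstacle.
    """
    # How many standard deviations apart are the readings, given both noises?
    disagreement = abs(z_a - z_b) / math.sqrt(var_a + var_b)
    if disagreement > gate_sigma:
        if z_a <= z_b:
            return z_a, var_a, True
        return z_b, var_b, True
    # Inverse-variance weighting: the less noisy sensor dominates the estimate.
    w_a, w_b = 1.0 / var_a, 1.0 / var_b
    fused = (w_a * z_a + w_b * z_b) / (w_a + w_b)
    return fused, 1.0 / (w_a + w_b), False


# Hypothetical readings in metres: sharp LiDAR (var 0.0004) vs blurry camera depth (var 0.09).
agree = fuse_or_flag(2.00, 0.0004, 2.30, 0.09)
clash = fuse_or_flag(5.00, 0.0004, 2.00, 0.09)
print(agree)
print(clash)
```

In the first case the fused distance stays very close to the LiDAR value. In the second the readings disagree by far more than their combined noise explains, so the sketch reports a conflict and keeps the nearer 2.0 m reading.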

Is 3D LiDAR a must, or does 2D cut it for fusion?

For flat warehouses, 2D LiDAR with wheel odometry is the go-to standard—super cost-effective. But outdoors or with overhangs like shelves, 3D LiDAR rules for full 3D maps and dodging stuff above or below the scan plane.

How does sensor fusion fix the "Kidnapped Robot" problem?

If the robot gets physically relocated (with no wheel odometry update), fusion recovers by matching live LiDAR scans or camera landmarks against the global map (for example with AMCL, Adaptive Monte Carlo Localization, or a SLAM relocalization step), letting it "wake up" and relocalize quickly.

What's AI's role in modern sensor fusion?

AI is increasingly powering "semantic fusion." Deep Neural Networks (DNNs) take in raw camera and radar data together and output object types and predicted trajectories, often outperforming traditional filters in messy, unstructured spots.

How do you sync timestamps across USB, Ethernet, and CAN bus sensors?

Network time protocols such as PTP (Precision Time Protocol) or NTP over Ethernet are standard for aligning system clocks. For hardware sync, industrial sensors accept PPS (pulse-per-second) triggers so that every device captures the world within microseconds of the others, which is crucial for fast movers.
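The arithmetic behind a two-way time exchange is short enough to show. The sketch below uses the standard offset and delay formulas shared by PTP and NTP, assuming the network delay is the same in both directions; the timestamps and helper names are invented for the example.

```python
def ptp_offset_and_delay(t1, t2, t3, t4):
    """Estimate clock offset and one-way delay from one two-way exchange.

    t1: master sends Sync (master clock)
    t2: slave receives it (slave clock)
    t3: slave sends Delay_Req (slave clock)
    t4: master receives it (master clock)
    Assumes a symmetric path delay.
    """
    offset = ((t2 - t1) - (t4 - t3)) / 2.0   # slave clock minus master clock
    delay = ((t2 - t1) + (t4 - t3)) / 2.0
    return offset, delay


def to_master_time(sensor_stamp, offset):
    """Re-express a stamp taken on the sensor's clock in the master's clock."""
    return sensor_stamp - offset


# Hypothetical exchange: sensor clock runs 2.5 ms ahead, one-way delay is 0.4 ms.
offset, delay = ptp_offset_and_delay(t1=10.0000, t2=10.0029, t3=10.0100, t4=10.0079)
print(f"offset={offset * 1e3:.3f} ms, delay={delay * 1e3:.3f} ms")
print(f"sensor stamp 10.0500 s maps to master time {to_master_time(10.0500, offset):.4f} s")
```

Once the offset is known, every sensor stamp can be shifted onto the master clock before fusion.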

Does sensor fusion eliminate the need for safety bumpers?

No. Regulations still demand physical safety bumpers or safety-rated laser scanners at PL d or PL e (ISO 3691-4). Fusion handles navigation and obstacle avoidance for efficiency, while certified safety hardware provides the final hard-stop backup.

Can sensor fusion supercharge SLAM (Simultaneous Localization and Mapping)?

Absolutely. Visual-LiDAR SLAM leads the pack. Fusing LiDAR's geometric precision with visual SLAM's loop closure (the camera recognizing previously visited places) produces low-drift, consistent maps over huge areas.

What's the cost of a full multi-modal stack?

It bumps the Bill of Materials (BOM) with extra sensors and compute. But for autonomous forklifts or high-value gear, ROI hits fast via more uptime (fewer jams), bolder speeds (confident planning), and slashed accident risks.