Loop Closure Detection

Ditch drift in your AGV navigation by recognizing places the robot has already visited. Loop closure is SLAM's secret sauce, turning wobbly paths into globally consistent maps for killer autonomy.

Core Concepts

Drift Correction

Fixes the error that piles up from wheel odometry and IMUs. No loop closure? Position error keeps growing the farther the robot travels.
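To see why the error never corrects itself, here is a minimal sketch. The robot, step length, and bias value are all hypothetical. It dead-reckons a robot that truly drives in a straight line while its heading estimate picks up a tiny constant gyro bias at every step. Each heading error is integrated into every later step, so the position error grows with distance.

```python
import math


def dead_reckon(steps, step_length, gyro_bias_per_step):
    """Integrate odometry for a robot that truly drives straight along +x.

    A constant (hypothetical) gyro bias is added to the estimated heading at every
    step, so the estimated path curves away from the true one.
    Returns the position error in meters after each step.
    """
    x_est, y_est, heading_est = 0.0, 0.0, 0.0
    errors = []
    for k in range(1, steps + 1):
        heading_est += gyro_bias_per_step  # uncorrected bias accumulates in the heading
        x_est += step_length * math.cos(heading_est)
        y_est += step_length * math.sin(heading_est)
        x_true, y_true = k * step_length, 0.0
        errors.append(math.hypot(x_est - x_true, y_est - y_true))
    return errors


errors = dead_reckon(steps=100, step_length=0.5, gyro_bias_per_step=0.001)
for k in (10, 50, 100):
    print(f"after {k * 0.5:.0f} m: error = {errors[k - 1]:.3f} m")
```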

Place Recognition

It matches the current scene, described with visual Bag of Words or LiDAR scan descriptors, against a database of previously visited places.
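Here is a minimal sketch of the matching step. It assumes each place has already been summarized as a fixed-length global descriptor, such as a BoW histogram or a LiDAR scan descriptor. The function name, thresholds, and toy descriptors are hypothetical. Production systems replace this linear scan with faster indexing.

```python
import math


def cosine_similarity(a, b):
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0.0 or norm_b == 0.0:
        return 0.0
    return dot / (norm_a * norm_b)


def find_loop_candidate(query, database, min_similarity=0.8, exclude_recent=50):
    """Return (index, score) of the most similar past place, or None.

    `database` holds global descriptors in the order they were recorded. The most
    recent `exclude_recent` entries are skipped: consecutive frames trivially look
    alike and would not indicate a revisit.
    """
    searchable = database[:-exclude_recent] if exclude_recent > 0 else database
    best = None
    for idx, descriptor in enumerate(searchable):
        score = cosine_similarity(query, descriptor)
        if score >= min_similarity and (best is None or score > best[1]):
            best = (idx, score)
    return best


db = [[1, 0, 0], [0, 1, 0], [0, 0, 1], [0.9, 0.1, 0], [0.5, 0.5, 0.5]]
print(find_loop_candidate([1, 0.05, 0], db, exclude_recent=2))
```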

Pose Graph Optimization

When the system detects a loop, it adds a constraint edge to the pose graph. Optimization tools like g2o or GTSAM then spread the accumulated error across the whole trajectory, weighting each correction by how uncertain each constraint is.
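Here is a minimal 1-D sketch that makes the optimizer's job concrete without g2o or GTSAM. The function names are hypothetical. Every pose is a single number, and each edge says "pose j minus pose i should equal z" with weight w. Weighted linear least squares stands in for the optimizer. Real pose graphs use 2-D or 3-D poses with rotations, which makes the problem nonlinear. That is why those libraries solve it iteratively.

```python
def solve_linear(A, b):
    """Solve A x = b by Gaussian elimination with partial pivoting (small dense systems)."""
    n = len(b)
    M = [list(row) + [b[i]] for i, row in enumerate(A)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(M[r][col]))
        M[col], M[pivot] = M[pivot], M[col]
        for r in range(col + 1, n):
            factor = M[r][col] / M[col][col]
            for c in range(col, n + 1):
                M[r][c] -= factor * M[col][c]
    x = [0.0] * n
    for r in range(n - 1, -1, -1):
        s = sum(M[r][c] * x[c] for c in range(r + 1, n))
        x[r] = (M[r][n] - s) / M[r][r]
    return x


def optimize_pose_graph_1d(num_poses, edges):
    """Weighted least squares over scalar poses.

    edges: (i, j, z, w) tuples meaning "x_j - x_i should equal z" with weight w
    (roughly the inverse variance of that measurement).
    """
    H = [[0.0] * num_poses for _ in range(num_poses)]
    b = [0.0] * num_poses
    for i, j, z, w in edges:
        # Normal-equation contributions of the cost w * (x_j - x_i - z)^2.
        H[i][i] += w
        H[j][j] += w
        H[i][j] -= w
        H[j][i] -= w
        b[i] -= w * z
        b[j] += w * z
    # Anchor x_0 at 0: relative edges alone leave the whole trajectory free to slide.
    H[0][0] += 1.0
    return solve_linear(H, b)


odometry = [(0, 1, 1.0, 1.0), (1, 2, 1.0, 1.0), (2, 3, -1.0, 1.0), (3, 4, -1.2, 1.0)]
loop = [(0, 4, 0.0, 1.0)]  # loop closure: "pose 4 is back at the start"
before = optimize_pose_graph_1d(5, odometry)
after = optimize_pose_graph_1d(5, odometry + loop)
print([round(v, 3) for v in before])
print([round(v, 3) for v in after])
```

Without the loop edge, the solver just replays dead reckoning and pose 4 stays at -0.2. With the loop edge, the 0.2 inconsistency is shared across all five unit-weight edges (0.04 each), so pose 4 ends near -0.04. Raising the loop edge's weight pulls pose 4 closer to 0 and pushes more of the correction onto the odometry edges.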

Perceptual Aliasing

Tackles the tricky case where two different spots look identical, such as matching warehouse aisles. That's why we rely on solid geometric verification to reject false loop closures.
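Here is a minimal RANSAC sketch of geometric verification. It assumes the loop candidate has already produced putative 2-D point matches, for example LiDAR keypoints projected onto the floor plane. The loop is repeated many times:

1. Fit a rigid transform to two randomly chosen matches.
2. Count how many of all the matches agree with that transform.

The loop closure is accepted only if the best transform has enough supporting inliers. Function names, tolerances, and the toy data are hypothetical. Real systems usually verify in 3-D, or with an essential or fundamental matrix for cameras.

```python
import math
import random


def rigid_from_two_pairs(p1, p2, q1, q2):
    """Rotation angle and translation mapping segment p1->p2 onto q1->q2 (2-D, no scale)."""
    angle = (math.atan2(q2[1] - q1[1], q2[0] - q1[0])
             - math.atan2(p2[1] - p1[1], p2[0] - p1[0]))
    angle = math.atan2(math.sin(angle), math.cos(angle))  # wrap to (-pi, pi]
    c, s = math.cos(angle), math.sin(angle)
    tx = q1[0] - (c * p1[0] - s * p1[1])
    ty = q1[1] - (s * p1[0] + c * p1[1])
    return angle, tx, ty


def apply_transform(transform, p):
    angle, tx, ty = transform
    c, s = math.cos(angle), math.sin(angle)
    return (c * p[0] - s * p[1] + tx, s * p[0] + c * p[1] + ty)


def ransac_verify(matches, iterations=200, inlier_tol=0.1, min_inliers=8, seed=0):
    """Geometrically verify a loop candidate from putative point matches.

    matches: list of ((x, y) in the current frame, (x, y) in the candidate frame).
    Returns (accepted, best_transform, inlier_count).
    """
    if len(matches) < 2:
        return False, None, 0
    rng = random.Random(seed)
    best_transform, best_count = None, 0
    for _ in range(iterations):
        (p1, q1), (p2, q2) = rng.sample(matches, 2)
        if math.dist(p1, p2) < 1e-6:
            continue  # degenerate sample: rotation is undefined
        transform = rigid_from_two_pairs(p1, p2, q1, q2)
        count = sum(1 for p, q in matches
                    if math.dist(apply_transform(transform, p), q) < inlier_tol)
        if count > best_count:
            best_transform, best_count = transform, count
    return best_count >= min_inliers, best_transform, best_count


# Toy data: 15 correct matches under a known transform plus 5 outliers.
true_transform = (0.3, 1.0, -2.0)
points = [(float(i % 5), float(i // 5)) for i in range(20)]
matches = [(p, apply_transform(true_transform, p)) for p in points[:15]]
matches += [(p, (p[0] + 3.0, p[1] - 5.0)) for p in points[15:]]
accepted, transform, inliers = ransac_verify(matches)
print(accepted, inliers)
```

The inlier thresholds trade recall for safety. A higher `min_inliers` rejects more true loops, but it also rejects more aliased ones.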

Global Consistency

Keeps the map globally consistent, both geometrically and topologically. When the robot returns to where it started, the start and end of its trajectory line up, paving the way for dependable long-term path planning and navigation.

Multi-Session SLAM

Lets robots blend maps from different sessions. A robot can wake up, figure out where it is from past maps, and pick right back up without missing a beat.

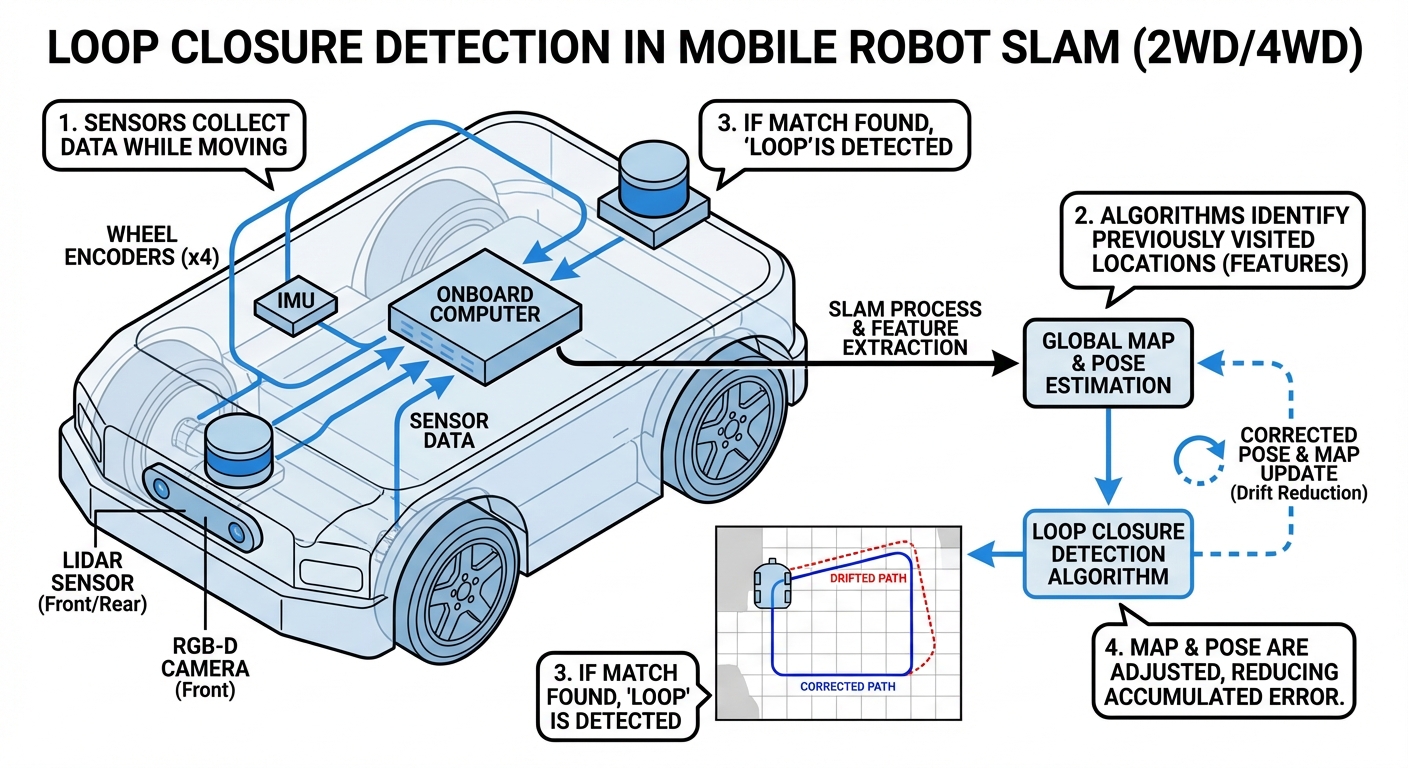

How It Works

Loop closure detection runs quietly in the background of the SLAM process. As the AGV rolls along, it keeps scanning with LiDAR or stereo cameras, pulling out key geometric features or visual descriptors.

These get boiled down into a compact signature and checked against a database of places the robot has visited before. Spot a strong match? The system estimates the relative transformation between the current pose and the matched past pose.
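Estimating that transformation is usually a least-squares alignment of matched points. Below is a minimal 2-D sketch: the closed-form planar case of Kabsch/Umeyama alignment, without scale. It assumes the matches are already outlier-free. The function name and data are hypothetical. Real pipelines typically work in 3-D and refine the result with ICP for LiDAR or PnP for cameras.

```python
import math


def estimate_rigid_2d(src, dst):
    """Least-squares rotation and translation mapping src points onto dst points.

    src, dst: equal-length lists of matched (x, y) points, at least two pairs.
    Returns (theta, tx, ty) such that dst ~= R(theta) * src + (tx, ty).
    """
    n = len(src)
    if n < 2 or n != len(dst):
        raise ValueError("need at least two matched point pairs")
    # Centroids of both point sets.
    sx, sy = sum(p[0] for p in src) / n, sum(p[1] for p in src) / n
    dx, dy = sum(q[0] for q in dst) / n, sum(q[1] for q in dst) / n
    # For centered points, the optimal angle is atan2(sum of cross terms, sum of dot terms).
    cross = dot = 0.0
    for (px, py), (qx, qy) in zip(src, dst):
        px, py, qx, qy = px - sx, py - sy, qx - dx, qy - dy
        cross += px * qy - py * qx
        dot += px * qx + py * qy
    theta = math.atan2(cross, dot)
    c, s = math.cos(theta), math.sin(theta)
    # The translation carries the rotated source centroid onto the destination centroid.
    return theta, dx - (c * sx - s * sy), dy - (s * sx + c * sy)


theta_true, tx_true, ty_true = 0.5, 2.0, -1.0
src = [(0.0, 0.0), (1.0, 0.0), (0.0, 2.0), (3.0, 1.0)]
c, s = math.cos(theta_true), math.sin(theta_true)
dst = [(c * x - s * y + tx_true, s * x + c * y + ty_true) for x, y in src]
print(estimate_rigid_2d(src, dst))
```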

Finally, it adds a loop constraint to the pose graph. A backend optimizer minimizes the overall error, snapping the current path back onto the known map and correcting the drift accumulated since the last visit.

Real-World Applications

Large-Scale Warehousing

In huge spaces over 100,000 sq ft, wheel slip leads to major odometry drift. Loop closure resets position error every time the robot passes a familiar intersection or charging station.

Dynamic Factory Floors

Factory layouts shift often, and pallets and people are always on the move. Robust loop closure lets robots relocalize even if 60% of the visual features are blocked or relocated.

Hospital Logistics

Hospitals have endless, identical corridors (hello, perceptual aliasing). Smart loop closure picks out unique landmarks like nurses' stations or artwork to tell floors and wings apart.

Outdoor Patrol Robots

GPS drops out around tall buildings or trees. Loop closure keeps outdoor security robots on track by recognizing gates or building corners.

Frequently Asked Questions

What's the difference between Loop Closure and Local Localization?

Local localization, such as odometry or scan matching, tracks movement step by step from the last frame, so its errors build up over time. Loop closure is the big-picture fix: it recognizes a place visited long ago, measures the total accumulated error, and corrects the entire trajectory history to kill that drift.

How does the system handle "False Positive" loop closures?

False positives can wreck your map. We use geometric checks (like RANSAC) to confirm scenes match physically, plus temporal checks to make sure the match holds over multiple frames before locking it in.
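The temporal check can be as simple as requiring a streak of agreeing matches. Here is a minimal sketch, loosely modeled on the temporal-consistency idea used by BoW loop detectors. The class name and parameters are hypothetical. Matches "agree" when successive matched keyframe indices stay close together, meaning the robot appears to be retracing the same stretch of its old path.

```python
from collections import deque


class TemporalConsistencyCheck:
    """Accept a loop only after `required` consecutive keyframes agree.

    Each new keyframe reports the index of its best-matching past keyframe, or None.
    """

    def __init__(self, required=3, max_index_gap=2):
        self.required = required
        self.max_index_gap = max_index_gap
        self.recent = deque(maxlen=required)

    def update(self, match_index):
        if match_index is None:
            self.recent.clear()  # a miss breaks the streak
            return False
        if self.recent and abs(match_index - self.recent[-1]) > self.max_index_gap:
            self.recent.clear()  # inconsistent jump: start a new streak
        self.recent.append(match_index)
        return len(self.recent) == self.required


checker = TemporalConsistencyCheck(required=3, max_index_gap=2)
for match in [40, None, 10, 11, 12, 13]:
    print(match, checker.update(match))
```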

Does Loop Closure work in changing lighting conditions?

Visual SLAM can falter when lighting shifts. Classic descriptors like ORB tolerate moderate brightness changes, and learned descriptors are generally more robust to larger shifts. LiDAR loop closure? LiDAR supplies its own illumination, so it works the same day or night, perfect for round-the-clock ops.

What is "Perceptual Aliasing" and why is it a risk?

Perceptual aliasing is when two different places fool the sensors into thinking they're the same, like duplicate warehouse aisles. Close the wrong loop, and your map folds in on itself. We stop that with covariance checks and graph consistency tests.
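"Covariance checks" can take several forms. One common form is a chi-square gate, sketched below with hypothetical names. It compares two relative positions:

- the one the loop closure measures, and
- the one predicted from the current trajectory estimate.

The loop closure is accepted only if the difference is plausible given the combined uncertainty. This 2-D, position-only version is a simplification. Real systems gate full poses and add graph-level tests, such as checking several loop closures for mutual consistency.

```python
CHI2_95_2DOF = 5.991  # 95% quantile of the chi-square distribution with 2 degrees of freedom


def mahalanobis_sq_2d(residual, cov):
    """Squared Mahalanobis distance r^T * inv(cov) * r for a 2-vector and 2x2 covariance."""
    (a, b), (c, d) = cov
    det = a * d - b * c
    if a <= 0.0 or det <= 0.0:
        raise ValueError("covariance must be positive definite")
    rx, ry = residual
    # inv([[a, b], [c, d]]) = (1 / det) * [[d, -b], [-c, a]]
    return (rx * (d * rx - b * ry) + ry * (-c * rx + a * ry)) / det


def passes_gate(predicted_offset, measured_offset, cov, threshold=CHI2_95_2DOF):
    """True if the measured loop-closure offset is statistically consistent with the prediction.

    predicted_offset: (dx, dy) between the two poses according to the current estimate.
    measured_offset: (dx, dy) reported by the loop-closure registration.
    cov: 2x2 covariance of the difference (prediction and measurement combined).
    """
    residual = (measured_offset[0] - predicted_offset[0],
                measured_offset[1] - predicted_offset[1])
    return mahalanobis_sq_2d(residual, cov) <= threshold


predicted = (5.0, 0.0)
cov = ((0.25, 0.0), (0.0, 0.25))  # hypothetical: 0.5 m standard deviation per axis
print(passes_gate(predicted, (5.3, -0.4), cov))  # True
print(passes_gate(predicted, (8.0, 3.0), cov))   # False
```

A gate is only as good as its covariance. After long drift, the predicted uncertainty is large, so the gate becomes permissive exactly where aliasing is most dangerous.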

How computationally expensive is Loop Closure Detection?

Comparing against every past frame would be a slog. Instead, we use Bag-of-Words for fast lookups and narrow the search to keyframes near the robot's estimated position, which keeps it real-time on onboard hardware.
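Here is a minimal sketch of those two speed-ups together, with hypothetical names. An inverted index maps each visual word to the keyframes that contain it, so a query only touches keyframes that share at least one word. A radius filter around the estimated position then trims the list further. The radius must be generous enough to cover accumulated drift, and global relocalization would skip it entirely.

```python
import math
from collections import defaultdict


class KeyframeIndex:
    """Inverted index from visual-word id to the keyframes that contain it."""

    def __init__(self):
        self.word_to_keyframes = defaultdict(set)
        self.positions = {}  # keyframe id -> estimated (x, y) position

    def add(self, keyframe_id, words, position):
        for w in set(words):
            self.word_to_keyframes[w].add(keyframe_id)
        self.positions[keyframe_id] = position

    def candidates(self, words, position, radius, min_shared=2):
        """Keyframes sharing >= min_shared words with the query and lying within `radius`."""
        shared = defaultdict(int)
        for w in set(words):
            for kf in self.word_to_keyframes.get(w, ()):
                shared[kf] += 1
        result = [(kf, count) for kf, count in shared.items()
                  if count >= min_shared
                  and math.dist(self.positions[kf], position) <= radius]
        return sorted(result, key=lambda item: item[1], reverse=True)


index = KeyframeIndex()
index.add(0, [1, 2, 3, 4], (0.0, 0.0))
index.add(1, [3, 4, 5], (10.0, 0.0))   # shares words but is far away
index.add(2, [1, 2, 9], (0.5, 0.5))
index.add(3, [7, 8], (1.0, 1.0))       # nearby but shares no words
print(index.candidates([1, 2, 3, 4], (0.2, 0.1), radius=2.0))
```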

Can Loop Closure help if the robot is "kidnapped"?

Yes, that's global relocalization. Kidnapped robot with no local track to rely on? Loop closure searches the whole map, identifies the spot from sensor data alone, and snaps the robot right back onto the map.

Which sensors are best for Loop Closure: LiDAR or Camera?

Cameras deliver rich details, great for telling apart geometrically similar spots with unique signs or colors. LiDAR nails precise shapes, ideal for big structures. Fusing them? Best of both worlds.

What happens to the map when a loop is closed?

A loop closes, and graph optimization kicks in, typically solved with a nonlinear least-squares method such as Levenberg-Marquardt. It spreads the built-up error along the full trajectory, shifting and realigning the map so gaps close and walls and obstacles land back in consistent positions.

Does Loop Closure work in highly dynamic environments?

Dynamic scenes with people or forklifts are tough. Smart algorithms strip moving objects out of scans or images and focus on static structure like walls, pillars, and racks for solid recognition.
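One simple heuristic is sketched below with hypothetical names and parameters. It keeps only points whose voxel has been occupied in most of the recent scans, on the assumption that walls and pillars persist while people and forklifts move on. It also assumes the scans are already registered in a common frame. Many systems instead, or additionally, use semantic segmentation to mask out people and vehicles.

```python
from collections import Counter


def voxel_key(point, voxel_size):
    return tuple(int(c // voxel_size) for c in point)


def static_points(scans, voxel_size=0.25, min_fraction=0.8):
    """Keep points from the latest scan whose voxel was occupied in most recent scans.

    scans: list of scans (oldest first), each a list of (x, y) points already in a
    common map frame. Voxels seen in fewer than `min_fraction` of the scans are
    treated as transient and their points are dropped.
    """
    counts = Counter()
    for scan in scans:
        counts.update({voxel_key(p, voxel_size) for p in scan})  # count each voxel once per scan
    needed = min_fraction * len(scans)
    return [p for p in scans[-1] if counts[voxel_key(p, voxel_size)] >= needed]


wall = [(5.0, 0.0), (5.0, 0.5)]
scans = [wall + [(1.0 + 0.5 * k, 2.0)] for k in range(5)]  # a person walking past the wall
print(static_points(scans))
```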

What is Bag-of-Words (BoW) in this context?

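Bag-of-Words borrows from text search. It quantizes each local image feature into a 'visual word' from a pre-trained vocabulary, built by clustering many feature descriptors, so an image becomes a histogram of word counts. That lets you blitz-compare the current view to thousands of past ones by the 'words' they share.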
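Here is a toy sketch of the idea. It assumes a small flat vocabulary of real-valued descriptor centers. In practice, the vocabulary comes from clustering (for example k-means) and is often organized as a tree, and libraries such as DBoW2 work with binary descriptors like ORB. The TF-IDF weighting down-weights words that show up in almost every image. All names and values are hypothetical.

```python
import math
from collections import Counter


def nearest_word(descriptor, vocabulary):
    """Index of the closest vocabulary center (squared Euclidean distance)."""
    return min(range(len(vocabulary)),
               key=lambda i: sum((a - b) ** 2 for a, b in zip(descriptor, vocabulary[i])))


def compute_idf(training_word_sets, vocab_size):
    """idf[w] = log(N / n_w): words found in fewer training images weigh more."""
    n_images = len(training_word_sets)
    idf = []
    for w in range(vocab_size):
        n_w = sum(1 for words in training_word_sets if w in words)
        idf.append(math.log(n_images / n_w) if n_w else 0.0)
    return idf


def bow_vector(descriptors, vocabulary, idf):
    """Sparse TF-IDF bag-of-words vector {word: weight}, L2-normalized."""
    counts = Counter(nearest_word(d, vocabulary) for d in descriptors)
    total = sum(counts.values())
    vec = {w: (n / total) * idf[w] for w, n in counts.items()}
    norm = math.sqrt(sum(v * v for v in vec.values()))
    return {w: v / norm for w, v in vec.items()} if norm > 0 else vec


def similarity(v1, v2):
    """Cosine similarity of two normalized sparse vectors: only shared words contribute."""
    return sum(weight * v2.get(w, 0.0) for w, weight in v1.items())


vocabulary = [(0.0, 0.0), (1.0, 0.0), (0.0, 1.0)]  # toy 2-D "descriptor" centers
idf = compute_idf([{0, 1}, {0, 2}, {0}, {1, 2}], len(vocabulary))
query = bow_vector([(0.9, 0.1), (1.1, 0.0), (0.1, 0.9)], vocabulary, idf)
past_a = bow_vector([(1.0, 0.1), (0.0, 1.1)], vocabulary, idf)
past_b = bow_vector([(0.1, 0.0), (0.0, 0.2)], vocabulary, idf)
print(similarity(query, past_a), similarity(query, past_b))
```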

How frequently should loop closure be attempted?

Loop closure detection usually runs only on 'keyframes' picked by distance or rotation (say, every 1 meter or 20 degrees). Doing it every frame? Wasteful, since consecutive frames are nearly identical and drift builds slowly anyway.
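Here is a minimal keyframe selector using the example thresholds from the text (1 meter or 20 degrees). The function name and the toy trajectory are hypothetical.

```python
import math


def angle_diff(a, b):
    """Smallest signed difference between two angles, in radians."""
    return math.atan2(math.sin(a - b), math.cos(a - b))


def select_keyframes(poses, min_distance=1.0, min_rotation=math.radians(20)):
    """Return indices of (x, y, yaw) poses chosen as keyframes.

    A new keyframe is taken when the robot has moved at least `min_distance` meters
    or turned at least `min_rotation` radians since the last keyframe.
    """
    if not poses:
        return []
    keyframes = [0]
    for i in range(1, len(poses)):
        lx, ly, lyaw = poses[keyframes[-1]]
        x, y, yaw = poses[i]
        moved = math.hypot(x - lx, y - ly)
        turned = abs(angle_diff(yaw, lyaw))
        if moved >= min_distance or turned >= min_rotation:
            keyframes.append(i)
    return keyframes


straight = [(0.3 * i, 0.0, 0.0) for i in range(9)]                       # drive 0.3 m per frame
turn = [(straight[-1][0], 0.0, math.radians(6 * k)) for k in range(1, 9)]  # then rotate 6 deg per frame
print(select_keyframes(straight + turn))  # [0, 4, 8, 12, 16]
```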

Can Loop Closure fail?

Yes. In feature-poor environments like long blank hallways, detection can fail, and on routes that never revisit a place there is no loop to close. Then it's all on precise local odometry.