LiDAR SLAM

Simultaneous Localization and Mapping (SLAM) lets Automated Guided Vehicles (AGVs) navigate complex spaces without tracks, tapes, or wires. LiDAR SLAM builds a map from laser range data while simultaneously estimating the vehicle's position within that map, typically with centimeter-level accuracy.

Core Concepts

Point Clouds

The raw output of the laser sensor. Each pulse yields one distance measurement, and thousands of pulses per second build a dense set of points describing the robot's immediate surroundings.
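A 2D LiDAR reports each sweep as a list of distances, one per beam angle; turning that list into a point cloud is a polar-to-Cartesian conversion. The sketch below assumes a layout where beam `i` points at `angle_min + i * angle_increment` (the same field names ROS uses in its LaserScan message); the default valid range limits are hypothetical placeholders.

```python
import math

def scan_to_points(ranges, angle_min, angle_increment, range_min=0.05, range_max=30.0):
    """Convert one 2D LiDAR sweep (polar) into (x, y) points in the sensor frame.

    ranges[i] is the distance for beam i, whose angle (radians) is
    angle_min + i * angle_increment. range_min/range_max defaults are
    hypothetical; use the values from your sensor's datasheet.
    """
    points = []
    for i, r in enumerate(ranges):
        # Drop invalid readings: no return (inf), NaN, or outside the rated range
        if not (range_min <= r <= range_max):
            continue
        angle = angle_min + i * angle_increment
        points.append((r * math.cos(angle), r * math.sin(angle)))
    return points
```

For example, `scan_to_points([1.0, 2.0, float("inf")], 0.0, math.pi / 2)` yields two points, roughly (1, 0) and (0, 2); the third beam had no return and is discarded.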

Pose Estimation

The algorithm estimates the robot's position (x, y) and heading (theta) on the map by measuring how successive scans shift relative to one another over time.
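A pose (x, y, theta) is also what lets the robot place sensor readings on the map: a point measured relative to the robot is rotated by theta and shifted by (x, y). A minimal sketch, assuming metres, radians, and theta measured counter-clockwise from the map's x-axis; `Pose2D` is a hypothetical name, not a specific library type.

```python
import math
from dataclasses import dataclass

@dataclass
class Pose2D:
    """Hypothetical 2D pose type: position in metres, heading in radians."""
    x: float
    y: float
    theta: float  # counter-clockwise from the map x-axis (assumed convention)

    def to_map(self, px, py):
        """Transform a point (px, py) from the robot frame into the map frame."""
        c, s = math.cos(self.theta), math.sin(self.theta)
        return (self.x + c * px - s * py, self.y + s * px + c * py)
```

A robot at (2, 1) facing 90 degrees that sees an object 1 m straight ahead places it at roughly (2, 2) on the map: `Pose2D(2.0, 1.0, math.pi / 2).to_map(1.0, 0.0)`.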

Scan Matching

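The key step: aligning the latest LiDAR scan with previous scans or with the full map, typically using Iterative Closest Point (ICP) or the Normal Distributions Transform (NDT), to track the robot's motion.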
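To make ICP concrete, here is a minimal point-to-point 2D ICP sketch in plain Python. Each iteration pairs every point of the new scan with its nearest neighbour in the reference scan, then solves in closed form for the rotation and translation that best align those pairs. It assumes both scans are lists of (x, y) tuples, uses brute-force nearest-neighbour search and a fixed iteration count, and has no outlier rejection; production implementations typically add k-d trees, convergence checks, and robust weighting.

```python
import math

def best_rigid_transform(src, dst):
    """Closed-form 2D rotation + translation minimising sum |R*src[i] + t - dst[i]|^2."""
    n = len(src)
    sx = sum(p[0] for p in src) / n
    sy = sum(p[1] for p in src) / n
    dx = sum(q[0] for q in dst) / n
    dy = sum(q[1] for q in dst) / n
    # Cross-covariance terms of the centred point sets
    sxx = sxy = syx = syy = 0.0
    for (ax, ay), (bx, by) in zip(src, dst):
        ax, ay, bx, by = ax - sx, ay - sy, bx - dx, by - dy
        sxx += ax * bx
        sxy += ax * by
        syx += ay * bx
        syy += ay * by
    theta = math.atan2(sxy - syx, sxx + syy)
    c, s = math.cos(theta), math.sin(theta)
    # Translation maps the rotated source centroid onto the destination centroid
    tx = dx - (c * sx - s * sy)
    ty = dy - (s * sx + c * sy)
    return theta, tx, ty

def nearest(point, cloud):
    """Brute-force nearest neighbour (O(N) per query)."""
    px, py = point
    return min(cloud, key=lambda q: (q[0] - px) ** 2 + (q[1] - py) ** 2)

def icp(src, dst, iterations=20):
    """Align scan `src` to reference scan `dst`; returns total (theta, tx, ty)."""
    cur = list(src)
    th_tot, tx_tot, ty_tot = 0.0, 0.0, 0.0
    for _ in range(iterations):
        # 1. Data association: closest reference point for every current point
        matches = [nearest(p, dst) for p in cur]
        # 2. Best rigid transform for these correspondences
        th, tx, ty = best_rigid_transform(cur, matches)
        c, s = math.cos(th), math.sin(th)
        cur = [(c * x - s * y + tx, s * x + c * y + ty) for x, y in cur]
        # 3. Compose the increment with the running total (increment applied last)
        th_tot += th
        tx_tot, ty_tot = c * tx_tot - s * ty_tot + tx, s * tx_tot + c * ty_tot + ty
    return th_tot, tx_tot, ty_tot
```

The returned transform is the robot's estimated motion between the two scans. ICP only converges to the right answer when the initial misalignment is small relative to the spacing of features, which is one reason odometry is used to provide a starting guess.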

Loop Closure

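Recognizing a place the robot has already visited. Matching the current scan against that earlier observation "closes the loop" and corrects the drift that has accumulated in the pose estimate and the map.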
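The two halves of loop closure, detecting a revisit and correcting drift, can be illustrated with a deliberately simplified sketch. It assumes the trajectory is a list of (x, y) positions (heading ignored), finds a candidate by a simple distance check, and spreads the correction linearly along the loop. Real systems confirm candidates with scan matching and usually correct the trajectory with pose-graph optimization; the linear spreading here is a stand-in for that, and `radius` and `min_gap` are hypothetical tuning values.

```python
import math

def find_loop_candidate(trajectory, radius=1.0, min_gap=50):
    """Index of an earlier position within `radius` of the latest one, or None.

    The most recent `min_gap` positions are skipped because they are trivially
    close to the current one. Both thresholds are hypothetical.
    """
    x, y = trajectory[-1]
    for i in range(len(trajectory) - min_gap):
        px, py = trajectory[i]
        if math.hypot(px - x, py - y) <= radius:
            return i
    return None

def distribute_correction(trajectory, start, error):
    """Spread a loop-closure error (ex, ey) linearly over positions start..end.

    Simplified stand-in for pose-graph optimization: the position at `start`
    is unchanged and the latest position receives the full correction.
    """
    ex, ey = error
    n = len(trajectory) - 1 - start
    if n <= 0:
        return list(trajectory)
    corrected = list(trajectory[:start])
    for k, (x, y) in enumerate(trajectory[start:]):
        w = k / n  # 0 at the loop start, 1 at the latest position
        corrected.append((x + w * ex, y + w * ey))
    return corrected
```

For example, if a robot that believes it drove 4 m along the x-axis recognizes its starting point, `distribute_correction([(0, 0), (1, 0), (2, 0), (3, 0), (4, 0)], 0, (-4, 0))` pulls every intermediate position back proportionally.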

Occupancy Grid

The final map: usually a 2D grid in which each cell stores the probability that the corresponding area is free or occupied. Cells that have never been observed remain unknown.
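A common way to store those probabilities is in log-odds form, where each new observation is a simple addition. The sketch below assumes a hypothetical inverse sensor model (a hit makes a cell 70% likely occupied, a pass-through 30%), hypothetical clamping limits, and hypothetical free/occupied thresholds; a cell at log-odds 0 (probability 0.5) is treated as unknown.

```python
import math

# Hypothetical sensor model and limits; tune for a real sensor
L_OCC = math.log(0.7 / 0.3)   # beam ended in this cell: evidence of occupied
L_FREE = math.log(0.3 / 0.7)  # beam passed through: evidence of free
L_MIN, L_MAX = -5.0, 5.0      # clamping lets cells change again later

class OccupancyGrid:
    def __init__(self, width, height):
        # Log-odds 0.0 == probability 0.5 == "unknown"
        self.logodds = [[0.0] * width for _ in range(height)]

    def update(self, row, col, hit):
        """Add one observation's evidence to a cell."""
        value = self.logodds[row][col] + (L_OCC if hit else L_FREE)
        self.logodds[row][col] = max(L_MIN, min(L_MAX, value))

    def probability(self, row, col):
        """Convert log-odds back to an occupancy probability."""
        return 1.0 - 1.0 / (1.0 + math.exp(self.logodds[row][col]))

    def state(self, row, col, free_below=0.35, occupied_above=0.65):
        """Classify a cell; the thresholds are hypothetical."""
        p = self.probability(row, col)
        if p < free_below:
            return "free"
        if p > occupied_above:
            return "occupied"
        return "unknown"
```

Working in log-odds avoids repeated multiplication of probabilities, and the clamping stops a cell from becoming so certain that a moved object could never be cleared.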

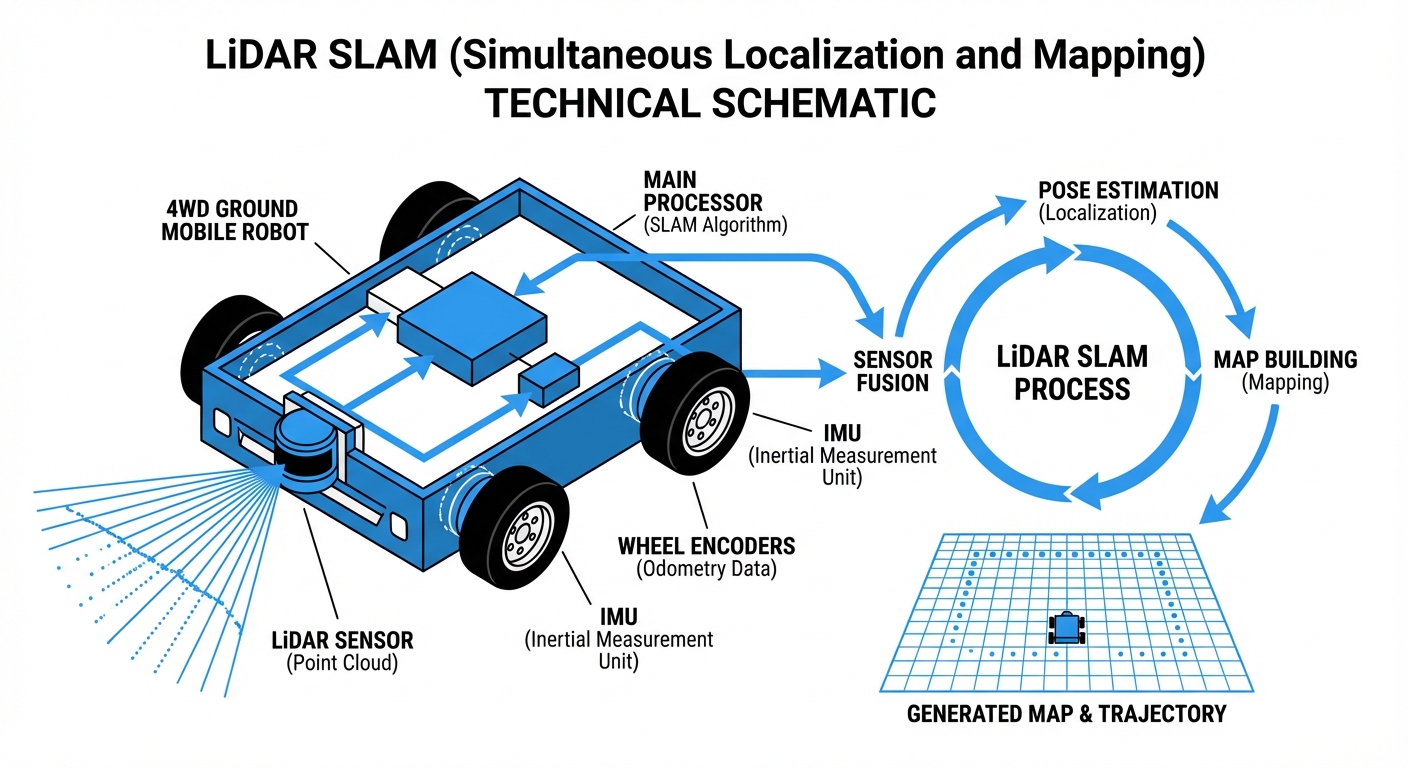

Sensor Fusion

LiDAR rarely works alone. SLAM algorithms blend it with wheel odometry and IMU data to stay accurate even during fast maneuvers.
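One simple way to picture the fusion is a complementary filter: predict the pose from wheel travel plus the IMU gyro's yaw rate, then nudge that prediction toward the LiDAR scan-matching result. This is a hedged illustration, not how any particular SLAM package does it; extended Kalman filters are the more common choice in practice. The function names and the blending weight `alpha` are hypothetical.

```python
import math

def wrap(angle):
    """Wrap an angle to (-pi, pi]."""
    return math.atan2(math.sin(angle), math.cos(angle))

def predict(pose, wheel_distance, gyro_yaw_rate, dt):
    """Dead-reckoning step: distance from wheel odometry, rotation from the gyro.

    Using the gyro for heading helps when wheels slip during fast turns.
    """
    x, y, th = pose
    th_mid = th + 0.5 * gyro_yaw_rate * dt  # midpoint heading approximates the arc
    return (x + wheel_distance * math.cos(th_mid),
            y + wheel_distance * math.sin(th_mid),
            wrap(th + gyro_yaw_rate * dt))

def blend(predicted, lidar, alpha=0.8):
    """Complementary blend: weight `alpha` (hypothetical) on the LiDAR pose."""
    px, py, pth = predicted
    lx, ly, lth = lidar
    dth = wrap(lth - pth)  # shortest angular difference
    return (px + alpha * (lx - px), py + alpha * (ly - py), wrap(pth + alpha * dth))
```

When scan matching is unreliable (for example in a featureless corridor), lowering `alpha` shifts trust toward odometry and the IMU.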

How It Works

SLAM starts with the LiDAR sensor emitting pulses of laser light into the surroundings. The pulses reflect off walls, racks, machines, or whatever else is present and return to the sensor. By measuring each pulse's time of flight (ToF), the sensor calculates the exact distance to the reflecting surface, and by sweeping the beam it collects distances all around the robot, often across a full 360 degrees.
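The time-of-flight calculation itself is short: the pulse travels to the surface and back, so the distance is half the round-trip path, d = c * t / 2. A minimal sketch:

```python
SPEED_OF_LIGHT = 299_792_458.0  # m/s, exact by definition of the metre

def tof_distance(round_trip_seconds):
    """Distance to the reflecting surface; the pulse covers the path twice."""
    return SPEED_OF_LIGHT * round_trip_seconds / 2.0
```

A 20 ns round trip corresponds to about 3 m. The same relation shows why LiDAR timing electronics must be so precise: a 1 cm change in distance alters the round trip by only about 67 picoseconds.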

As the AGV moves, SLAM compares the fresh scan to the previous one (scan matching). It searches for matching shapes and features to estimate how far the robot has moved and how much it has turned. Chaining these incremental estimates together converts local readings into a global position on the map.
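Chaining incremental estimates means composing each motion step, expressed in the robot's own frame, onto the current global pose. A minimal sketch, assuming each increment is a (dx, dy, dtheta) tuple such as one produced by scan matching; `compose` and `integrate` are hypothetical names. Because every step carries a small error, this chain drifts over time, which is what loop closure later corrects.

```python
import math

def compose(pose, delta):
    """Apply a motion increment given in the robot frame at `pose`.

    pose = (x, y, theta) in the map frame; delta = (dx, dy, dtheta) in the
    robot frame. Returns the new map-frame pose with theta in (-pi, pi].
    """
    x, y, th = pose
    dx, dy, dth = delta
    c, s = math.cos(th), math.sin(th)
    new_th = math.atan2(math.sin(th + dth), math.cos(th + dth))
    return (x + c * dx - s * dy, y + s * dx + c * dy, new_th)

def integrate(start, deltas):
    """Chain a sequence of increments into a global pose (dead reckoning)."""
    pose = start
    for d in deltas:
        pose = compose(pose, d)
    return pose
```

Driving 1 m forward and turning 90 degrees, four times in a row, should bring the robot back to where it started: `integrate((0.0, 0.0, 0.0), [(1.0, 0.0, math.pi / 2)] * 4)` returns approximately (0, 0, 0).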

At the same time, the robot updates its internal map. A new obstacle is added to the occupancy grid; when an old one disappears, such as a pallet that has been moved, the affected cells are cleared. This keeps navigation flexible in busy, changing factories.
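Clearing a moved pallet works because every beam also carries information about the space it crossed. A typical approach traces the grid cells along each beam (here with Bresenham's line algorithm), adds "free" evidence to the cells the beam passed through, and adds "occupied" evidence to the cell where it ended. The sketch assumes a sparse grid stored as a dict of log-odds values; the evidence increments are hypothetical and clamping is omitted for brevity.

```python
def bresenham(r0, c0, r1, c1):
    """Grid cells on the straight line from (r0, c0) to (r1, c1), inclusive."""
    cells = []
    dr, dc = abs(r1 - r0), abs(c1 - c0)
    sr = 1 if r1 > r0 else -1
    sc = 1 if c1 > c0 else -1
    err = dc - dr
    r, c = r0, c0
    while True:
        cells.append((r, c))
        if (r, c) == (r1, c1):
            break
        e2 = 2 * err
        if e2 >= -dr:  # step along the column axis
            err -= dr
            c += sc
        if e2 <= dc:   # step along the row axis
            err += dc
            r += sr
    return cells

def integrate_beam(grid, robot_cell, hit_cell, free_delta=-0.4, occ_delta=0.85):
    """Update a sparse log-odds grid {(row, col): value} with one beam.

    Cells the beam crossed get free evidence; the endpoint gets occupied
    evidence. The delta values are hypothetical.
    """
    ray = bresenham(*robot_cell, *hit_cell)
    for cell in ray[:-1]:
        grid[cell] = grid.get(cell, 0.0) + free_delta
    grid[hit_cell] = grid.get(hit_cell, 0.0) + occ_delta
```

After a pallet is removed, beams that used to stop at its cells now pass through them, so repeated free evidence gradually pulls those cells back toward "free".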

Real-World Applications

Warehouse Intralogistics

Autonomous Mobile Robots (AMRs) use SLAM to weave through busy aisles with moving pallets and workers, making goods-to-person picking smooth and efficient, with no magnetic tape required.

Manufacturing Assembly

LiDAR-guided tuggers deliver parts directly to assembly lines. SLAM lets them reroute quickly around forklifts or machines, avoiding production stoppages.

Healthcare Delivery

Hospital robots rely on precise SLAM to navigate tight corridors and elevators, hauling linens, medications, and meals while steering clear of patients and delicate equipment.

Hazardous Inspection

In hazardous environments such as chemical plants or nuclear facilities, SLAM lets robots map the area and carry out inspections autonomously and reliably.

Frequently Asked Questions

What is the difference between SLAM and Laser Guidance (LGV)?

Laser Guidance usually requires reflective targets installed on walls or columns for triangulation. SLAM relies on natural features such as walls, racks, and machines, so no infrastructure changes are needed, which makes it faster and more flexible to deploy.

How does LiDAR SLAM handle dynamic environments with moving people?

Robust SLAM algorithms filter out transient objects. By tracking whether an object persists across scans over time, the algorithm classifies moving things such as people or forklifts as temporary obstacles rather than permanent map features, so the robot can avoid them without corrupting the map.
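One simple way to implement "tracking consistency over time" is a persistence filter: a cell only becomes part of the static map if it has been seen occupied in most of the recent scans. This is a hedged illustration of the idea rather than the method of any particular SLAM system; the class name and the `window`/`min_hits` thresholds are hypothetical.

```python
from collections import deque

class PersistenceFilter:
    """Hypothetical filter: a cell counts as static only if it was observed
    occupied in at least `min_hits` of its last `window` observations.
    Anything briefer is treated as a temporary (dynamic) obstacle."""

    def __init__(self, window=20, min_hits=15):
        self.window = window
        self.min_hits = min_hits
        self.history = {}  # cell -> deque of recent observations (1 = occupied)

    def observe(self, cell, occupied):
        h = self.history.setdefault(cell, deque(maxlen=self.window))
        h.append(1 if occupied else 0)

    def is_static(self, cell):
        h = self.history.get(cell)
        return h is not None and sum(h) >= self.min_hits
```

A wall is occupied in every scan and passes the test; a person walking past occupies a given cell for only a few scans and stays out of the permanent map, although the robot still avoids it in the meantime.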

Does LiDAR SLAM work in complete darkness?

Yes. LiDAR is an active sensor: it emits its own laser pulses, so it does not depend on ambient lighting. It works in pitch-black warehouses and on dim factory floors, unlike camera-based visual SLAM.

What happens if the environment changes significantly (e.g., layout change)?

Small changes such as moved pallets are handled on the fly. Major structural changes, such as relocated walls or racking, typically require a full remap or a partial update. Many modern fleet management systems support updating only the changed areas.

Can LiDAR SLAM detect glass or transparent surfaces?

Not reliably; this is a known limitation. Laser pulses can pass straight through glass or reflect unpredictably off mirrors. To compensate, integrators often pair LiDAR with ultrasonic sensors, or add "virtual walls" to the digital map that keep the robot away from glass areas.
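A virtual wall is typically just a region the path planner treats as blocked, whatever the LiDAR reports there. The sketch below assumes a 2D costmap stored as a list of rows and rectangular keep-out zones given in grid coordinates; the function name and the 254 "lethal" cost value are assumptions chosen to resemble common 8-bit costmap conventions.

```python
def apply_keepout(costmap, zones, lethal=254):
    """Stamp rectangular keep-out zones into a planner costmap.

    costmap: list of rows of integer costs.
    zones: list of (row_min, col_min, row_max, col_max), inclusive; zones
    extending past the map edge are clipped. `lethal` is an assumed value.
    """
    rows, cols = len(costmap), len(costmap[0])
    for r0, c0, r1, c1 in zones:
        for r in range(max(0, r0), min(rows - 1, r1) + 1):
            for c in range(max(0, c0), min(cols - 1, c1) + 1):
                costmap[r][c] = lethal
    return costmap
```

Because the zone lives in the map rather than in the sensor data, the planner avoids it even when the laser sees straight through a glass wall.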

What is the typical localization accuracy of 2D LiDAR SLAM?

In a typical industrial setup with enough fixed features, 2D LiDAR SLAM achieves ±1 cm to ±5 cm accuracy. That is precise enough for docking, charging, and pallet handling.

How does the "Kidnapped Robot Problem" apply here?

If a powered-off robot is picked up and moved, it wakes up without knowing where it is. Modern SLAM systems use global localization methods such as Monte Carlo Localization (MCL), a particle filter, to compare the current scan against the full map and quickly recover the robot's position.
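The core of MCL is a measurement update followed by resampling: every particle (a candidate pose) is scored by how well the scan predicted from the map at that pose matches the real scan, and likely particles are duplicated while unlikely ones die out. The sketch below shows only that step, with a Gaussian likelihood and low-variance resampling. It assumes a caller-supplied `expected_scan(pose)` function (for example, ray casting into the occupancy grid); that function, `sigma`, and the omitted motion update are assumptions. For a kidnapped robot, the initial particles would be spread across the whole map.

```python
import math
import random

def mcl_update(particles, observed, expected_scan, sigma=0.2, rng=random):
    """One measurement update + low-variance resampling step of MCL.

    particles:     list of candidate poses (x, y, theta)
    observed:      list of ranges from the real scan
    expected_scan: assumed function pose -> list of ranges predicted from the map
    sigma:         assumed range noise (metres) for the Gaussian likelihood
    """
    weights = []
    for p in particles:
        predicted = expected_scan(p)
        err2 = sum((o - e) ** 2 for o, e in zip(observed, predicted))
        weights.append(math.exp(-err2 / (2 * sigma ** 2)))
    total = sum(weights)
    if total == 0.0:
        return list(particles)  # no particle explains the scan; keep them as-is
    # Low-variance (systematic) resampling: one random offset, evenly spaced picks
    n = len(particles)
    step = total / n
    u = rng.uniform(0.0, step)
    resampled, cumulative, i = [], weights[0], 0
    for _ in range(n):
        while u > cumulative and i < n - 1:
            i += 1
            cumulative += weights[i]
        resampled.append(particles[i])
        u += step
    return resampled
```

As a toy example, picture a robot facing a wall at x = 10 m that measures 3 m: with particles spread along x from 0 to 9, nearly all of them collapse onto x = 7 after one update. In a real map, several distinct places may match a single scan, so the robot usually has to move and repeat the update before the particles converge.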

Is 3D LiDAR necessary, or is 2D sufficient?

For flat indoor floors such as warehouses, 2D LiDAR is the standard choice: it is cheaper and less computationally demanding. 3D LiDAR is better suited to outdoor areas, ramps, and spaces with overhangs that a single 2D scan plane can miss.

How computationally expensive is LiDAR SLAM?

It is moderately demanding: lighter than processing high-resolution video for visual SLAM, but it still needs a capable industrial PC or an embedded board such as an NVIDIA Jetson or a Raspberry Pi 4 (or newer) to run scan matching and path planning in real time at 20 Hz or more.

Can LiDAR SLAM work in long, featureless corridors?

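Long corridors cause "geometric degeneracy": as the robot drives straight, consecutive scans look nearly identical, so scan matching cannot tell how far it has moved along the corridor. SLAM bridges this gap by relying more heavily on wheel odometry and the IMU until distinctive features appear again.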
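One simple heuristic for detecting degeneracy looks at which way the scanned surfaces face. In a corridor, nearly all surface normals point across the corridor, so the 2x2 scatter matrix of the normals has one eigenvalue near zero, meaning nothing constrains motion along the corridor. The sketch assumes an ordered list of (x, y) points from a single continuous surface run; the function names are hypothetical, and any cut-off for "degenerate" (for example, switching to odometry below a ratio of 0.05) would be a tuning assumption.

```python
import math

def scan_normals(points):
    """Approximate unit surface normals from consecutive points of an ordered scan."""
    normals = []
    for (x0, y0), (x1, y1) in zip(points, points[1:]):
        tx, ty = x1 - x0, y1 - y0
        length = math.hypot(tx, ty)
        if length > 1e-9:
            normals.append((-ty / length, tx / length))  # tangent rotated 90 degrees
    return normals

def degeneracy_ratio(points):
    """Smallest/largest eigenvalue of the normals' scatter matrix.

    Near 0: all surfaces face the same axis, so translation along them is
    unconstrained by scan matching. Near 1: well-constrained geometry.
    """
    normals = scan_normals(points)
    a = sum(nx * nx for nx, _ in normals)
    b = sum(nx * ny for nx, ny in normals)
    d = sum(ny * ny for _, ny in normals)
    # Closed-form eigenvalues of the symmetric matrix [[a, b], [b, d]]
    mean, spread = (a + d) / 2, math.hypot((a - d) / 2, b)
    lam_min, lam_max = mean - spread, mean + spread
    return lam_min / lam_max if lam_max > 0 else 0.0
```

A straight wall segment gives a ratio of about 0, while a corner, with walls facing two directions, gives a ratio close to 1.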

What maintenance does the LiDAR sensor require?

Maintenance is light but essential. Keep the sensor window free of dust and oil to avoid false "ghost" obstacles. Mechanical (spinning) LiDARs have moving parts that wear over time, whereas solid-state units have no moving parts to wear out, although their windows still need cleaning.

Does SLAM replace safety sensors?

No. SLAM handles navigation. Safety is provided by certified safety PLCs and dedicated safety laser scanners (often yellow) that cut motor power immediately if anything enters a protective field, regardless of what the SLAM map shows.