Instance Segmentation

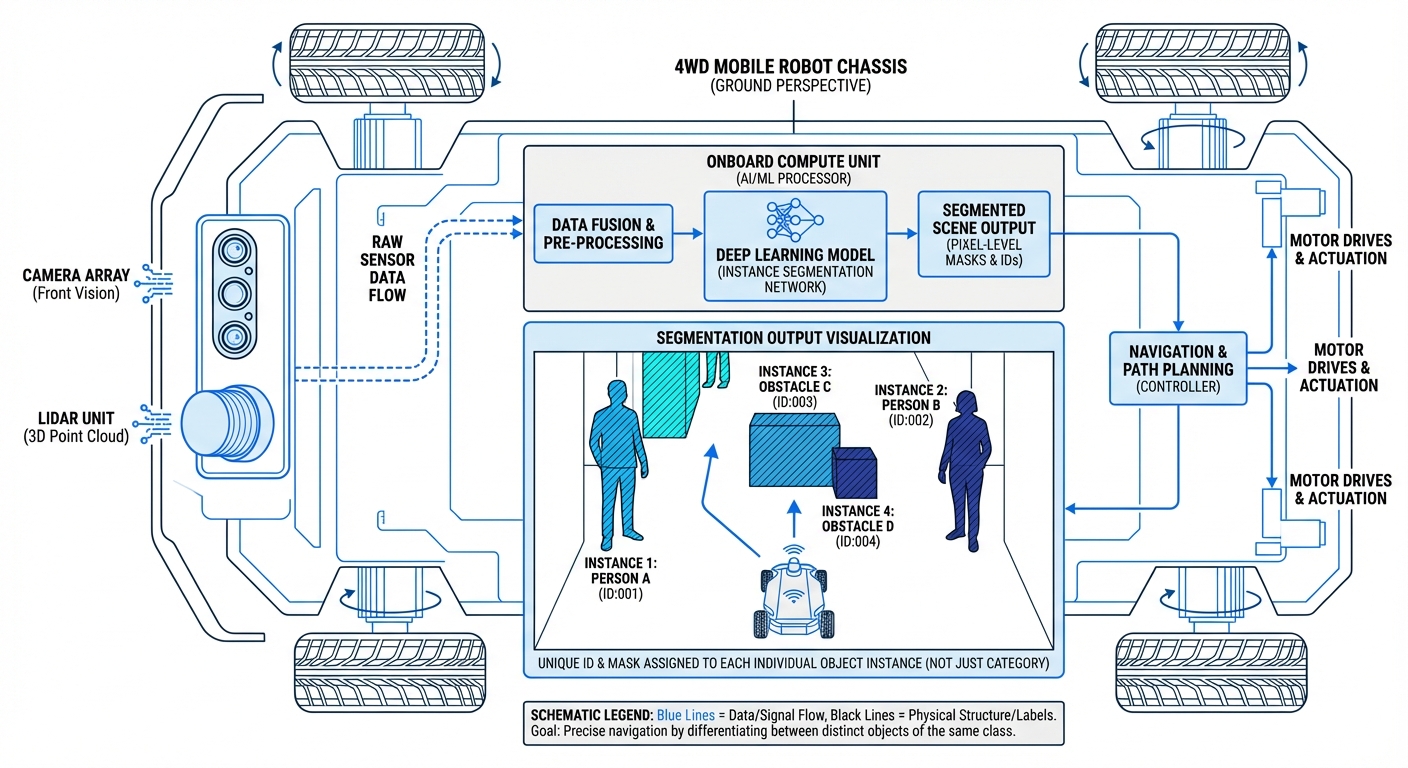

Give your mobile fleet pixel-precise perception. Instance segmentation lets AGVs detect objects and tell apart individual objects of the same category, improving picking precision and on-the-fly navigation.

Core Concepts

Pixel-Level Masks

Instead of rough bounding boxes, instance segmentation gives each object its own binary mask that marks exactly which pixels belong to it, capturing its true shape and edges for precise interaction.

Object Distinction

It tells 'Forklift A' apart from 'Forklift B', which is essential for predicting where each of several moving vehicles is headed in tight shared spaces.
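
Separating instances within one frame is the segmentation model's job. Keeping the same label on the same forklift from one frame to the next needs a tracking step on top of it. A minimal sketch, assuming masks are stored as Python sets of (row, col) pixels and using greedy mask-IoU matching as a stand-in for a full tracker (the function names and the 0.3 threshold are hypothetical):

```python
from itertools import count


def mask_iou(a, b):
    """Intersection-over-union of two masks given as sets of (row, col) pixels."""
    union = len(a | b)
    return len(a & b) / union if union else 0.0


def associate(prev, current, next_id, min_iou=0.3):
    """Greedily carry track IDs from the previous frame to the current one.

    prev: {track_id: mask} from the previous frame.
    current: list of masks detected in this frame.
    next_id: iterator that yields fresh IDs for newly appeared objects.
    Returns {track_id: mask} for the current frame.
    """
    # Score every (previous track, current mask) pair, best overlap first.
    pairs = sorted(
        ((mask_iou(pm, cm), tid, ci)
         for tid, pm in prev.items()
         for ci, cm in enumerate(current)),
        reverse=True,
    )
    assigned, used_tracks, used_masks = {}, set(), set()
    for iou, tid, ci in pairs:
        if iou < min_iou:
            break  # all remaining pairs overlap too little to be the same object
        if tid in used_tracks or ci in used_masks:
            continue
        assigned[tid] = current[ci]
        used_tracks.add(tid)
        used_masks.add(ci)
    # Masks that matched no previous track are treated as new objects.
    for ci, cm in enumerate(current):
        if ci not in used_masks:
            assigned[next(next_id)] = cm
    return assigned


ids = count(1)  # hypothetical ID source: 1, 2, 3, ...
frame1 = associate({}, [{(0, 0), (0, 1)}, {(5, 5), (5, 6)}], ids)
frame2 = associate(frame1, [{(5, 6), (5, 7)}, {(0, 1), (0, 2)}], ids)
print(sorted(frame2))  # [1, 2]: both forklifts keep their IDs after moving
```

Greedy matching is simple and fast for a handful of objects; trackers that add motion prediction or optimal assignment tend to be more robust when vehicles cross paths.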

Mask R-CNN & YOLO

Models such as Mask R-CNN (a two-stage detector) and YOLOv8-seg (a single-stage model built for real-time use) let you choose the balance between inference speed and mask accuracy that your edge hardware can support.

Occlusion Handling

Some models, known as amodal segmentation models, are trained to estimate an object's full shape even when it is partly hidden by shelves or machines.

Grasping Point Analysis

Knowing an item's precise outline lets a robotic arm on a mobile base choose reliable grasp points.
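
One simple way to turn a mask into a grasp candidate (an illustrative heuristic, not the only method) is to take the mask's centroid and align the gripper with the object's principal axis, both computed from image moments. The sketch assumes a 2-D list of 0/1 values; `grasp_from_mask` is a hypothetical name, and converting the pixel result into arm coordinates would additionally require camera calibration.

```python
import math


def grasp_from_mask(mask):
    """Return (row, col, angle_rad) as a simple grasp estimate.

    (row, col) is the mask centroid. angle_rad is the orientation of the
    mask's major axis, measured from the +column direction, derived from
    second-order central moments. A parallel-jaw gripper would typically
    close perpendicular to this axis.
    """
    pts = [(r, c) for r, row in enumerate(mask) for c, v in enumerate(row) if v]
    if not pts:
        raise ValueError("empty mask")
    n = len(pts)
    rc = sum(r for r, _ in pts) / n
    cc = sum(c for _, c in pts) / n
    mu20 = sum((c - cc) ** 2 for _, c in pts)          # spread along columns (x)
    mu02 = sum((r - rc) ** 2 for r, _ in pts)          # spread along rows (y)
    mu11 = sum((c - cc) * (r - rc) for r, c in pts)    # x-y covariance
    angle = 0.5 * math.atan2(2 * mu11, mu20 - mu02)
    return rc, cc, angle


bar = [[0, 0, 0, 0, 0],
       [1, 1, 1, 1, 1],
       [0, 0, 0, 0, 0]]
print(grasp_from_mask(bar))  # centroid (1.0, 2.0), major axis along the row
```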

Hardware Acceleration

Optimized for GPU and TPU inference, so heavy segmentation workloads don't slow down critical safety navigation.

How It Works

Instance segmentation combines object detection with semantic segmentation. In two-stage models such as Mask R-CNN, the AGV's vision system first places bounding boxes around objects of interest such as pallets, people, or barriers.

Inside each box, a small Fully Convolutional Network (FCN) predicts a binary mask that classifies every pixel as object or background.

The result is a detailed instance-level map in which the robot knows that a blob of pixels is not just an 'obstacle' but specifically 'Pallet #43', separate from 'Pallet #44' next to it. This level of detail matters most when grasping objects or maneuvering through tight spaces.
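
The box-then-mask pipeline can be sketched in a few lines. This assumes a Mask R-CNN-style output in which every detection carries a box and a small fixed-size grid of mask probabilities; the `paste_masks` helper, the (r0, c0, r1, c1) box format, and the 0.5 threshold are assumptions for illustration. Each grid is stretched over its box and thresholded, and every detection receives its own ID in a shared label map:

```python
def paste_masks(height, width, detections, threshold=0.5):
    """Build an instance label map: 0 = background, k = k-th detection.

    detections: list of (box, probs). box = (r0, c0, r1, c1) with exclusive
    r1/c1; probs is a small 2-D grid of mask probabilities for that box.
    Each grid is resized to its box by nearest-neighbor sampling and
    thresholded into a binary mask.
    """
    labels = [[0] * width for _ in range(height)]
    for inst_id, ((r0, c0, r1, c1), probs) in enumerate(detections, start=1):
        gh, gw = len(probs), len(probs[0])
        bh, bw = r1 - r0, c1 - c0
        for r in range(max(r0, 0), min(r1, height)):
            for c in range(max(c0, 0), min(c1, width)):
                # Map the image pixel back to the nearest cell of the mask grid.
                gr = min(gh - 1, (r - r0) * gh // bh)
                gc = min(gw - 1, (c - c0) * gw // bw)
                if probs[gr][gc] >= threshold:
                    labels[r][c] = inst_id  # later detections overwrite overlaps
    return labels


pallets = [
    ((0, 0, 4, 3), [[0.9, 0.9], [0.9, 0.1]]),  # pallet 1: lower-right corner is background
    ((0, 3, 4, 6), [[0.8]]),                    # pallet 2: fills its whole box
]
label_map = paste_masks(4, 6, pallets)
for row in label_map:
    print(row)
```

Production implementations usually resize with bilinear interpolation rather than nearest-neighbor sampling, which gives smoother mask edges.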

Real-World Applications

Warehouse Pallet Picking

AMRs use instance segmentation to separate tightly packed pallets, so they can engage exactly the right one without bumping its neighbors.

Agricultural Robotics

In automated harvesting, it separates individual fruits or plants from the surrounding foliage, giving exact edges for gentle picking.

Human-Robot Collaboration

Safety systems use segmentation to track the exact outline of each human worker, so robots slow down or detour only when truly needed, which keeps throughput high.
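
As an illustration of slowing down only when needed, a speed limit can be derived from the distance to the nearest pixel segmented as a person. The sketch assumes an RGB-D camera whose depth image is pixel-aligned with the masks, treats a depth of 0 as invalid, and uses hypothetical values (1.5 m/s top speed, full speed beyond 2 m, stop within 0.5 m). It produces an advisory value only and is not a certified safety function:

```python
def advisory_speed(depth_m, person_masks, v_max=1.5, stop_dist=0.5, slow_dist=2.0):
    """Advisory speed in m/s based on the nearest segmented person.

    depth_m: 2-D list of per-pixel depths in meters, aligned with the masks.
    person_masks: list of person masks, each a set of (row, col) pixels.
    Returns v_max beyond slow_dist, 0.0 within stop_dist, linear in between.
    """
    # Ignore pixels without a valid depth reading (reported here as 0).
    depths = [depth_m[r][c] for mask in person_masks for r, c in mask if depth_m[r][c] > 0]
    if not depths:
        return v_max  # no person segmented with valid depth
    nearest = min(depths)
    if nearest <= stop_dist:
        return 0.0
    if nearest >= slow_dist:
        return v_max
    return v_max * (nearest - stop_dist) / (slow_dist - stop_dist)


depth = [[3.0, 1.25],
         [0.0, 4.0]]
worker = {(0, 1), (1, 0)}  # one person mask; pixel (1, 0) has no valid depth
print(advisory_speed(depth, [worker]))  # 0.75
```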

Defect Detection

On assembly lines, inspection robots locate individual scratches or dents, and the segmentation masks let them measure each defect's size to grade its severity.
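
Measuring a defect from its mask amounts to counting mask pixels and converting them to physical units. The sketch assumes a calibrated camera viewing the surface roughly head-on, so every pixel covers the same `mm_per_px` by `mm_per_px` square; `defect_size`, the grade names, and the thresholds are hypothetical:

```python
def defect_size(mask, mm_per_px, minor_mm2=2.0, major_mm2=10.0):
    """Return (area_mm2, grade) for one segmented defect.

    mask: 2-D list of 0/1 values covering the defect.
    mm_per_px: edge length of one pixel on the part surface, in millimeters.
    """
    area_px = sum(sum(row) for row in mask)
    area_mm2 = area_px * mm_per_px ** 2
    if area_mm2 < minor_mm2:
        grade = "cosmetic"
    elif area_mm2 < major_mm2:
        grade = "minor"
    else:
        grade = "major"
    return area_mm2, grade


scratch = [[0, 1, 1, 0],
           [0, 1, 1, 0],
           [0, 1, 1, 0]]  # 6 mask pixels
print(defect_size(scratch, mm_per_px=0.5))  # (1.5, 'cosmetic')
print(defect_size(scratch, mm_per_px=2.0))  # (24.0, 'major')
```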

Frequently Asked Questions

How does Instance Segmentation differ from Semantic Segmentation?

Semantic segmentation lumps all objects of the same class together (like coloring every 'car' red). Instance segmentation separates them individually ('car 1' red, 'car 2' blue), which is crucial for counting objects or handling specific ones.
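
The difference is easy to see in code: collapsing an instance map to class names produces a semantic map, and the boundary between two touching objects of the same class disappears. A minimal sketch with hypothetical helper names and toy data:

```python
from collections import Counter


def to_semantic(instance_map, instance_class):
    """Replace each instance ID with its class name (0 becomes 'background')."""
    return [[instance_class.get(i, "background") for i in row] for row in instance_map]


def count_instances(instance_map, instance_class):
    """Count distinct instance IDs per class in an instance map."""
    ids = {i for row in instance_map for i in row if i}
    return Counter(instance_class[i] for i in ids)


# Two adjacent cars, IDs 1 and 2 in the instance map.
instance_map = [[1, 1, 2, 2],
                [0, 0, 0, 0]]
instance_class = {1: "car", 2: "car"}
print(to_semantic(instance_map, instance_class)[0])   # ['car', 'car', 'car', 'car']
print(count_instances(instance_map, instance_class))  # Counter({'car': 2})
```

The semantic row alone no longer shows where one car ends and the next begins, so it cannot be used to count them.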

Does Instance Segmentation require heavy GPU resources?

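It used to be far more compute-intensive than plain bounding-box detection, but lightweight models such as YOLOv8-seg now run in real time on edge hardware such as NVIDIA Jetson modules on AGVs. (Fast-SCNN is similarly lightweight but produces semantic rather than instance masks.)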

How does it handle overlapping objects (occlusion)?

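Because each detected object gets its own mask, overlapping objects are kept separate even when they touch or partly cover each other. Amodal variants go further and learn to predict an object's full shape from its visible portion, inferring edges even when it is half-hidden, which helps in cluttered warehouses.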

What is the typical latency for onboard processing?

Latency varies by hardware and model, but on an NVIDIA Jetson Orin a typical lightweight model reaches roughly 30-60 FPS (about 16-33 ms per frame), which keeps pace with common warehouse AGV speeds.
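
To relate those frame rates to vehicle motion, the frame period is 1/FPS and the distance traveled between frames is speed multiplied by that period. The 2 m/s speed below is an assumed example, not a figure from this page, and the frame period is only one part of end-to-end latency (capture, transfer, and post-processing add to it):

```python
def frame_budget(fps, speed_mps):
    """Return (milliseconds per frame, meters traveled between frames)."""
    period_s = 1.0 / fps
    return period_s * 1000.0, speed_mps * period_s


for fps in (30, 60):
    ms, dist = frame_budget(fps, speed_mps=2.0)  # 2 m/s is an assumed AGV speed
    print(f"{fps} FPS: {ms:.1f} ms per frame, {dist * 100:.1f} cm traveled")
# 30 FPS: 33.3 ms per frame, 6.7 cm traveled
# 60 FPS: 16.7 ms per frame, 3.3 cm traveled
```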

Is annotation for training data more expensive?

Yes. Labeling for instance segmentation means drawing tight polygons rather than quick boxes, although synthetic data and automatic labeling tools are substantially reducing that effort.
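
Polygon labels are converted into pixel masks before training. A pure-Python sketch of that rasterization, assuming vertices in pixel units and marking a pixel as inside when its center passes the even-odd ray-casting test (`polygon_to_mask` is a hypothetical name; annotation libraries provide faster equivalents):

```python
def polygon_to_mask(polygon, height, width):
    """Rasterize a polygon annotation into a binary mask.

    polygon: list of (x, y) vertices in pixel units (x = column, y = row).
    A pixel is inside if its center (c + 0.5, r + 0.5) passes the even-odd
    ray-casting test.
    """
    mask = [[0] * width for _ in range(height)]
    n = len(polygon)
    for r in range(height):
        y = r + 0.5
        for c in range(width):
            x = c + 0.5
            inside = False
            for i in range(n):
                x1, y1 = polygon[i]
                x2, y2 = polygon[(i + 1) % n]
                # Only edges that straddle the horizontal line through y count.
                if (y1 > y) != (y2 > y):
                    x_cross = x1 + (y - y1) * (x2 - x1) / (y2 - y1)
                    if x < x_cross:
                        inside = not inside  # each crossing to the right flips state
            mask[r][c] = int(inside)
    return mask


box_label = [(1, 1), (4, 1), (4, 3), (1, 3)]  # 3 x 2 pixel rectangle
for row in polygon_to_mask(box_label, height=4, width=5):
    print(row)
```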

Can this technology work with transparent or reflective objects?

Transparent and reflective materials such as shrink wrap or glass often confuse standard RGB cameras. It helps to fuse RGB with depth (RGB-D) or LiDAR intensity data, and to train on datasets that include plenty of these difficult materials.

How does lighting affect segmentation accuracy?

Like any vision system, it can be thrown off by extreme contrast or near-darkness. However, segmentation networks tolerate lighting changes better than basic template matchers, because they learn shapes and context rather than relying only on color or texture.

What is "Panoptic Segmentation" and should we use it?

Panoptic segmentation combines instance segmentation (countable, trackable 'things') with semantic segmentation (background 'stuff' such as floors and walls). For AGVs it gives the most complete scene breakdown but needs more compute, so it suits cases where you need object-level awareness and drivable-area estimation at the same time.
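
A panoptic map can be assembled from a semantic 'stuff' map and a list of instance 'things'. This sketch assumes integer class IDs and encodes each pixel as class_id * 1000 + instance_index, with index 0 for stuff; that encoding, the class numbers, and `merge_panoptic` are assumptions for illustration (datasets use different conventions):

```python
FLOOR, WALL = 1, 2        # hypothetical 'stuff' class IDs
PALLET, PERSON = 10, 20   # hypothetical 'thing' class IDs


def merge_panoptic(stuff_map, things, divisor=1000):
    """Combine semantic 'stuff' with instance 'things' into one panoptic map.

    stuff_map: 2-D list of stuff class IDs.
    things: list of (class_id, mask), mask = set of (row, col) pixels;
        later entries win any overlap.
    Each output pixel is class_id * divisor + instance_index, where stuff
    pixels use index 0 because 'stuff' has no separate instances.
    """
    pan = [[cls * divisor for cls in row] for row in stuff_map]
    per_class = {}  # running instance counter for each thing class
    for class_id, mask in things:
        per_class[class_id] = per_class.get(class_id, 0) + 1
        for r, c in mask:
            pan[r][c] = class_id * divisor + per_class[class_id]
    return pan


stuff = [[FLOOR, FLOOR, FLOOR],
         [WALL, WALL, WALL]]
things = [(PALLET, {(0, 0)}), (PALLET, {(0, 2)}), (PERSON, {(1, 1)})]
pan = merge_panoptic(stuff, things)
print(pan)  # [[10001, 1000, 10002], [2000, 20001, 2000]]

# Drivable area: pixels whose class is floor and that no object occupies.
drivable = [[v // 1000 == FLOOR for v in row] for row in pan]
```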

Can we implement this on existing AGV hardware?

It depends on your current setup. If your AGVs run only on older PLCs or basic microcontrollers, you will likely need to add an AI accelerator, such as an NVIDIA Jetson module or a USB TPU stick, to run the neural networks smoothly.

How reliable is it for safety-critical stop functions?

Even when AI-based segmentation is highly accurate, it remains probabilistic. For safety certification under standards such as ISO 13849, it is best used as a supplementary awareness layer alongside certified safety LiDAR scanners and safety PLCs, not as the primary stop trigger.
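
The layering described above can be expressed as a rule: the AI layer may only reduce speed and can never override the certified safety chain. A software-level sketch, assuming speeds in m/s and a hypothetical `commanded_speed` function; in a certified system the protective stop itself lives in the safety-rated LiDAR and PLC, independent of code like this:

```python
def commanded_speed(planner_mps, safety_limit_mps, ai_advisory_mps=None):
    """Speed sent to the drives, in m/s.

    safety_limit_mps comes from the certified chain (safety LiDAR + safety PLC)
    and always applies. The segmentation layer can only lower the result; a
    missing or invalid (negative or NaN) advisory is ignored, so the AI layer
    can never raise the speed above the certified limit.
    """
    speed = min(planner_mps, safety_limit_mps)
    if ai_advisory_mps is not None and ai_advisory_mps >= 0:
        speed = min(speed, ai_advisory_mps)
    return speed


print(commanded_speed(1.5, 1.5, 0.75))  # 0.75: AI sees a worker and slows down
print(commanded_speed(1.5, 0.0, 1.5))   # 0.0: certified stop wins regardless of AI
```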

Does it assist with SLAM (Simultaneous Localization and Mapping)?

Yes, this is the idea behind 'Semantic SLAM.' By identifying dynamic objects such as people or forklifts through segmentation, the SLAM algorithm can exclude them from map updates, producing much cleaner static maps for long-term navigation.
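
Excluding dynamic objects before a map update can be as simple as dropping every 3-D point whose source pixel belongs to a dynamic class. The sketch assumes RGB-D points that remember the pixel they came from; the class names, the choice of which classes count as dynamic, and `static_points` are hypothetical:

```python
DYNAMIC_CLASSES = {"person", "forklift"}  # hypothetical; deployment-specific


def static_points(points, instance_map, instance_class, dynamic=DYNAMIC_CLASSES):
    """Keep only 3-D points that do not fall on dynamic objects.

    points: list of (row, col, xyz) tuples, each a 3-D point plus the pixel
        it was measured at.
    instance_map: 2-D list of instance IDs for the same frame (0 = background).
    instance_class: {instance_id: class_name}.
    """
    kept = []
    for r, c, xyz in points:
        # Background pixels (ID 0) have no class entry and are always kept.
        cls = instance_class.get(instance_map[r][c])
        if cls not in dynamic:
            kept.append(xyz)
    return kept


instance_map = [[0, 1],
                [2, 0]]
instance_class = {1: "person", 2: "pallet"}
points = [(0, 0, (0.0, 0.0, 1.0)), (0, 1, (1.0, 0.0, 1.0)),
          (1, 0, (0.0, 1.0, 1.0)), (1, 1, (1.0, 1.0, 1.0))]
print(static_points(points, instance_map, instance_class))
# [(0.0, 0.0, 1.0), (0.0, 1.0, 1.0), (1.0, 1.0, 1.0)]
```

In practice masks are often dilated by a few pixels first, because depth readings near object edges can still belong to the moving object.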

What's the usual timeline for deploying a custom solution?

For a custom setup (for example, unique packaging), the whole process of data collection, annotation, training, and fine-tuning typically takes 4-8 weeks. Using pre-trained models for standard objects such as pallets or people can shorten that to just days.