Domain Randomization

Close the 'Sim-to-Real' gap by training AGVs in wildly varied simulated worlds. Domain Randomization makes your mobile robots robust to real-world chaos, cutting down on surprises at deployment.

Core Concepts

Visual Variance

Randomizing textures, colors, and lighting stops the neural network from fixating on specific visual details, pushing it to learn shapes and depth instead.
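A minimal sketch of visual randomization on finished pixels, assuming an image is a list of rows of (r, g, b) tuples with 0-255 channels. The `randomize_visuals` helper and its jitter ranges are hypothetical; real pipelines usually randomize textures and lights inside the renderer instead.

```python
import random

Pixel = tuple[int, int, int]
Image = list[list[Pixel]]

def clamp(v: float) -> int:
    """Round and clamp a channel value into the valid 0-255 range."""
    return max(0, min(255, int(round(v))))

def randomize_visuals(img: Image, rng: random.Random,
                      brightness=(0.5, 1.5), tint=(-40, 40)) -> Image:
    """Apply one random brightness scale and one per-channel color cast to the whole image.

    The ranges are illustrative assumptions, not tuned values.
    """
    scale = rng.uniform(*brightness)                # global lighting intensity
    shift = [rng.uniform(*tint) for _ in range(3)]  # per-channel color cast
    return [
        [tuple(clamp(c * scale + s) for c, s in zip(px, shift)) for px in row]
        for row in img
    ]

if __name__ == "__main__":
    rng = random.Random(0)
    gray = [[(128, 128, 128)] * 4 for _ in range(2)]
    for _ in range(3):
        print(randomize_visuals(gray, rng)[0][0])  # same scene, different apparent color
```

Because the same scale and shift apply to every pixel, edges and shapes survive untouched; only appearance changes, which is exactly the cue the network is being nudged away from.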

Dynamics Randomization

Vary simulated physics such as friction, payload mass, and motor drag so policies stay robust even as hardware ages and wears.
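Here is a minimal sketch, assuming a toy 1-D point-mass model of an AGV driving forward. The parameter names and ranges (`friction`, `payload_kg`, `motor_drag`) are hypothetical stand-ins for whatever your simulator exposes; the point is that each episode draws fresh physics, so the same motor command produces different motion.

```python
import random
from dataclasses import dataclass

G = 9.81  # gravitational acceleration, m/s^2

@dataclass
class Dynamics:
    friction: float    # rolling-friction coefficient (illustrative range below)
    payload_kg: float  # extra mass carried on top of the base chassis
    motor_drag: float  # linear drag on velocity, N per (m/s)

def sample_dynamics(rng: random.Random) -> Dynamics:
    """Draw one set of physics parameters for an episode (hypothetical ranges)."""
    return Dynamics(
        friction=rng.uniform(0.01, 0.05),
        payload_kg=rng.uniform(0.0, 150.0),
        motor_drag=rng.uniform(5.0, 20.0),
    )

def simulate(d: Dynamics, force_n: float, steps: int = 100, dt: float = 0.05,
             base_mass_kg: float = 100.0) -> float:
    """Euler-integrate forward speed under a constant motor force; return final speed."""
    mass = base_mass_kg + d.payload_kg
    v = 0.0
    for _ in range(steps):
        resist = d.friction * mass * G + d.motor_drag * v
        a = (force_n - resist) / mass
        v = max(0.0, v + a * dt)  # the AGV never rolls backwards in this toy model
    return v

if __name__ == "__main__":
    rng = random.Random(42)
    for _ in range(3):
        d = sample_dynamics(rng)
        print(d, round(simulate(d, force_n=300.0), 2))
```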

Sensor Noise

Add Gaussian noise to simulate LIDAR and camera imperfections, so the robot doesn't rely on flawless simulated data.
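A minimal sketch of additive Gaussian noise, assuming a LIDAR scan is a list of ranges in meters and a grayscale camera frame is a flat list of 0-255 intensities. The `sigma` values and the 30 m maximum range are illustrative assumptions, not calibrated sensor specs.

```python
import random

def noisy_ranges(ranges, rng, sigma_m=0.02, max_range_m=30.0):
    """Add zero-mean Gaussian noise to each LIDAR return, clipped to the sensor's valid span."""
    return [min(max_range_m, max(0.0, r + rng.gauss(0.0, sigma_m))) for r in ranges]

def noisy_pixels(pixels, rng, sigma=8.0):
    """Add per-pixel Gaussian noise to grayscale intensities, clamped to 0-255."""
    return [max(0, min(255, round(p + rng.gauss(0.0, sigma)))) for p in pixels]

if __name__ == "__main__":
    rng = random.Random(7)
    # Randomize the noise level itself per episode, so no single sigma looks "normal".
    sigma = rng.uniform(0.01, 0.05)
    print(noisy_ranges([1.0, 2.5, 10.0], rng, sigma_m=sigma))
    print(noisy_pixels([0, 128, 255], rng))
```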

Distractor Objects

Drop in random shapes and distractions to train AGVs to spot key navigation targets amid clutter.
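A minimal sketch of distractor placement, assuming a flat 2-D floor of `width` x `height` meters and objects approximated as circles. `place_distractors` and its shape set are hypothetical; it uses rejection sampling to scatter clutter while keeping a clear radius around the navigation target so the target is never fully buried.

```python
import math
import random

SHAPES = ("box", "cylinder", "cone")  # hypothetical distractor shape set

def place_distractors(rng, n, width, height, target, clear_radius=1.0,
                      radius_range=(0.1, 0.5), max_tries=1000):
    """Scatter up to n non-overlapping objects (shape, x, y, r) around a target.

    Rejection sampling: candidates that crowd the target or overlap an
    already-placed object are discarded and redrawn.
    """
    placed = []
    for _ in range(max_tries):
        if len(placed) >= n:
            break
        r = rng.uniform(*radius_range)
        x, y = rng.uniform(r, width - r), rng.uniform(r, height - r)
        if math.dist((x, y), target) < clear_radius + r:
            continue  # too close to the navigation target
        if any(math.dist((x, y), (ox, oy)) < r + orad for _, ox, oy, orad in placed):
            continue  # overlaps an existing distractor
        placed.append((rng.choice(SHAPES), x, y, r))
    return placed

if __name__ == "__main__":
    rng = random.Random(0)
    for obj in place_distractors(rng, n=5, width=10.0, height=8.0, target=(5.0, 4.0)):
        print(obj)
```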

Procedural Environments

Ditch fixed maps: spawn walls and obstacles on the fly. The policy can't memorize layouts, so it has to learn general navigation skills.
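A minimal sketch of procedural map generation, assuming the world is an occupancy grid (`#` for wall, `.` for free space). `generate_map` is a hypothetical helper: it scatters random wall cells, then uses breadth-first search to reject any layout where the goal is unreachable, so every generated map is a valid training episode.

```python
import random
from collections import deque

def reachable(grid, start, goal):
    """Breadth-first search over free cells using 4-connected moves."""
    rows, cols = len(grid), len(grid[0])
    seen, queue = {start}, deque([start])
    while queue:
        r, c = queue.popleft()
        if (r, c) == goal:
            return True
        for dr, dc in ((1, 0), (-1, 0), (0, 1), (0, -1)):
            nr, nc = r + dr, c + dc
            if (0 <= nr < rows and 0 <= nc < cols
                    and grid[nr][nc] == "." and (nr, nc) not in seen):
                seen.add((nr, nc))
                queue.append((nr, nc))
    return False

def generate_map(rng, rows=8, cols=12, wall_prob=0.25, max_tries=100):
    """Return a random grid whose top-left start can reach its bottom-right goal."""
    start, goal = (0, 0), (rows - 1, cols - 1)
    for _ in range(max_tries):
        grid = [["#" if rng.random() < wall_prob else "." for _ in range(cols)]
                for _ in range(rows)]
        grid[start[0]][start[1]] = grid[goal[0]][goal[1]] = "."
        if reachable(grid, start, goal):
            return grid
    raise RuntimeError("no solvable map found; lower wall_prob")

if __name__ == "__main__":
    for row in generate_map(random.Random(1)):
        print("".join(row))
```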

Robust Transfer

The endgame: reality becomes just another variation of the simulation. Master enough extreme sim chaos, and the real world feels familiar.

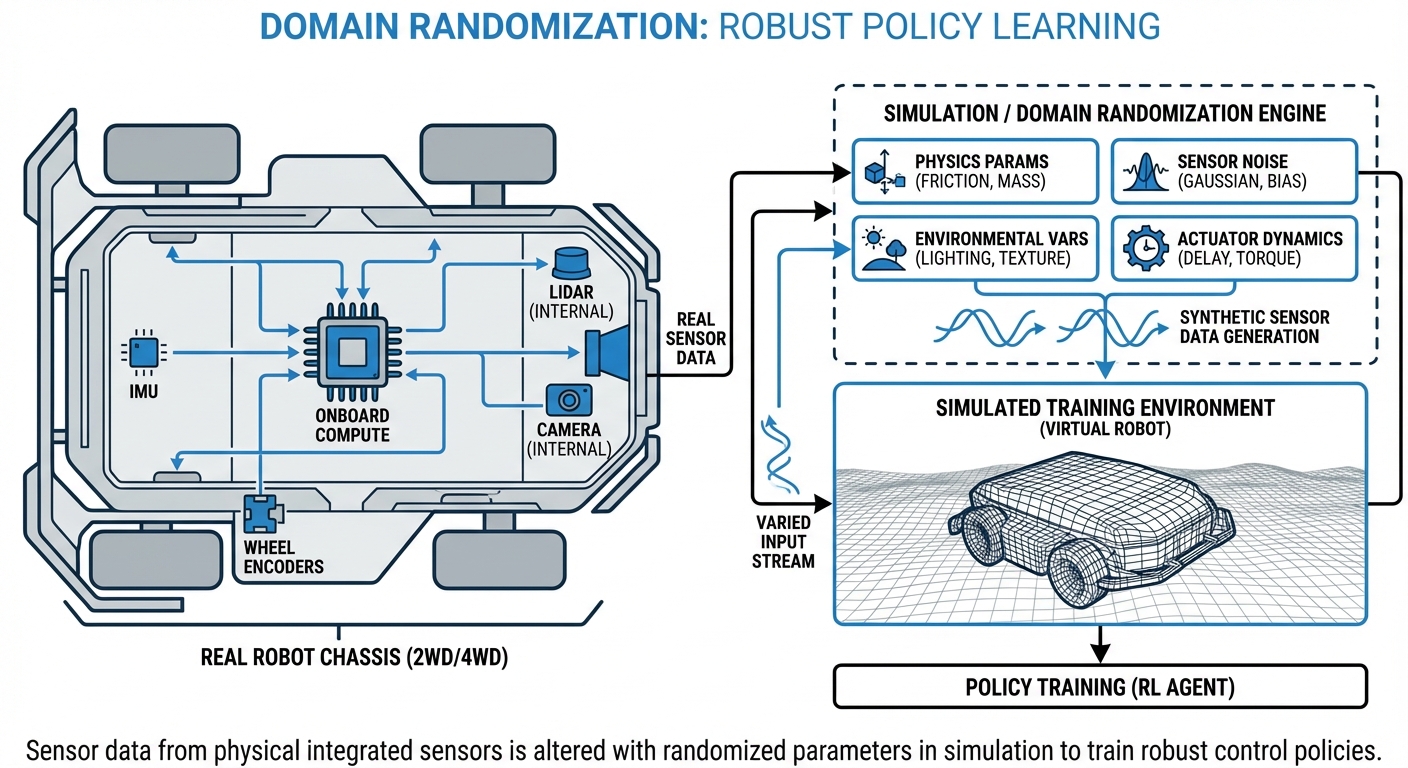

How It Works

Classic sim training often fails because simulators are too clean. Real sensors are noisy, motors lag, and lighting varies. Domain Randomization deliberately dirties the simulation to match.

Instead of chasing one perfect photorealistic sim (expensive and hard to build), generate thousands of imperfect variants, with floor colors, lighting intensity, and wheel grip changing on every run.

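By exposing the network to this wide spread of parameters, you force it to focus on what stays constant across every variant (the navigation essentials) and to ignore the noise. On a real AGV, the world then looks like just another sim rollout it has already mastered.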
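The loop can be sketched like this, assuming a hypothetical `run_episode` placeholder standing in for your simulator and learner. The key pattern is that a fresh scene configuration (floor color, light intensity, wheel grip) is drawn at every reset; seeding the generator keeps the whole sequence of variants reproducible. All ranges are illustrative assumptions.

```python
import random
from dataclasses import dataclass

@dataclass(frozen=True)
class SceneConfig:
    floor_rgb: tuple   # floor color, 0-255 per channel
    light_lux: float   # overall light intensity
    wheel_grip: float  # tire-floor friction coefficient

def sample_scene(rng: random.Random) -> SceneConfig:
    """Draw one randomized scene (hypothetical ranges)."""
    return SceneConfig(
        floor_rgb=tuple(rng.randint(0, 255) for _ in range(3)),
        light_lux=rng.uniform(50.0, 2000.0),
        wheel_grip=rng.uniform(0.2, 1.0),
    )

def run_episode(cfg: SceneConfig) -> float:
    """Hypothetical placeholder: a real version would reset the sim with cfg,
    roll out the policy, update it, and return the episode reward."""
    return 0.0

def train(num_episodes: int, seed: int = 0) -> list:
    """Run training, drawing a new scene variant at every episode reset."""
    rng = random.Random(seed)
    configs = []
    for _ in range(num_episodes):
        cfg = sample_scene(rng)
        run_episode(cfg)
        configs.append(cfg)
    return configs
```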

Real-World Applications

Warehouse Logistics

Warehouses deal with shifting lighting (from skylights to LEDs) and floor debris. Domain Randomization lets AGVs reliably locate pallets regardless of box color or shadows.

Outdoor Agriculture

Farm robots contend with mud, rain, shifting sunlight, and crops at different growth stages. Randomizing terrain grip and leaf clutter in simulation prepares them for the field.

Last-Mile Delivery

Sidewalk delivery robots encounter varied pavement, snow, and sudden obstacles. Heavy randomization in simulation helps them hold steady paths over uncertain ground.

Industrial Manufacturing

In busy factories where machines shift around and layouts change all the time, robots trained with geometry randomization can navigate safely without remaking maps for every tweak.

Frequently Asked Questions

What's the big difference between Domain Randomization and Photorealistic Simulation?

Photorealistic sims try to mirror reality pixel for pixel, but they're extremely expensive to compute and never quite perfect. Domain Randomization leans into the chaos instead: it randomizes parameters widely so the model learns to brush off sim-to-real mismatches. For building tough, reliable robot skills, this often beats chasing flawless visuals.

Does Domain Randomization increase training time?

Yes, usually. Because the environment keeps shifting, the neural network needs more training episodes to converge on a stable policy than it would in a fixed setup. The payoff? A much more robust model that needs little real-world tuning.

What is Automatic Domain Randomization (ADR)?

ADR takes it further: it automatically increases the difficulty and variety of the randomization as the robot learns. Instead of overwhelming the policy from the start, it builds a 'curriculum' that eases in complexity.
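A simplified sketch of the ADR idea for one scalar parameter, assuming your evaluation loop reports a recent success rate. The `AdrParam` class, the thresholds, and the step size are hypothetical choices; published ADR variants differ in details (for example, where along the range performance is measured), so treat this as the shape of the curriculum, not a reference implementation.

```python
import random
from dataclasses import dataclass

@dataclass
class AdrParam:
    nominal: float   # value the range starts from (e.g. measured friction)
    lo: float        # current lower bound of the randomization range
    hi: float        # current upper bound
    step: float      # how much to widen or narrow per update
    hard_min: float  # physical limits the range may never exceed
    hard_max: float

    def sample(self, rng: random.Random) -> float:
        """Draw a value from the current randomization range."""
        return rng.uniform(self.lo, self.hi)

    def update(self, success_rate: float, expand_at: float = 0.8,
               shrink_at: float = 0.5) -> None:
        """Widen the range when the policy does well, narrow it when it struggles."""
        if success_rate >= expand_at:
            self.lo = max(self.hard_min, self.lo - self.step)
            self.hi = min(self.hard_max, self.hi + self.step)
        elif success_rate <= shrink_at:
            # Never shrink past the nominal value itself.
            self.lo = min(self.nominal, self.lo + self.step)
            self.hi = max(self.nominal, self.hi - self.step)
        # Between the two thresholds, the range is left unchanged.

if __name__ == "__main__":
    friction = AdrParam(nominal=0.6, lo=0.6, hi=0.6, step=0.05, hard_min=0.1, hard_max=1.2)
    for rate in [0.9, 0.9, 0.9, 0.4, 0.9]:
        friction.update(rate)
        print(f"success={rate:.1f} -> range [{friction.lo:.2f}, {friction.hi:.2f}]")
```

The training starts on the nominal value alone and only earns a wider range by succeeding, which is the "curriculum" described above.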

Can you use this for non-visual sensors like LIDAR?

You bet. For LIDAR, we mix up dropout rates, distance noise, and surface reflectivity. For IMUs (accelerometers/gyroscopes), we tweak bias, white noise, and drift so localization doesn't blindly trust glitchy sensors.
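A minimal sketch of both ideas, assuming scans are lists of ranges in meters with a per-return surface reflectivity in [0, 1], and the IMU is a single gyroscope axis in rad/s. All magnitudes are illustrative; modeling reflectivity as a higher dropout chance on dark surfaces is a simplifying assumption, not a physical sensor model.

```python
import math
import random

def corrupt_lidar(ranges, reflectivity, rng, base_dropout=0.02, range_sigma=0.03):
    """Drop some returns (reported as inf) and add distance noise to the rest.

    Returns off low-reflectivity surfaces (reflectivity near 0) drop out more often.
    """
    out = []
    for r, refl in zip(ranges, reflectivity):
        p_drop = base_dropout + (1.0 - refl) * 0.2  # hypothetical reflectivity model
        if rng.random() < p_drop:
            out.append(math.inf)  # no return
        else:
            out.append(r + rng.gauss(0.0, range_sigma))
    return out

class NoisyGyro:
    """Gyro with a per-episode constant bias, white noise, and random-walk drift."""

    def __init__(self, rng, bias_range=0.01, white_sigma=0.002, drift_sigma=0.0005):
        self.rng = rng
        self.bias = rng.uniform(-bias_range, bias_range)  # randomized once per episode
        self.drift = 0.0
        self.white_sigma = white_sigma
        self.drift_sigma = drift_sigma

    def read(self, true_rate):
        """Return one corrupted reading of the true angular rate."""
        self.drift += self.rng.gauss(0.0, self.drift_sigma)  # drift accumulates over time
        return true_rate + self.bias + self.drift + self.rng.gauss(0.0, self.white_sigma)

if __name__ == "__main__":
    rng = random.Random(0)
    print(corrupt_lidar([2.0, 4.0, 6.0], [0.9, 0.1, 0.5], rng))
    gyro = NoisyGyro(rng)
    # The true rate is 0.0; readings carry bias, drift, and white noise.
    print([round(gyro.read(0.0), 4) for _ in range(5)])
```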

How do we define the range of randomization parameters?

This takes real domain expertise. Ranges have to cover real-world values with margin: if real floor friction falls between 0.5 and 0.8, you might simulate 0.2 to 1.0. Too narrow, and the policy won't transfer to the real world; too wide, and training may never converge.
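One way to encode that rule of thumb, assuming you have measured a real-world range and picked an absolute safety margin. `pad_range` and `covers` are hypothetical helpers; the `bounds` argument clips the padded range to physically meaningful values, and the 0.0 to 1.0 bound used here is an assumption chosen to reproduce the friction example.

```python
def pad_range(real_lo, real_hi, margin, bounds):
    """Widen a measured [real_lo, real_hi] range by `margin` on each side, then clip to `bounds`."""
    if real_lo > real_hi:
        raise ValueError("real_lo must not exceed real_hi")
    lo = max(bounds[0], real_lo - margin)
    hi = min(bounds[1], real_hi + margin)
    return lo, hi

def covers(sim_range, real_range):
    """True if the simulated range fully contains the measured real-world range."""
    return sim_range[0] <= real_range[0] and real_range[1] <= sim_range[1]

if __name__ == "__main__":
    sim = pad_range(0.5, 0.8, margin=0.3, bounds=(0.0, 1.0))
    print(sim, covers(sim, (0.5, 0.8)))  # the 0.2 to 1.0 friction example
```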

What if the real world lands outside your randomized range?

That's an 'out-of-distribution' problem: the robot may fail or behave erratically. Engineers guard against it by padding ranges well beyond expected real-world values as a safety buffer.
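A minimal runtime guard, assuming the robot logs real-world estimates of the same parameters you randomized (for example, estimated payload or measured floor friction). `out_of_distribution` is a hypothetical helper that flags any value outside its training range, so the system can slow down or hand off control instead of trusting a policy that never saw those conditions.

```python
def out_of_distribution(train_ranges, observed):
    """Return {name: value} for each observed parameter outside its training range.

    Parameters with no recorded training range are flagged too, since the
    policy was never exposed to any variation in them.
    """
    flagged = {}
    for name, value in observed.items():
        bounds = train_ranges.get(name)
        if bounds is None or not (bounds[0] <= value <= bounds[1]):
            flagged[name] = value
    return flagged

if __name__ == "__main__":
    ranges = {"friction": (0.2, 1.0), "payload_kg": (0.0, 150.0)}
    print(out_of_distribution(ranges, {"friction": 0.1, "payload_kg": 90.0}))
```

Flagging unknown parameters is a deliberately conservative choice; you could instead ignore them if your logging includes signals the policy never depended on.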

Is fine-tuning on real hardware still necessary?

Often, yes, but far less than when starting from scratch. Domain Randomization can get you 90-95% of the way there with zero-shot transfer (no real-world training data at all). A short real-world fine-tune then polishes motion and precision.

Does this work for Reinforcement Learning (RL) only?

It's most prominent in Deep RL, but it's also widely used to train Computer Vision models (like object detectors) on synthetic data, avoiding the grind of labeling real-world images.
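A minimal sketch of why synthetic data skips the labeling step, assuming a toy grayscale image (nested lists of 0-255 values) with one rectangular "object". Because `make_sample` (a hypothetical helper) draws the object itself, it knows the exact bounding box, so every randomized image comes with a free, pixel-accurate label; real pipelines do the same with a renderer and 3D assets.

```python
import random

def make_sample(rng, width=64, height=48):
    """Render one randomized image containing a rectangle; return (image, bbox).

    bbox is (x_min, y_min, x_max, y_max) in pixel coordinates, inclusive.
    """
    background = rng.randint(0, 255)  # random background brightness
    # Object brightness differs from the background by at least 60 levels.
    obj = (background + rng.randint(60, 195)) % 256
    w, h = rng.randint(5, width // 2), rng.randint(5, height // 2)  # random size
    x0, y0 = rng.randint(0, width - w), rng.randint(0, height - h)  # random position
    img = [[background] * width for _ in range(height)]
    for y in range(y0, y0 + h):
        for x in range(x0, x0 + w):
            img[y][x] = obj
    return img, (x0, y0, x0 + w - 1, y0 + h - 1)

if __name__ == "__main__":
    rng = random.Random(0)
    dataset = [make_sample(rng) for _ in range(1000)]  # 1000 labeled images, zero hand-labeling
    print(dataset[0][1])
```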

What physics engines are best for Domain Randomization?

Pick fast simulators that can run many environments in parallel. Industry favorites include NVIDIA Isaac Sim, MuJoCo, PyBullet, and Gazebo. Isaac Sim stands out for pairing photorealistic rendering with fast, GPU-accelerated physics.

How does this affect the cost of robot development?

It slashes hardware damage, since crashes stay virtual. It also skips collecting and labeling massive real-world datasets. The trade-off? More compute for simulation runs.

Can randomizing visual textures confuse the robot?

Only if the task hinges on color alone ('follow the yellow line'), where shifting lighting can wreck that cue. Otherwise, randomization pushes the model to rely on stable features like edges, depth, and geometry, so real-world lighting changes become much less of a problem.

What is the "Reality Gap"?

The Reality Gap is the mismatch between a simulator's physics and rendering and the real world. It's the main reason sim-trained robots fail in real life, and Domain Randomization is one of the most effective ways to close it.